Machine Learning TV YouTube News & Videos

Machine Learning TV Articles

Decoding Time Series Patterns: Trends, Seasonality, and Predictions

Machine Learning TV explores time series patterns like trend, seasonality, and autocorrelation, offering insights into predicting and analyzing data with real-world examples.
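As a rough illustration of how autocorrelation exposes seasonality, the sketch below builds a hypothetical series (a linear trend plus a pattern that repeats every 4 steps), removes the trend by differencing, and measures the sample autocorrelation at a few lags. The series and the function name are assumptions made for this example, not taken from the video.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of `series` at a positive integer lag."""
    n = len(series)
    mean = sum(series) / n
    var = sum((x - mean) ** 2 for x in series)
    cov = sum((series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n))
    return cov / var

# Hypothetical data: upward trend plus a seasonal pattern of period 4.
season = [0.0, 2.0, 0.0, -2.0]
series = [0.1 * t + season[t % 4] for t in range(40)]

# First differences remove the linear trend and keep the seasonal pattern.
diffed = [series[t] - series[t - 1] for t in range(1, len(series))]

for lag in (1, 2, 4):
    print(lag, round(autocorrelation(diffed, lag), 3))
```

A strong positive value at lag 4 and a negative value at lag 2 are what a period-4 seasonal pattern would be expected to produce.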

Mastering Language Model Evaluation: Perplexity and Text Coherence

Learn how to evaluate language models using perplexity, a key metric that measures how well a model predicts held-out text. Split data into training, validation, and test sets to assess model performance. Lower perplexity means the model assigns higher probability to the text it is evaluated on. Explore bigram and trigram models for improved text coherence.
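To make the metric concrete, here is a minimal sketch of perplexity for a bigram model. It assumes add-one (Laplace) smoothing and a toy corpus, both chosen for illustration; it also assumes every evaluated token appears in the training vocabulary.

```python
import math
from collections import Counter

def train_bigram(tokens):
    """Count contexts and bigrams from a list of training tokens."""
    contexts = Counter(tokens[:-1])            # how often each word starts a bigram
    bigrams = Counter(zip(tokens, tokens[1:]))
    vocab = set(tokens)
    return contexts, bigrams, vocab

def perplexity(model, tokens):
    """exp of the average negative log-probability per predicted token."""
    contexts, bigrams, vocab = model
    v = len(vocab)
    log_prob = 0.0
    n = 0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing keeps unseen bigrams from getting zero probability.
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + v)
        log_prob += math.log(p)
        n += 1
    return math.exp(-log_prob / n)

# Toy training corpus (hypothetical).
model = train_bigram("the cat sat on the mat the cat ate".split())
fluent = perplexity(model, "the cat sat".split())
scrambled = perplexity(model, "sat the on".split())
print(fluent, scrambled)
```

The word order seen in training scores a lower perplexity than the scrambled one, which is the behavior the metric is meant to capture.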

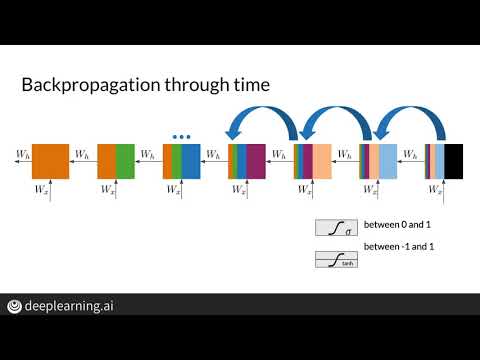

Mastering Vanishing Gradients: LSTM Solutions for RNN Efficiency

Explore how Machine Learning TV tackles the vanishing gradient problem in RNNs using LSTMs. Discover complementary techniques such as careful weight initialization and gradient clipping (which guards against exploding gradients) to make training more stable and efficient.
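Gradient clipping is simple enough to sketch in a few lines. The version below clips by global L2 norm, which is one common variant; the function name and the flat list representation of the gradient are assumptions for illustration. Note that clipping caps gradients that grow too large; it does not by itself restore gradients that have vanished.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale `grads` so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)          # already small enough, return a copy unchanged
    scale = max_norm / norm         # shrink every component by the same factor
    return [g * scale for g in grads]

clipped = clip_by_norm([3.0, 4.0], max_norm=1.0)    # original norm is 5
untouched = clip_by_norm([0.3, 0.4], max_norm=1.0)  # original norm is 0.5
print(clipped, untouched)
```

Because every component is scaled by the same factor, the direction of the update is preserved and only its length changes.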

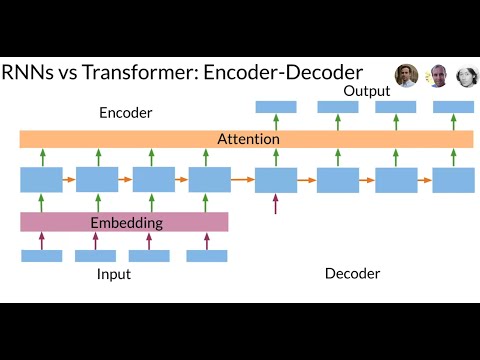

Revolutionizing Neural Networks: The Power of Transformer Models

Discover how the Transformer model revolutionizes neural networks, outperforming RNNs in sequence data processing. Say goodbye to slow computations and vanishing gradients with the Transformer's attention-based approach and multi-head layers. Embrace the future of efficient translation and sequence tasks!
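The core of the attention-based approach is scaled dot-product attention. The sketch below is a single-head version in plain Python, without learned projections, masking, or batching; those omissions and the toy inputs are simplifications assumed for this example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, weights)."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Toy inputs (hypothetical): one query that matches the first key.
outputs, weights = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0], [20.0]],
)
print(outputs, weights)
```

Every query attends to all positions in one pass, with no recurrence over time steps, which is why this computation parallelizes well compared with an RNN.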

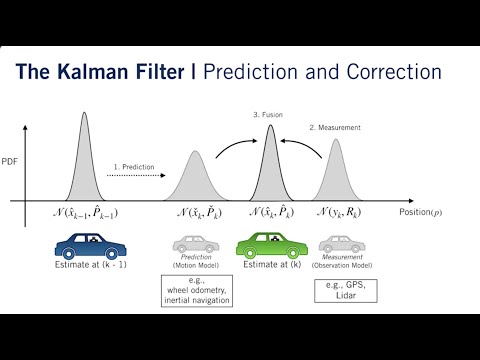

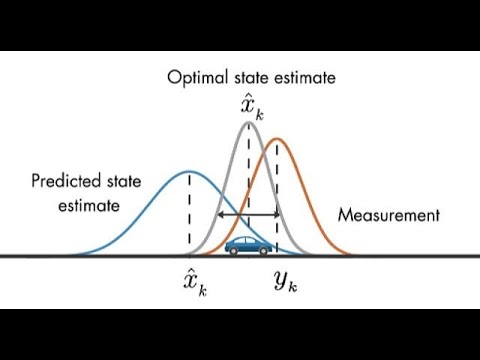

Unveiling the Kalman Filter: From NASA's Apollo Missions to Modern Machine Learning

Discover the Kalman filter's role in modern machine learning, its history, application in NASA's Apollo missions, and two-stage prediction-correction process. Explore its impact on state estimation accuracy and the unscented transform as a modern alternative.
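The two-stage prediction-correction process can be sketched for the simplest case: a scalar state assumed to stay constant between measurements. The parameter names (`q` for process noise variance, `r` for measurement noise variance) and the measurement values are assumptions for illustration.

```python
def kalman_step(x, p, z, q, r):
    """One predict-correct cycle of a scalar Kalman filter.

    x, p: previous state estimate and its variance
    z:    new measurement
    q, r: process and measurement noise variances
    """
    # Prediction: the state is assumed constant, so only uncertainty grows.
    x_pred = x
    p_pred = p + q
    # Correction: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1 - k) * p_pred
    return x_new, p_new

# Hypothetical noisy readings of a quantity whose true value is near 1.0.
x, p = 0.0, 1.0
for z in [1.1, 0.9, 1.05, 0.95]:
    x, p = kalman_step(x, p, z, q=0.0, r=0.1)
print(x, p)
```

Each correction shrinks the variance `p`, so later measurements move the estimate less than earlier ones.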

Decoding Shapley Value: Fair Value Distribution in Cooperative Games

Explore the Shapley value in cooperative game theory, a method for distributing value fairly based on each player's individual contributions. Learn about its axioms, including efficiency and additivity, and the theorem establishing the Shapley value as the unique allocation that satisfies them. Achieve equitable outcomes in group settings with this robust allocation approach.
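For a small game, the Shapley value can be computed exactly by averaging each player's marginal contribution over every order in which players could join. The sketch below does this by brute force; the two-player game and its payoffs are hypothetical.

```python
from itertools import permutations

def shapley_values(players, value):
    """Exact Shapley values by enumerating all join orders.

    `value` maps a frozenset of players to that coalition's worth.
    """
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining this coalition.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}

# Hypothetical game: A alone earns 10, B alone earns 20, together 50.
worth = {
    frozenset(): 0,
    frozenset({"A"}): 10,
    frozenset({"B"}): 20,
    frozenset({"A", "B"}): 50,
}
phi = shapley_values(["A", "B"], lambda s: worth[s])
print(phi)
```

The two shares add up to the worth of the full coalition, which is the efficiency axiom in action. Enumerating all orderings grows factorially with the number of players, so this approach is only practical for small games.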

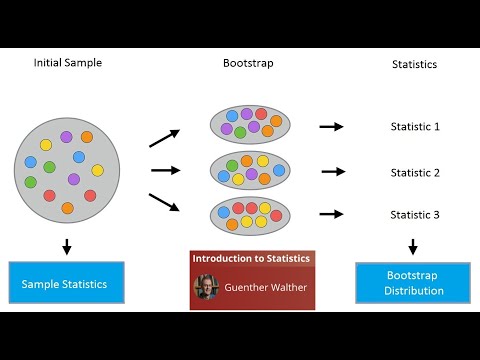

Exploring Monte Carlo Method and Bootstrap in Statistical Inference

Machine Learning TV explores the Monte Carlo method and the bootstrap in statistical inference, showcasing their power in estimating parameters and constructing confidence intervals through simulation.
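A percentile bootstrap confidence interval is one simple way to see the idea: resample the data with replacement many times, recompute the statistic each time, and read off the quantiles. The function name, default settings, and sample data below are assumptions for illustration.

```python
import random
import statistics

def bootstrap_ci(data, stat=statistics.mean, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `stat` of `data`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    estimates = sorted(
        stat(rng.choices(data, k=len(data)))  # resample with replacement
        for _ in range(n_resamples)
    )
    lo = estimates[int((alpha / 2) * n_resamples)]
    hi = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi

# Hypothetical sample.
data = [2.1, 2.5, 1.9, 3.2, 2.8, 2.4, 2.0, 3.0, 2.6, 2.3]
lo, hi = bootstrap_ci(data)
print(lo, statistics.mean(data), hi)
```

The percentile method is the most basic bootstrap interval; other variants exist and may behave better for skewed statistics or small samples.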

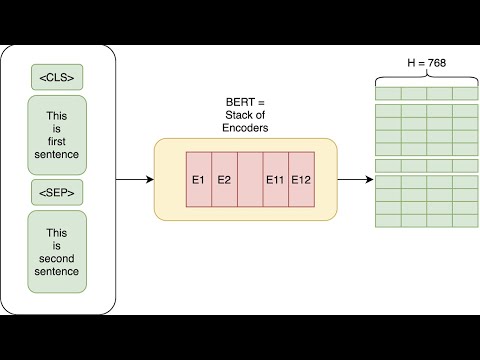

Mastering BERT: The BERT Algorithm, RoBERTa, and SageMaker Processing

Discover how Machine Learning TV introduces the BERT algorithm, which transforms raw text into BERT embeddings. See how it contrasts with BlazingText, and learn about RoBERTa's enhanced performance and scaling up with Amazon SageMaker Processing. Unlock the power of BERT embeddings for NLP tasks efficiently.

Mastering Kalman Filters: Best Estimation for Self-Driving Cars

Machine Learning TV explores the Kalman filter, highlighting bias and consistency in state estimation. They reveal the filter as the best linear unbiased estimator, crucial for accurate and reliable estimates in self-driving car systems.

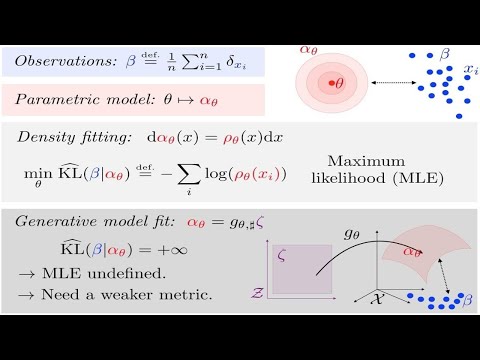

Mastering Model Estimation: MLE, MAP, and Bayesian Insights

Machine Learning TV explores Maximum Likelihood Estimation (MLE) and Maximum A Posteriori (MAP) methods for model estimation, showcasing their applications in linear regression and introducing the concept of Kullback-Leibler (KL) divergence. Learn how regularized models fit into the Bayesian framework for efficient parameter estimation.
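The link between regularization and the Bayesian framework shows up already in one-dimensional linear regression through the origin. Assuming Gaussian noise, MLE gives the least-squares slope; adding a zero-mean Gaussian prior on the slope gives a MAP estimate with the same form as ridge regression. In the sketch below, `lam` stands for the ratio of noise variance to prior variance; the names and data are assumptions for illustration.

```python
def fit_slope(xs, ys, lam=0.0):
    """Slope of y = w * x under Gaussian noise.

    lam = 0 gives the MLE (ordinary least squares).
    lam > 0 gives the MAP estimate under a zero-mean Gaussian prior on w,
    which matches the ridge regression solution.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Hypothetical data lying exactly on y = 2x.
xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
w_mle = fit_slope(xs, ys)
w_map = fit_slope(xs, ys, lam=1.0)
print(w_mle, w_map)
```

The prior pulls the MAP estimate toward zero, and the pull weakens as more data increases `sxx` relative to `lam`.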

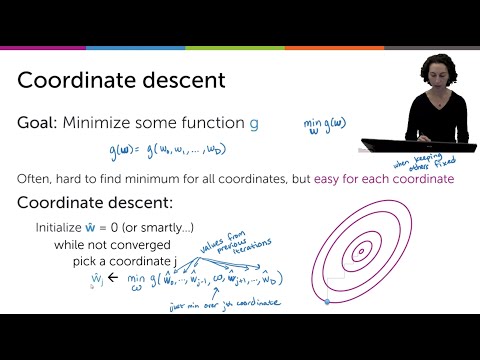

Mastering Optimization: The Efficiency of Coordinate Descent

Discover the power of coordinate descent as an alternative optimization method to gradient descent. Learn how this efficient algorithm simplifies the optimization process by focusing on one dimension at a time, eliminating the need for a step size parameter. Coordinate descent excels in solving complex optimization problems, making it a valuable tool for various applications, including lasso regression.
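A minimal sketch of coordinate descent for lasso regression follows. It minimizes 0.5 * ||y - Xw||^2 + lam * ||w||_1 by solving exactly for one weight at a time with a soft-thresholding update, so no step size appears. The function names, fixed iteration count, and toy data are assumptions; the sketch also assumes no column of `X` is all zeros.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, clamping to zero inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso_cd(X, y, lam, n_iters=100):
    """Coordinate descent for 0.5 * ||y - Xw||^2 + lam * ||w||_1."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iters):
        for j in range(d):
            rho = 0.0  # correlation of feature j with the residual excluding j
            z = 0.0    # squared norm of feature j
            for i in range(n):
                pred_without_j = sum(X[i][k] * w[k] for k in range(d) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
                z += X[i][j] ** 2
            # Exact minimizer along coordinate j.
            w[j] = soft_threshold(rho, lam) / z
    return w

# Hypothetical data: feature 0 matters, feature 1 barely does.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y = [3.0, 0.1, 3.0, 0.1]
w = lasso_cd(X, y, lam=1.0)
print(w)
```

The weakly relevant feature is driven exactly to zero, which is the sparsity that makes lasso useful for feature selection.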

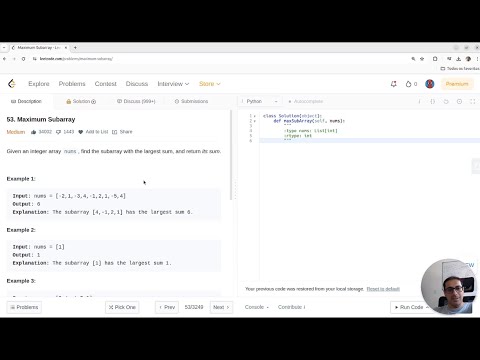

Mastering the Maximum Subarray: Efficient Algorithms for Data Scientists

Join Machine Learning TV as they tackle the Maximum Subarray Problem, optimizing algorithms for data scientists. Explore efficient expansion strategies and clever tweaks to improve performance and conquer LeetCode challenges with precision and innovation.
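One well-known linear-time solution to the Maximum Subarray Problem is Kadane's algorithm, sketched below. It may or may not be the exact approach taken in the video; it is shown here as a standard reference point.

```python
def max_subarray(nums):
    """Largest sum of any non-empty contiguous subarray (Kadane's algorithm)."""
    best = current = nums[0]
    for x in nums[1:]:
        # Either extend the running subarray or start fresh at x.
        current = max(x, current + x)
        best = max(best, current)
    return best

print(max_subarray([-2, 1, -3, 4, -1, 2, 1, -5, 4]))  # 6, from [4, -1, 2, 1]
print(max_subarray([-3, -1, -2]))                      # -1, the single best element
```

The loop touches each element once and keeps only two running values, so it runs in O(n) time and O(1) extra space.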

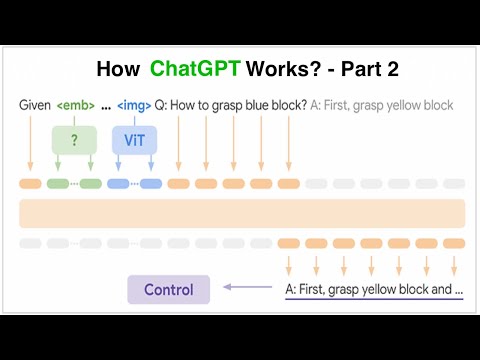

Unleashing the Power of Language Models: Predicting Words and Aligning with Human Preferences

Discover how LLMs predict the next word after training on web data, with practical applications like sentiment analysis and question answering. Explore the power of general-purpose language models and the challenges of aligning model outputs with human preferences using reinforcement learning.

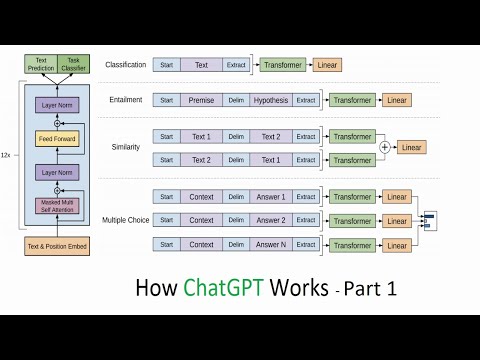

Unveiling the Power of Large Language Models with Princeton NLP Experts

Princeton NLP experts Alexander and Amit explore building large language models like ChatGPT from scratch, discussing tokenization, word embeddings, and the role of the Transformer architecture in natural language processing. Dive into the world of NLP with this insightful discussion!