Exploring Monte Carlo Method and Bootstrap in Statistical Inference

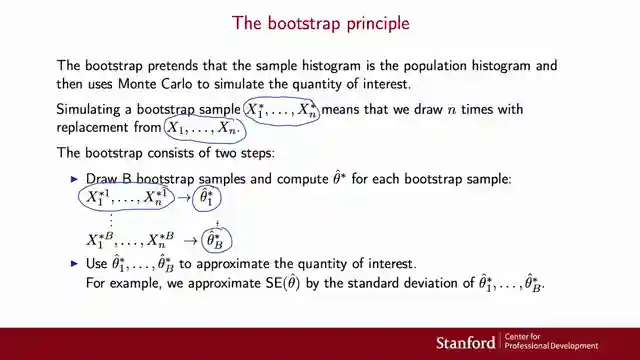

Machine Learning TV takes us on a ride through the world of statistical inference, showing how cheap computation has changed the way data are analyzed. The video digs into the Monte Carlo method and the bootstrap, two ideas that have reshaped how we estimate parameters and construct confidence intervals. Like a seasoned race car driver rerouting around a closed road, the presenters show how simulation steps in when traditional closed-form formulas hit a roadblock, using the example of estimating the average height of the entire US population from a sample of just 100 people.
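To make the "simulation instead of a formula" idea concrete, here is a minimal Monte Carlo sketch in Python with NumPy. It assumes a hypothetical population model (heights normally distributed with mean 170 cm and standard deviation 10 cm; these numbers are illustrative, not from the video) and a sample size of 100. The sample mean already has a textbook standard error, so the sketch targets the sample median, whose standard error has no simple exact formula. The function name `monte_carlo_se_of_median` is hypothetical.

```python
import numpy as np

# Hypothetical population model (an assumption for illustration only):
# heights in cm ~ Normal(mean=170, sd=10), samples of size 100.
POP_MEAN, POP_SD, N = 170.0, 10.0, 100


def monte_carlo_se_of_median(n_sims: int = 10_000, seed: int = 0) -> float:
    """Approximate the standard error of the sample median for samples of size N
    by drawing many independent samples from the assumed population."""
    rng = np.random.default_rng(seed)
    # Each row is one simulated sample of N heights.
    samples = rng.normal(POP_MEAN, POP_SD, size=(n_sims, N))
    medians = np.median(samples, axis=1)
    # Law of large numbers: as n_sims grows, the spread of the simulated medians
    # approaches the true sampling standard deviation of the median.
    return float(medians.std(ddof=1))


se = monte_carlo_se_of_median()
print(f"Monte Carlo SE of the median: {se:.3f}")
# Large-sample approximation for a normal population: sqrt(pi/2) * sd / sqrt(n).
print(f"Asymptotic approximation:     {np.sqrt(np.pi / 2) * POP_SD / np.sqrt(N):.3f}")
```

The two printed numbers should be close; the simulation needs only the ability to draw samples, which is what makes it useful when no formula is available.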

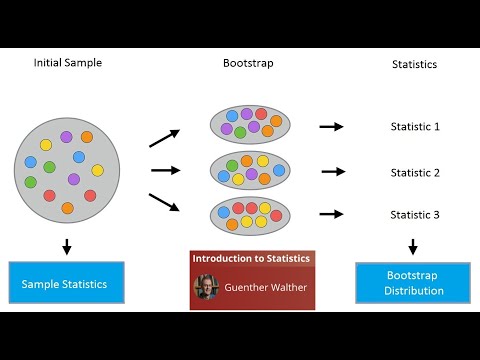

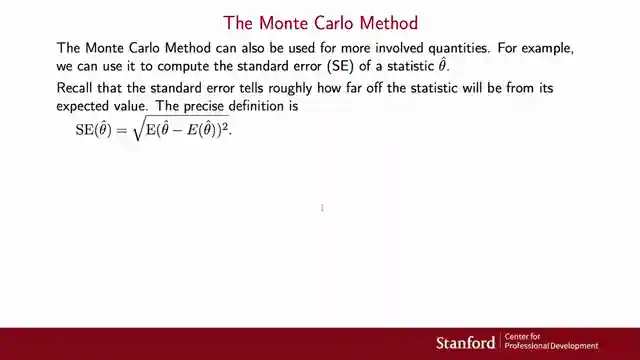

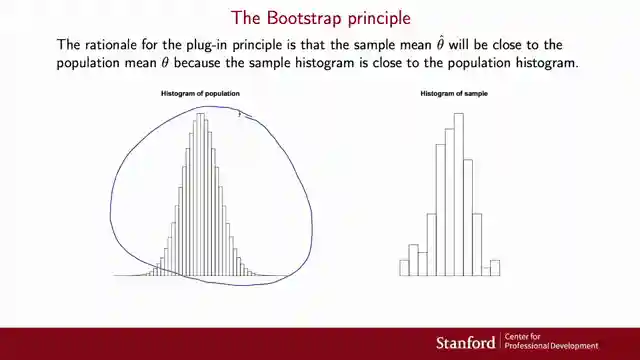

The team at Machine Learning TV then works through the mechanics of statistical estimation, presenting the Monte Carlo method as a dependable workhorse: by the law of large numbers, the average over many simulated draws converges to the quantity being estimated, so the approximation improves as more simulations are run. They shift gears to introduce the bootstrap, a technique built for the common situation in which only one sample is available and drawing fresh samples from the population is not an option. The bootstrap combines the plug-in principle, which treats the empirical distribution of the observed sample as a stand-in for the unknown population, with Monte Carlo simulation, which repeatedly resamples from that empirical distribution. The result is a practical way to estimate quantities such as the standard error of a statistic.
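A minimal sketch of the non-parametric bootstrap standard error, assuming NumPy and a single hypothetical observed sample of 100 heights (simulated here only so the example runs). The plug-in step is "resample the observed data with replacement"; the Monte Carlo step is "repeat many times and take the standard deviation of the replicates". The function name `bootstrap_se` and its defaults are illustrative choices, not an API from the video.

```python
import numpy as np


def bootstrap_se(data, statistic, n_boot=2000, seed=0):
    """Non-parametric bootstrap estimate of the standard error of `statistic`."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = data.size
    # Plug-in principle: the empirical distribution of the observed sample stands
    # in for the unknown population, so each resample draws n indices with replacement.
    idx = rng.integers(0, n, size=(n_boot, n))
    replicates = np.array([statistic(data[row]) for row in idx])
    # Monte Carlo step: the spread of the bootstrap replicates approximates the SE.
    return float(replicates.std(ddof=1))


# Hypothetical single observed sample (simulated only so the sketch is runnable).
sample = np.random.default_rng(42).normal(170.0, 10.0, size=100)

se_mean = bootstrap_se(sample, np.mean)
print(f"bootstrap SE of the mean:   {se_mean:.3f}")
print(f"formula s / sqrt(n):        {sample.std(ddof=1) / np.sqrt(sample.size):.3f}")
print(f"bootstrap SE of the median: {bootstrap_se(sample, np.median):.3f}")
```

For the mean, the bootstrap answer can be checked against the usual formula; for the median, the same few lines work even though no simple formula is at hand.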

With the finesse of a driver on challenging terrain, Machine Learning TV then covers the non-parametric and parametric bootstrap: the non-parametric version resamples the observed data with replacement, while the parametric version fits a model to the data and simulates new samples from the fitted model. They push further into bootstrap confidence intervals, namely the bootstrap percentile interval and the bootstrap pivotal interval, which do not rely on the sampling distribution of the estimator being normal. Finally, they show how bootstrapping extends to regression models, where resampling the residuals (the estimated error terms) and refitting the model yields standard errors for the coefficients.
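The techniques in the paragraph above can be sketched together in Python with NumPy. Everything here is an illustrative assumption: the function names are hypothetical, the data are simulated (a skewed exponential sample, so the median's sampling distribution is not normal, and a straight-line regression with normal noise), and the parametric bootstrap assumes an exponential model. The pivotal interval follows the common form (2θ̂ − q_hi, 2θ̂ − q_lo), where q_lo and q_hi are quantiles of the bootstrap replicates.

```python
import numpy as np

rng = np.random.default_rng(7)


def nonparametric_replicates(data, statistic, n_boot=4000):
    """Non-parametric bootstrap: resample the observed data with replacement."""
    n = data.size
    return np.array([statistic(data[rng.integers(0, n, n)]) for _ in range(n_boot)])


def parametric_exponential_replicates(data, statistic, n_boot=4000):
    """Parametric bootstrap under an ASSUMED exponential model: fit the scale by
    maximum likelihood (the sample mean), then simulate from the fitted model."""
    scale_hat = data.mean()
    sims = rng.exponential(scale_hat, size=(n_boot, data.size))
    return np.array([statistic(row) for row in sims])


def percentile_interval(replicates, alpha=0.05):
    """Percentile interval: read the endpoints straight off the bootstrap quantiles."""
    lo, hi = np.quantile(replicates, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


def pivotal_interval(theta_hat, replicates, alpha=0.05):
    """Pivotal interval: treat (theta* - theta_hat) as a proxy for (theta_hat - theta),
    which reflects the bootstrap quantiles around the point estimate."""
    lo, hi = np.quantile(replicates, [alpha / 2, 1 - alpha / 2])
    return float(2 * theta_hat - hi), float(2 * theta_hat - lo)


def residual_bootstrap_slope_se(x, y, n_boot=2000):
    """Bootstrap SE of the OLS slope by resampling residuals with the design fixed."""
    X = np.column_stack([np.ones_like(x), x])
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    fitted = X @ beta_hat
    resid = y - fitted
    slopes = np.empty(n_boot)
    for b in range(n_boot):
        # New responses = fitted values + residuals drawn with replacement.
        y_star = fitted + rng.choice(resid, size=resid.size, replace=True)
        beta_star, *_ = np.linalg.lstsq(X, y_star, rcond=None)
        slopes[b] = beta_star[1]
    return float(slopes.std(ddof=1))


# Hypothetical skewed sample.
data = rng.exponential(scale=2.0, size=60)
median_hat = float(np.median(data))
reps = nonparametric_replicates(data, np.median)
print("percentile interval:", percentile_interval(reps))
print("pivotal interval:   ", pivotal_interval(median_hat, reps))
print("parametric percentile:", percentile_interval(
    parametric_exponential_replicates(data, np.median)))

# Hypothetical regression data: y = 1 + 0.5 x + noise.
x = np.linspace(0, 10, 50)
y = 1.0 + 0.5 * x + rng.normal(0, 1.0, size=x.size)
print("bootstrap slope SE:", residual_bootstrap_slope_se(x, y))
```

The percentile and pivotal intervals have the same width but can sit in different places when the bootstrap distribution is skewed. Resampling residuals, as sketched here, presumes the errors are roughly independent with constant variance; resampling whole (x, y) pairs is a common alternative when that is doubtful.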

Image copyright YouTube

Watch Bootstrap and Monte Carlo Methods on YouTube

Viewer Reactions for Bootstrap and Monte Carlo Methods

Bootstrap and Monte Carlo simulations helped with understanding statistics

Question about whether X1 and Xn have to be different in a sample

Appreciation for the clean and clear explanation in the video

Studying the same Coursera course that the video comes from

Related Articles

Revolutionizing Neural Networks: The Power of Transformer Models

Discover how the Transformer model revolutionizes neural networks, outperforming RNNs in sequence data processing. Say goodbye to slow computations and vanishing gradients with the Transformer's attention-based approach and multi-head layers. Embrace the future of efficient translation and sequence tasks!

Decoding Time Series Patterns: Trends, Seasonality, and Predictions

Machine Learning TV explores time series patterns like trend, seasonality, and autocorrelation, offering insights into predicting and analyzing data with real-world examples.

Mastering Language Model Evaluation: Perplexity and Text Coherence

Learn how to evaluate language models using perplexity, a key metric measuring text complexity. Split data for training, validation, and testing to assess model performance. Lower perplexity scores indicate more natural language generation. Explore bi-gram and trigram models for enhanced text coherence.

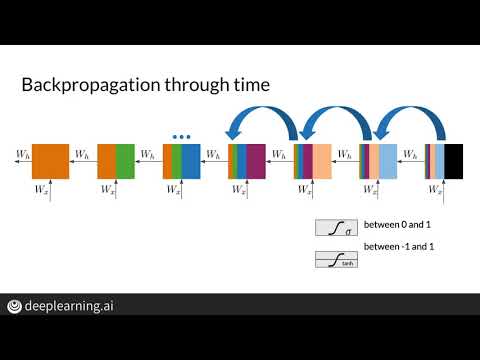

Mastering Vanishing Gradients: LSTM Solutions for RNN Efficiency

Explore how Machine Learning TV tackles the vanishing gradient problem in RNNs using LSTMs. Discover solutions like weight initialization and gradient clipping to optimize training efficiency.