Unleashing the Power of Language Models: Predicting Words and Aligning with Human Preferences

- Authors

- Published on

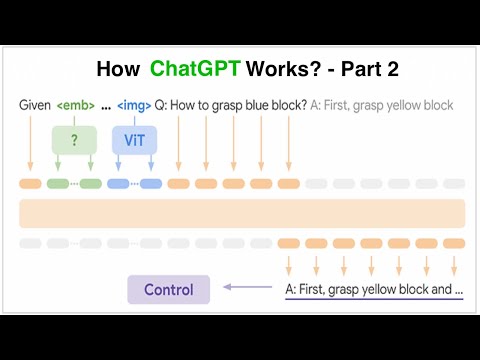

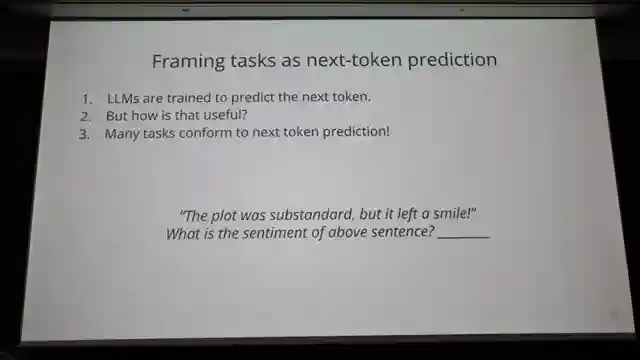

In this episode of Machine Learning TV, Alex explores language models, particularly large language models (LLMs), and how they learn to predict the next word from vast amounts of text scraped from the web. Scaling up the data used for next-word prediction turns out to be useful for practical applications such as sentiment analysis, summarization, and question answering. Because many tasks can be reframed as next-word prediction problems, the same model can be adapted for a wide range of uses, from medical question answering to improving search engines.
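To make next-word prediction concrete, here is a minimal sketch that is not taken from the video: a toy bigram counter stands in for an LLM, and a prompt template shows how sentiment analysis can be phrased as a next-word task. The corpus, the function names (`train_bigram`, `predict_next`, `as_sentiment_prompt`), and the prompt wording are all illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def as_sentiment_prompt(review):
    """Hypothetical template: the model's next word is read as the label."""
    return f"Review: {review}\nSentiment (positive or negative):"


# Toy corpus (an assumption for illustration only).
corpus = [
    "the movie was great",
    "the movie was great fun",
    "the food was bad",
]
counts = train_bigram(corpus)
next_word = predict_next(counts, "was")
prompt = as_sentiment_prompt("The plot was great")
```

A real LLM replaces the bigram table with a neural network trained on far more text, but the interface is the same: given the text so far, score candidate next words. The template hints at why reframing works, since whatever word the model predicts after the prompt can be read as the task's answer.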

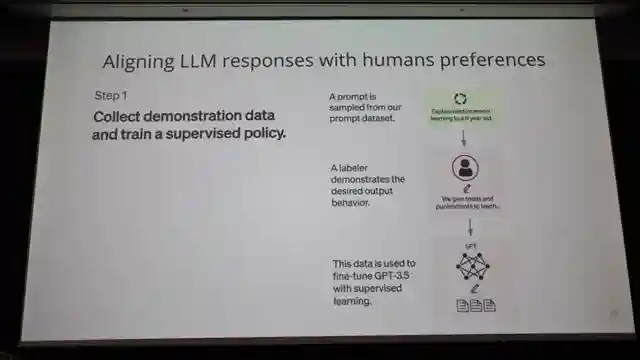

The episode highlights how a general-purpose language model can be leveraged for task-specific applications. It also addresses a challenge that arises when interacting with these models: a prompt often has multiple plausible completions, and the most likely one is not necessarily the one a user wants. To tackle this, the episode introduces reinforcement learning from human feedback (RLHF), a method that aligns model outputs with human preferences by using human demonstrations and reward models to guide the learning process.
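One common way to train a reward model from human preferences is a pairwise loss over a preferred and a rejected completion. The summary does not say which loss the video uses, so the sketch below is an assumption: it shows the widely used logistic (Bradley-Terry style) formulation, with hypothetical names and made-up reward values.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    completion above the rejected one, and large when it does not.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Made-up scores for two completions of the same prompt (illustrative only).
agrees_with_human = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_human = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
undecided = preference_loss(reward_chosen=0.0, reward_rejected=0.0)
```

Minimizing this loss over many human comparisons pushes the reward model to rank completions the way annotators do. In RLHF, the trained reward model then supplies the reward signal used to fine-tune the language model.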

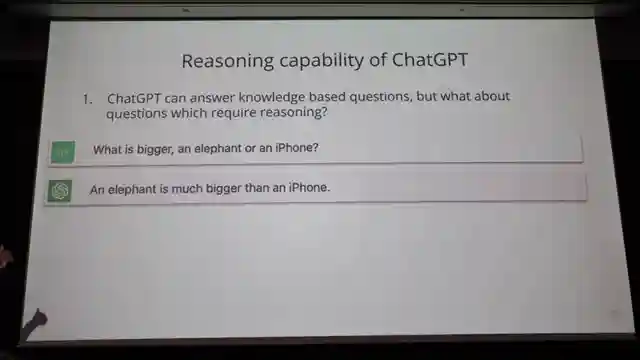

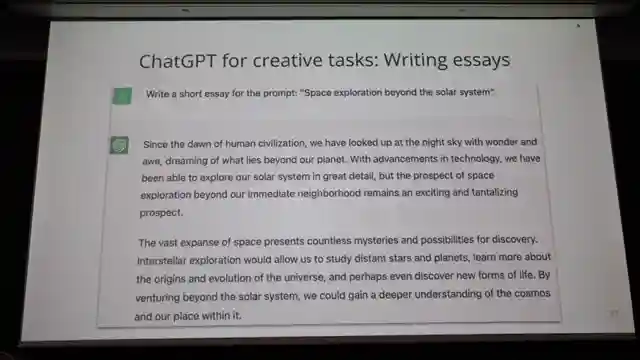

The episode also covers the difficulty of filtering good data from offensive content, a critical step in training language models effectively. The discussion touches on a recent Anthropic paper that offers insights into offensive content detection and its challenges. Despite these hurdles, the episode showcases ChatGPT as a knowledge retriever, a reasoning tool, and a creative assistant for tasks like essay writing and summarization.

Image copyright YouTube

Watch Understanding ChatGPT and LLMs from Scratch - Part 2 on YouTube

Related Articles

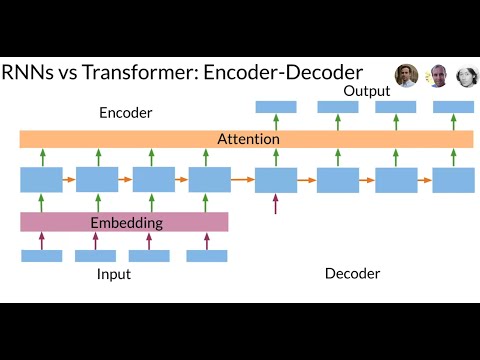

Revolutionizing Neural Networks: The Power of Transformer Models

Discover how the Transformer model revolutionizes neural networks, outperforming RNNs in sequence data processing. Say goodbye to slow computations and vanishing gradients with the Transformer's attention-based approach and multi-head layers. Embrace the future of efficient translation and sequence tasks!

Decoding Time Series Patterns: Trends, Seasonality, and Predictions

Machine Learning TV explores time series patterns like trend, seasonality, and autocorrelation, offering insights into predicting and analyzing data with real-world examples.

Mastering Language Model Evaluation: Perplexity and Text Coherence

Learn how to evaluate language models using perplexity, a key metric of how well a model predicts text. Split data into training, validation, and test sets to assess model performance. Lower perplexity indicates that the model finds the text less surprising. Explore bigram and trigram models for enhanced text coherence.

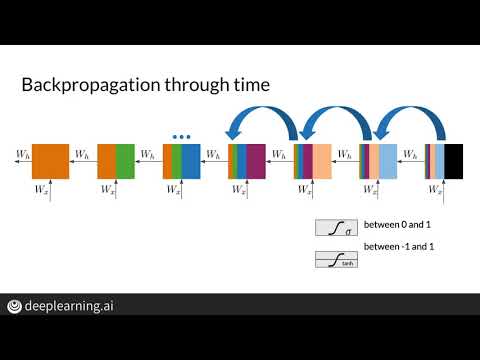

Mastering Vanishing Gradients: LSTM Solutions for RNN Efficiency

Explore how Machine Learning TV tackles the vanishing gradient problem in RNNs using LSTMs. Discover solutions like weight initialization and gradient clipping to optimize training efficiency.