Decoding Time Series Patterns: Trends, Seasonality, and Predictions


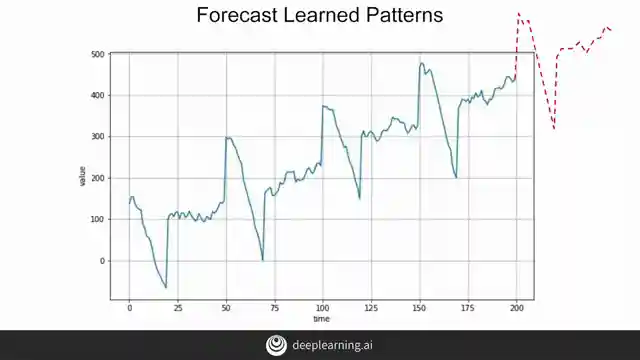

In this episode, Machine Learning TV walks through the common patterns in time series, from predictable trends to unpredictable white noise. The first is trend: a series that moves in a clear overall direction, as transistor counts have under Moore's Law. The team then turns to seasonality, where a pattern repeats at regular intervals. Their example is the number of active users on a website for software developers, which dips at regular intervals; the dips fall on weekends, when fewer people are at work.
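
To make trend and seasonality concrete, here is a minimal NumPy sketch that builds a synthetic daily series from a linear trend, a weekly cycle with lower weekend values, and white noise. The function names (`trend`, `weekly_pattern`, `white_noise`), the slope, and the activity levels are illustrative assumptions, not taken from the video. A linear trend is used for simplicity; Moore's Law describes exponential growth, which looks linear only on a log scale.

```python
import numpy as np

# Hypothetical helpers for illustration; not taken from the video.

def trend(time, slope=0.0):
    """Linear trend: the series drifts steadily in one direction."""
    return slope * time


def weekly_pattern(time, weekday_level=100.0, weekend_level=40.0):
    """Repeating 7-day cycle with lower values on weekends.

    Assumes day 0 is a Monday, so days 5 and 6 of each week are the weekend.
    """
    day_of_week = time % 7
    return np.where(day_of_week >= 5, weekend_level, weekday_level)


def white_noise(time, noise_level=1.0, seed=None):
    """Independent Gaussian noise: nothing here can be predicted."""
    rng = np.random.default_rng(seed)
    return rng.normal(0.0, noise_level, size=len(time))


time = np.arange(4 * 365)  # four years of daily observations
series = (
    trend(time, slope=0.05)
    + weekly_pattern(time)
    + white_noise(time, noise_level=5.0, seed=42)
)

is_weekend = time % 7 >= 5
print("weekday mean:", series[~is_weekend].mean())
print("weekend mean:", series[is_weekend].mean())
```

With these assumed settings the weekend mean comes out roughly 60 below the weekday mean, the same kind of weekend dip described above, while the trend lifts both over time.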

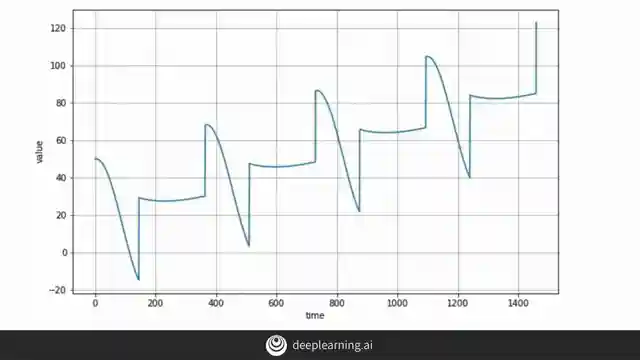

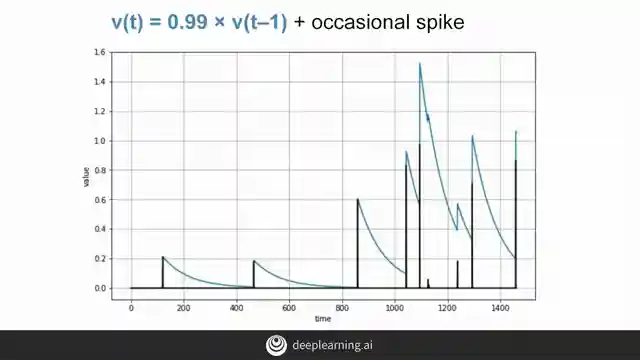

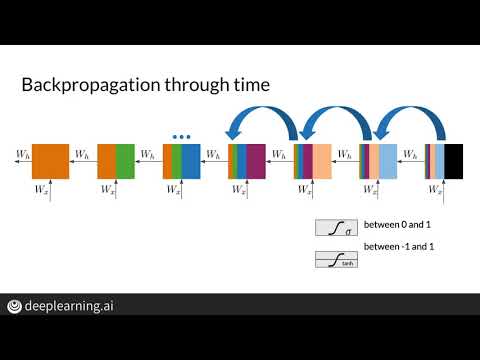

Machine Learning TV then covers autocorrelation: a time series that correlates with a delayed (lagged) copy of itself. Such a series has memory, meaning each step depends on earlier steps, while unpredictable spikes, called innovations, interrupt it from time to time. The team also shows a series with multiple autocorrelations, whose pattern combines an exponential decay with small bounces, the kind of structure that appears in real-life time series. The takeaway is that machine learning models are designed to spot such patterns and use them for prediction, even though the noise in a series cannot itself be predicted.
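
The idea of memory plus innovations can be sketched with a first-order autoregressive (AR(1)) process, a standard textbook model swapped in here for illustration; the video's own examples may be generated differently. Each value keeps a fraction `phi` of the previous value and adds a fresh random shock (the innovation). For this process the autocorrelation at lag k is `phi**k`, so it decays exponentially with the lag, while white noise shows roughly zero autocorrelation at every lag. The names `ar1_series` and `sample_autocorrelation` and the value `phi=0.8` are assumptions.

```python
import numpy as np

def ar1_series(n, phi=0.8, seed=None):
    """Hypothetical AR(1) generator: x[t] = phi * x[t-1] + innovation[t].

    `phi` controls how much memory the series has; the innovations are
    independent Gaussian shocks that the past cannot predict.
    """
    rng = np.random.default_rng(seed)
    innovations = rng.normal(0.0, 1.0, size=n)
    x = np.zeros(n)
    x[0] = innovations[0]
    for t in range(1, n):
        x[t] = phi * x[t - 1] + innovations[t]
    return x


def sample_autocorrelation(x, max_lag):
    """Correlation of the series with itself delayed by 1..max_lag steps."""
    centered = x - x.mean()
    denom = np.dot(centered, centered)
    return np.array(
        [np.dot(centered[:-lag], centered[lag:]) / denom for lag in range(1, max_lag + 1)]
    )


series = ar1_series(20_000, phi=0.8, seed=0)
acf = sample_autocorrelation(series, max_lag=5)
print("AR(1) sample ACF:", np.round(acf, 2))
print("theory (0.8**lag):", np.round(0.8 ** np.arange(1, 6), 2))

# White noise has no memory, so its autocorrelation stays near zero.
noise = np.random.default_rng(1).normal(size=20_000)
print("white-noise ACF:", np.round(sample_autocorrelation(noise, max_lag=5), 2))
```

The AR(1) values should track the theoretical `0.8**lag` closely. Combining several lags (for example, a positive coefficient at one lag and a smaller negative one at another) is one way to produce the decay-plus-bounces shape mentioned above.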

The discussion then turns to non-stationary time series, whose behavior changes over time, for example when a sudden event shifts the trend. This complicates training. For a stationary series, more data generally helps, but for a non-stationary one, older data can describe behavior the series no longer follows, so training on a limited, recent window of data may work better. Series with abrupt changes are especially hard to forecast accurately. The episode ends by setting up the next ones, which work with synthesized sequences and real-world data.
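
Why more data is not always better can be shown with a deliberately crude forecaster. The sketch below builds a hypothetical series whose level jumps abruptly from 10 to 50 at step 700, then predicts the next value as the mean of either the whole history or only the most recent 100 points. The jump, the window size, and the `mean_forecast` helper are illustrative assumptions; real models would tune the window, and the point here is only that data from the old regime pulls the full-history forecast toward a level the series has left behind.

```python
import numpy as np

rng = np.random.default_rng(7)
time = np.arange(1000)

# Hypothetical non-stationary series: the level jumps from 10 to 50 at step 700.
level = np.where(time < 700, 10.0, 50.0)
series = level + rng.normal(0.0, 2.0, size=time.size)


def mean_forecast(history, window=None):
    """Predict the next value as the mean of the last `window` points (all points if None)."""
    recent = history if window is None else history[-window:]
    return recent.mean()


full_history = mean_forecast(series)               # mixes both regimes
recent_window = mean_forecast(series, window=100)  # only the current regime

print(f"full-history forecast:  {full_history:.1f}")
print(f"recent-window forecast: {recent_window:.1f}")
```

Expect roughly 22 for the full-history forecast (700 points near 10 and 300 near 50) and roughly 50 for the recent window, which is where the series actually sits after the jump.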

Image copyright YouTube


Watch "Common Patterns in Time Series: Seasonality, Trend and Autocorrelation" on YouTube

Viewer Reactions for Common Patterns in Time Series: Seasonality, Trend and Autocorrelation

Viewers left positive feedback on the video's content and on the creator's skills.

Related Articles

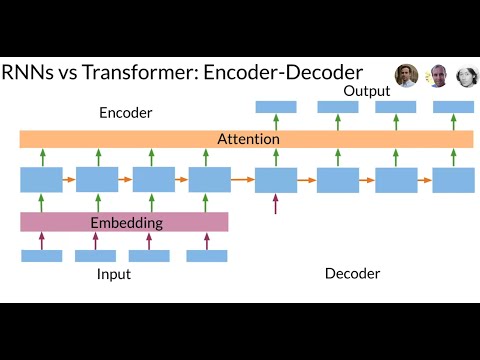

Revolutionizing Neural Networks: The Power of Transformer Models

Discover how the Transformer model revolutionizes neural networks, outperforming RNNs in sequence data processing. Say goodbye to slow computations and vanishing gradients with the Transformer's attention-based approach and multi-head layers. Embrace the future of efficient translation and sequence tasks!

Decoding Time Series Patterns: Trends, Seasonality, and Predictions

Machine Learning TV explores time series patterns like trend, seasonality, and autocorrelation, offering insights into predicting and analyzing data with real-world examples.

Mastering Language Model Evaluation: Perplexity and Text Coherence

Learn how to evaluate language models using perplexity, a key metric measuring text complexity. Split data for training, validation, and testing to assess model performance. Lower perplexity scores indicate more natural language generation. Explore bi-gram and trigram models for enhanced text coherence.

Mastering Vanishing Gradients: LSTM Solutions for RNN Efficiency

Explore how Machine Learning TV tackles the vanishing gradient problem in RNNs using LSTMs. Discover solutions like weight initialization and gradient clipping to optimize training efficiency.