Decoding Shapley Value: Fair Value Distribution in Cooperative Games

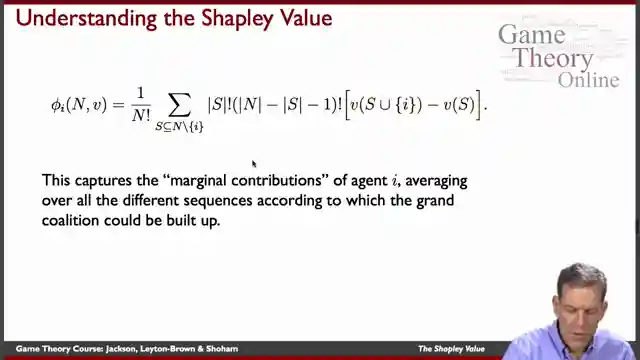

In this episode, Machine Learning TV introduces the Shapley value, a method from cooperative game theory for dividing a group's total payoff among its members according to their individual contributions. The video asks what makes such a division fair and answers with a set of axioms, each of which formalizes one intuition about what a member is owed given their effect on the group's result.

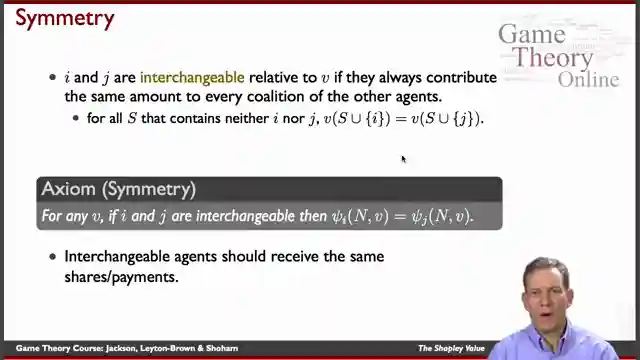

The Shapley value does not simply pay players in proportion to their input. It assigns each player their marginal contribution to the group, averaged over every order in which the group could have been assembled. This handles cases that simpler rules get wrong, such as a member without whom the group produces nothing, or members who contribute different amounts. Two of the axioms appear at this stage: symmetry, under which interchangeable players (those who add the same amount to every coalition) receive equal shares, and the dummy player axiom, under which a player who adds nothing to any coalition receives nothing.
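For reference, the formula and the two axioms named above can be written out as follows. The notation is an assumption, not taken from the video: $N$ is the set of players, $v(S)$ is the payoff that coalition $S$ can secure on its own, and $\varphi_i(N, v)$ is the share of player $i$. The dummy player axiom is given in its common "adds nothing, gets nothing" form.

```latex
% Shapley value: marginal contribution of i, averaged over all join orders
\varphi_i(N, v) = \frac{1}{|N|!} \sum_{S \subseteq N \setminus \{i\}}
  |S|!\,\bigl(|N| - |S| - 1\bigr)!\,\bigl[v(S \cup \{i\}) - v(S)\bigr]

% Symmetry: interchangeable players receive equal shares
v(S \cup \{i\}) = v(S \cup \{j\}) \ \text{for all } S \subseteq N \setminus \{i, j\}
  \;\Longrightarrow\; \varphi_i(N, v) = \varphi_j(N, v)

% Dummy player: a player who adds nothing to any coalition receives nothing
v(S \cup \{i\}) = v(S) \ \text{for all } S \subseteq N \setminus \{i\}
  \;\Longrightarrow\; \varphi_i(N, v) = 0
```

The weight $|S|!\,(|N| - |S| - 1)!\,/\,|N|!$ is the fraction of join orders in which exactly the members of $S$ arrive before player $i$.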

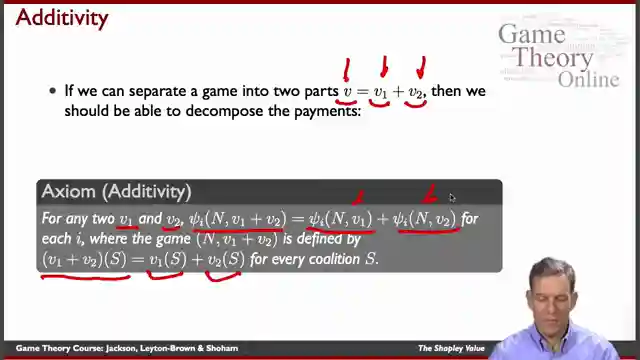

The third axiom, additivity, ties different games together: if the same players take part in two separate games, the share a player receives in the combined game equals the sum of their shares in the two individual games. Shapley's theorem then states that exactly one way of dividing the grand coalition's full payoff satisfies symmetry, dummy player, and additivity, and that division is the Shapley value. The video closes with worked examples and calculations that show how the formula is applied in practice.
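The kind of calculation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the video's own example: the players `A` to `D`, the two games `v1` and `v2`, and the helper `shapley_values` are all hypothetical. In `v1`, player `A` is essential and player `D` is a dummy; `v2` pays one unit per member. The final check confirms additivity on this pair of games.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial


def shapley_values(players, v):
    """Exact Shapley values by enumerating every coalition S not containing i.

    `v` maps a set of players to that coalition's payoff (hypothetical games below).
    """
    n = len(players)
    values = {}
    for i in players:
        others = [p for p in players if p != i]
        total = Fraction(0)
        for size in range(n):
            # Fraction of join orders in which exactly `size` players precede i.
            weight = Fraction(factorial(size) * factorial(n - size - 1), factorial(n))
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (v(s | {i}) - v(s))  # marginal contribution of i
        values[i] = total
    return values


players = ["A", "B", "C", "D"]


def v1(s):
    # Assumed game: worth 1 only if essential player A teams up with B or C.
    # D never changes the payoff, so D is a dummy player.
    return 1 if "A" in s and ("B" in s or "C" in s) else 0


def v2(s):
    # Assumed game: every member adds exactly one unit.
    return len(s)


def v_sum(s):
    # The combined game used to check additivity.
    return v1(s) + v2(s)


phi1 = shapley_values(players, v1)    # A: 2/3, B: 1/6, C: 1/6, D: 0
phi2 = shapley_values(players, v2)    # every player: 1
phi_sum = shapley_values(players, v_sum)

# Additivity: shares in the combined game equal the sum of the separate shares.
assert all(phi_sum[p] == phi1[p] + phi2[p] for p in players)

for p in players:
    print(p, phi1[p], phi2[p], phi_sum[p])
```

Exact enumeration visits every coalition, so its cost grows exponentially with the number of players; it is only practical for small games like this one. `Fraction` is used so the equality checks are exact rather than subject to floating-point rounding.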

Image copyright YouTube

Watch Understanding The Shapley Value on YouTube

Viewer Reactions for Understanding The Shapley Value

- Positive feedback on the explanation of Shapley values for machine learning
- Question about the additivity axiom and its application to Shapley values for multiple predictions
- Request for book recommendations
- Comment on the clarity of the explanation
- Mention of limitations of Shapley values for complex non-linear patterns

Related Articles

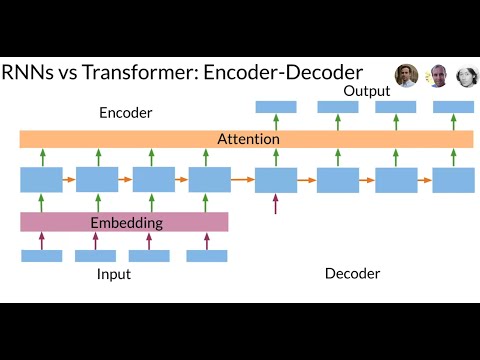

Revolutionizing Neural Networks: The Power of Transformer Models

Discover how the Transformer model revolutionizes neural networks, outperforming RNNs in sequence data processing. Say goodbye to slow computations and vanishing gradients with the Transformer's attention-based approach and multi-head layers. Embrace the future of efficient translation and sequence tasks!

Decoding Time Series Patterns: Trends, Seasonality, and Predictions

Machine Learning TV explores time series patterns like trend, seasonality, and autocorrelation, offering insights into predicting and analyzing data with real-world examples.

Mastering Language Model Evaluation: Perplexity and Text Coherence

Learn how to evaluate language models using perplexity, a key metric measuring text complexity. Split data for training, validation, and testing to assess model performance. Lower perplexity scores indicate more natural language generation. Explore bi-gram and trigram models for enhanced text coherence.

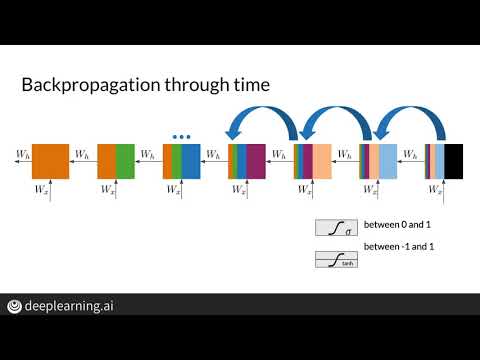

Mastering Vanishing Gradients: LSTM Solutions for RNN Efficiency

Explore how Machine Learning TV tackles the vanishing gradient problem in RNNs using LSTMs. Discover solutions like weight initialization and gradient clipping to optimize training efficiency.