Unveiling the Magic: Inside Large Language Models

Today on AemonAlgiz, we look inside large language models to see how they compute their output tokens. The tour starts with two foundational operations, softmax and layer normalization, and then moves on to tokenization. Softmax maps a vector of raw scores to values between 0 and 1 that sum to 1, so the scores can be read as probabilities. Layer normalization rescales intermediate states so they do not fluctuate wildly from one layer to the next, which keeps the rest of the computation stable.
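To make the two operations concrete, here is a minimal sketch in plain Python. It is an illustration rather than the code any particular model uses: the input numbers are made up, and the learned gain and bias that real layer normalization applies are left out.

```python
import math

def softmax(scores):
    """Map raw scores to values in the range 0 to 1 that sum to 1."""
    # Subtracting the max avoids overflow in exp(); the result is unchanged.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(values, eps=1e-5):
    """Rescale a vector to zero mean and roughly unit variance.

    Assumption: the learned gain and bias parameters are omitted here.
    """
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + eps) for v in values]

# Made-up example inputs.
probs = softmax([2.0, 1.0, 0.1])
normed = layer_norm([10.0, -4.0, 250.0])
print(probs)   # largest score gets the largest probability
print(normed)  # same ordering as the input, but centred on zero
```

Note that softmax preserves the ordering of the scores, and that layer normalization pulls an outlier such as 250.0 back into a small range without changing which value is largest.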

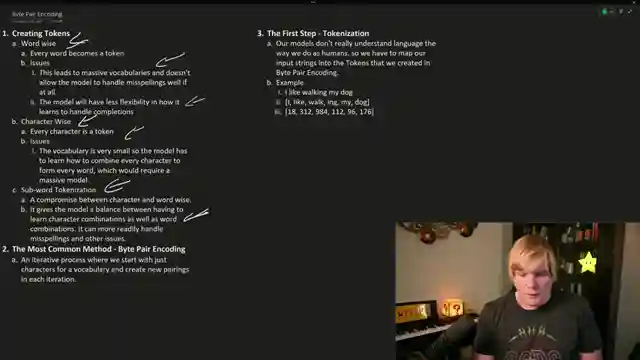

Tokenization is the next step: the input text is split into tokens, the units the model actually operates on. Subword tokenization sits between word-level and character-level approaches. Common words stay whole, while rare or misspelled words break into smaller known pieces, so the model can still handle them. Each token is then mapped to a vector in a high-dimensional embedding space, where tokens with related meanings sit close together. Positional encoding adds information about where each token appears in the sequence, since the embeddings alone carry no notion of order.
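The subword idea can be sketched with a greedy longest-match splitter. This is only one simple way to do it, and everything here is hypothetical: the `TOY_VOCAB` set is invented for the example, whereas real tokenizers learn their vocabulary from data and may use a different algorithm such as byte-pair encoding.

```python
def tokenize(word, vocab):
    """Greedy longest-match split of a word into known subword pieces."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining piece first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # Nothing matched, not even one character: emit an unknown marker.
            tokens.append("<unk>")
            start += 1
    return tokens

# Hypothetical toy vocabulary: two long pieces plus some single characters.
TOY_VOCAB = {"token", "ization", "iz", "t", "o", "k", "e", "n", "i", "z", "a"}

print(tokenize("tokenization", TOY_VOCAB))  # ['token', 'ization']
print(tokenize("tokenizaton", TOY_VOCAB))   # ['token', 'iz', 'a', 't', 'o', 'n']
```

The misspelled word still tokenizes. It just falls back to smaller pieces, which is the flexibility that a purely word-level vocabulary lacks.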

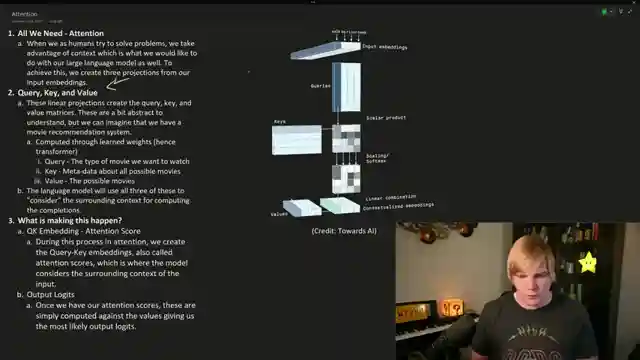

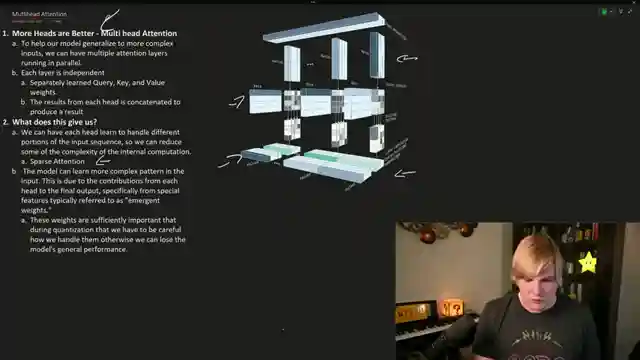
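As an illustration of positional encoding, the sketch below uses the sinusoidal scheme from the original Transformer design. That choice is an assumption, since the video may describe a different scheme, and the embedding values are invented for the example.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding: even indices use sin, odd indices use cos."""
    encoding = []
    for i in range(dim):
        # Each (even, odd) pair of dimensions shares one wavelength.
        angle = position / (10000 ** ((i - i % 2) / dim))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

# Hypothetical 4-dimensional embeddings; tokens 0 and 2 are the same token.
embeddings = [
    [0.1, 0.2, 0.3, 0.4],
    [0.5, 0.1, 0.0, 0.2],
    [0.1, 0.2, 0.3, 0.4],
]

# Add the encoding for each position to the embedding at that position.
with_position = [
    [e + p for e, p in zip(vec, positional_encoding(pos, len(vec)))]
    for pos, vec in enumerate(embeddings)
]

print(with_position[0])
print(with_position[2])  # same token as position 0, different vector
```

Because every position gets a different encoding, the same token at two positions ends up with two different input vectors, which is how the model can tell them apart.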

Attention comes next. Much as a human reader looks for context clues, the model uses attention to decide which earlier tokens matter most when choosing the next one. Query, key, and value matrices do this work: queries are compared against keys to produce weights, and those weights combine the values. Multi-head attention runs several attention heads in parallel, which lets the model pick up on different relationships in a complex input at once. The video also covers emergent weights, which have an outsized effect on performance and need careful handling to preserve the model's quality. Finally, these computations come together to produce the output tokens.
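The query, key, and value computation can be sketched as scaled dot-product attention in plain Python. This is a simplified illustration under stated assumptions: the learned projection matrices that produce queries, keys, and values in a real model are omitted, the multi-head version simply slices each vector instead of using per-head projections, and all numbers are invented.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Score this query against every key, then normalise the scores.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs

def multi_head_attention(queries, keys, values, num_heads):
    """Slice each vector into num_heads parts, attend per part, concatenate.

    Assumption: the vector length is divisible by num_heads.
    """
    head_dim = len(queries[0]) // num_heads
    merged = [[] for _ in queries]
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        head_out = attention(
            [q[lo:hi] for q in queries],
            [k[lo:hi] for k in keys],
            [v[lo:hi] for v in values],
        )
        for row, out in zip(merged, head_out):
            row.extend(out)
    return merged

# One query that resembles the first key more than the second.
q = [[1.0, 0.0, 0.0, 1.0]]
k = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
v = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]

single = attention(q, k, v)
multi = multi_head_attention(q, k, v, num_heads=2)
print(single)  # pulled towards the first value vector
print(multi)
```

Because the query matches the first key more closely, the output sits nearer to the first value vector than to the second. Each head in the multi-head version sees only its own slice, so different heads can weight the same tokens differently.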

Image copyright YouTube

Watch "Large Language Models Process Explained. What Makes Them Tick and How They Work Under the Hood!" on YouTube

Viewer Reactions for Large Language Models Process Explained. What Makes Them Tick and How They Work Under the Hood!

Viewers praise the high-quality and in-depth content on LLMs

Appreciation for the detailed explanations provided in the videos

Requests for the creator to continue making videos

Comments on the video and audio synchronization

Mention of missing the creator's uploads and hoping for more content

Questions about the sudden decrease in discussion about QLoRA

Request for the addition of graphics in the videos

Inquiry about the format of embeddings and potential confusion

Concern for the well-being of the creator and checking in on their status

Request for a Discord channel for viewers to discuss LLMs
