Mastering Large Language Models: Embeddings, Training Tips, and LoRA Impact

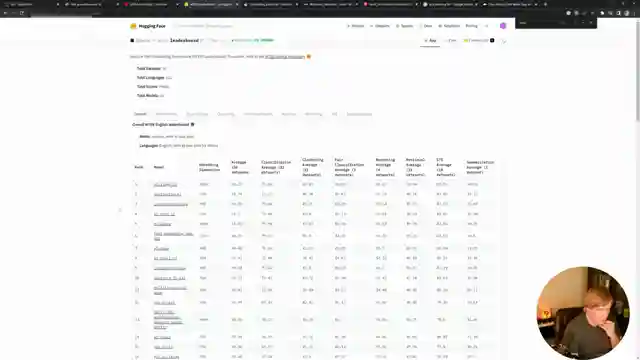

Today on AemonAlgiz, the team hosted a live stream on working with large language models. One of the audience's main questions was how to handle very large raw text files, such as a corpus of cancer literature or PubMed. The host recommended using embeddings for semantic search and pointed viewers to the Massive Text Embedding Benchmark (MTEB) for choosing an embedding model. They also cautioned that training very large models like GPT-3 is resource-intensive even on top-tier hardware, and suggested A100 instances from Lambda Labs as a more cost-effective option.
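To make the semantic search idea concrete, here is a minimal sketch that ranks passages by cosine similarity to a query. It is not the host's code. The `toy_embed` function is a hypothetical stand-in that only counts words, so it matches vocabulary rather than meaning; in practice you would replace it with a real embedding model, for example one chosen from the MTEB leaderboard.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, passages, top_k=2):
    """Return the top_k (score, passage) pairs, best match first."""
    query_vec = toy_embed(query)
    scored = [(cosine(query_vec, toy_embed(p)), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Made-up passages, for illustration only.
passages = [
    "Chemotherapy dosing schedules for lung cancer patients.",
    "Embedding models map text to vectors for semantic search.",
    "Renting cloud GPUs can be cheaper than buying hardware.",
]
results = search("vectors for semantic search", passages, top_k=1)
print(results[0][1])
```

The ranking logic stays the same when a real model is swapped in: embed the query, embed the passages (usually once, ahead of time), and sort by similarity.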

The team also highlighted a Lambda Labs initiative offering a 30-day training program for LLMs. They then walked through how embeddings make large volumes of raw text usable for tasks like semantic search and summarization, recommending vector databases such as Pinecone and Weaviate. A demonstration using Vault AI showed document embedding and search in practice.
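Before raw text goes into a vector database, it is usually split into smaller pieces so that each piece can be embedded and retrieved on its own. The summary does not say how the stream handled this step, so the following is only a sketch under assumptions: the `chunk_words` helper, its word-based windows, and the default sizes are all hypothetical choices, not values from the stream.

```python
def chunk_words(text, chunk_size=200, overlap=50):
    """Split text into overlapping windows of at most chunk_size words.

    The overlap repeats the tail of one chunk at the head of the next, so a
    sentence that straddles a boundary still appears whole in some chunk.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


# Tiny example: ten placeholder words, windows of four, overlap of one.
sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

Each resulting chunk would then be embedded and stored alongside its source reference in a store such as Pinecone or Weaviate.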

The discussion then turned to LoRA's impact on models, explaining how low-rank matrices are used to approximate the effect of training. The team explored the prospect of stacking LLMs to blend different authors' styles. When the audience asked about using embeddings for chatbots and documentation, the team recommended using LLMs to reformat data into Q&A pairs and proposed InstructorXL for semantic search over code documentation. Overall, the stream was a wide-ranging tour of large language models, full of practical insights.
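The low-rank idea behind LoRA can be sketched in a few lines. This follows the standard LoRA formulation rather than anything shown on the stream: the pretrained weight `W` stays frozen, and training only updates two small matrices `B` (d x r) and `A` (r x k), giving an effective weight of `W + (alpha / r) * B @ A`. The dimensions, seed, and helper names below are illustrative assumptions.

```python
import random


def matmul(x, y):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A, where r is the rank (number of rows in A)."""
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


d, k, r, alpha = 8, 8, 2, 4  # illustrative sizes only
rng = random.Random(0)
w = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen pretrained weight
a = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # trainable, small random init
b = [[0.0] * r for _ in range(d)]                               # trainable, zero init

# With B at zero, the adapter changes nothing before training starts.
w_start = lora_effective_weight(w, b, a, alpha)

# Pretend one training step changed a single entry of B.
b[0][0] = 1.0
w_after = lora_effective_weight(w, b, a, alpha)

full_params = d * k        # parameters updated by full fine-tuning
lora_params = r * (d + k)  # parameters updated by LoRA
print(full_params, lora_params)
```

The savings grow with layer size: for a 4096 x 4096 weight at rank 8, full fine-tuning updates 16,777,216 values while LoRA updates 65,536.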

Image copyright YouTube

Watch LoRA Q&A with Oobabooga! Embeddings or Finetuning? on YouTube

Viewer Reactions for LoRA Q&A with Oobabooga! Embeddings or Finetuning?

- Appreciation for the informative content and practical examples
- Requests to repeat each question during Q&A sessions
- Suggestions for extensions and tools to improve the user experience
- Questions about the best model for fine-tuning and about hosting trained models in production
- Interest in Weaviate and vector databases
- Discussion of the future of LLMs and specialization
- Use of SentenceTransformers and encoder-decoder models for a domain-specific corpus
- Creation of custom databases for fast cosine-similarity search
- A project training an LLM on a specific domain to generate structured output
- A question about fine-tuning embedding models with their own data
