Mastering LoRAs: Fine-Tuning Language Models with Precision

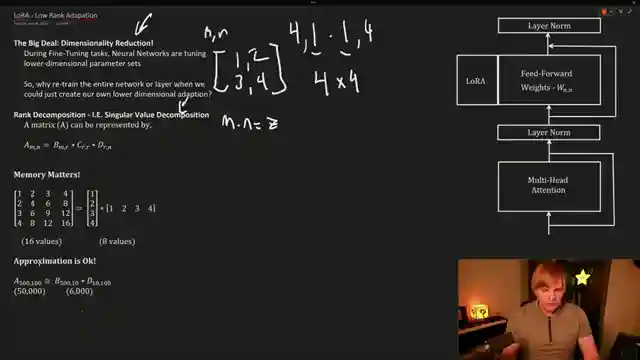

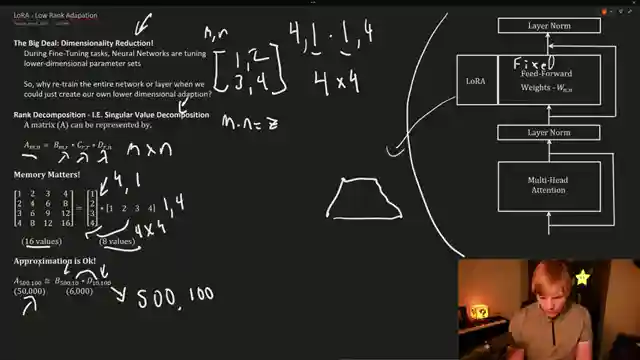

In this episode, AemonAlgiz dives headfirst into the world of LoRAs (low-rank adapters), a popular technique for fine-tuning large language models. LoRAs work through dimensionality reduction: instead of updating a model's full weight matrices, training learns a pair of much smaller, lower-rank matrices whose product stands in for the full-size update. Because far fewer values are trained, memory use drops sharply and fine-tuning becomes practical on more modest hardware.
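To make the memory argument concrete, here is a minimal sketch in plain Python. The helper names (`matmul`, `full_param_count`, `lora_param_count`) and the matrix sizes are illustrative assumptions, not figures from the video. It shows that two small factors multiply out to a full-size update, and compares how many values each approach has to train.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def full_param_count(d_out, d_in):
    """Trainable values when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_param_count(d_out, d_in, rank):
    """Trainable values in the two factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Tiny rank-1 example: a 2x1 factor times a 1x3 factor gives a full 2x3 update.
B = [[1.0], [2.0]]
A = [[3.0, 4.0, 5.0]]
delta_W = matmul(B, A)

# Illustrative sizes only (assumed, not taken from the video).
d_out, d_in, rank = 4096, 4096, 8
full = full_param_count(d_out, d_in)
low_rank = lora_param_count(d_out, d_in, rank)

print(delta_W)
print(full, low_rank, full // low_rank)
```

With these assumed sizes, the low-rank pair holds 256 times fewer trainable values than the full matrix, which is where the memory savings come from.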

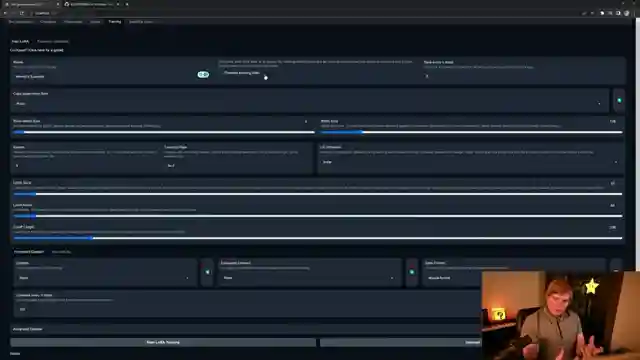

But wait, there's more! The video shows how to train a LoRA in the oobabooga text generation web UI, a playground for language model aficionados. It walks through general hyperparameters such as batch size, number of epochs, and the learning rate scheduler, all crucial cogs in the training machine. Then come the LoRA-specific settings: the LoRA rank (the size of the low-rank matrices), the LoRA alpha (how strongly the learned update is scaled), and the cutoff length (the maximum number of tokens kept per training example), each of which shapes the model's performance.
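The sketch below shows one way these settings can interact. It is not the web UI's code: the `TrainSettings` class, its field names, and the example values are hypothetical, the step count ignores gradient accumulation, and the cosine schedule is just one common scheduler choice. The `alpha / rank` scaling follows the usual LoRA convention.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainSettings:
    """Hypothetical container; field names are illustrative, not the web UI's."""
    batch_size: int
    epochs: int
    learning_rate: float
    lora_rank: int
    lora_alpha: int
    cutoff_len: int


def total_steps(num_examples, s):
    """Optimizer steps: batches per epoch (rounded up) times the number of epochs."""
    return math.ceil(num_examples / s.batch_size) * s.epochs


def cosine_lr(step, steps, base_lr):
    """Cosine schedule: starts at base_lr and decays smoothly to zero."""
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * step / steps))


def lora_scale(s):
    """Factor applied to the low-rank update: alpha divided by rank."""
    return s.lora_alpha / s.lora_rank


def apply_cutoff(token_ids, s):
    """Keep at most cutoff_len tokens of one training example."""
    return token_ids[: s.cutoff_len]


# Example values are assumptions chosen for readability.
settings = TrainSettings(batch_size=4, epochs=3, learning_rate=3e-4,
                         lora_rank=8, lora_alpha=16, cutoff_len=256)
steps = total_steps(10, settings)
schedule = [cosine_lr(i, steps, settings.learning_rate) for i in range(steps + 1)]
scale = lora_scale(settings)
trimmed = apply_cutoff(list(range(300)), settings)
print(steps, scale, len(trimmed))
```

Note that doubling the rank while leaving alpha unchanged halves the scale factor, which is one reason the two values are usually tuned together.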

As the video unfolds, viewers are treated to a crash course in formatting training data, with an emphasis on setting up separate training and validation sets carefully. Advanced options like dropout also come into play as a guard against overfitting. So, if you're ready to take your language model finesse to the next level, configure your settings and hit that "start LoRA training" button.
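As a rough illustration of these ideas, the sketch below formats records into prompts, holds out a validation set, and applies dropout to a list of values. Everything here is an assumption for demonstration purposes: the `instruction` and `output` keys, the prompt template, and the function names are hypothetical and may differ from the format used in the video.

```python
import random


def format_example(record):
    """Turn one record into a single training string (assumed template)."""
    return (f"### Instruction:\n{record['instruction']}\n\n"
            f"### Response:\n{record['output']}")


def train_val_split(records, val_fraction=0.1, seed=0):
    """Shuffle reproducibly, then hold out a fraction of records for validation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction)) if shuffled else 0
    return shuffled[n_val:], shuffled[:n_val]


def dropout(values, p, rng):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [v / keep if rng.random() >= p else 0.0 for v in values]


# Made-up records, used only to exercise the functions.
records = [{"instruction": f"Question {i}", "output": f"Answer {i}"}
           for i in range(10)]
train, val = train_val_split(records, val_fraction=0.2, seed=0)
prompt = format_example(records[0])
dropped = dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=random.Random(0))
print(len(train), len(val))
print(prompt)
```

The validation records are never trained on, so a validation loss that rises while training loss keeps falling is a typical sign of overfitting. Dropout counters this by randomly zeroing values during training so the model cannot lean too heavily on any single one.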

Image copyright YouTube

Watch "Fine-Tune Language Models with LoRA! OobaBooga Walkthrough and Explanation." on YouTube

Viewer Reactions for "Fine-Tune Language Models with LoRA! OobaBooga Walkthrough and Explanation."

- Request for more videos on building datasets
- Interest in using AI for safety and morality projects
- Request for explanations on using AI for query, key, and value matrices in attention
- Interest in training and fine-tuning models to clone characters or oneself
- Request for expanding on the parameters section
- Request for a video on setting parameters in Python code instead of the GUI
- Inquiry about using existing LoRAs
- Question about the adequacy of a small training set for generating coherent replies
- Inquiry about downloading and using fine-tuned models in other projects
- Request for a guide on fine-tuning models with domain knowledge and personality
