Mastering Word and Sentence Embeddings: Enhancing Language Model Comprehension

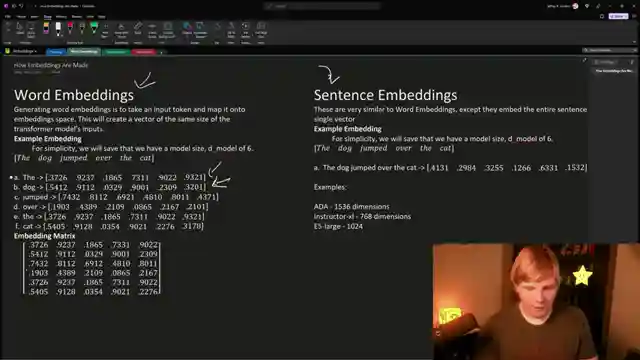

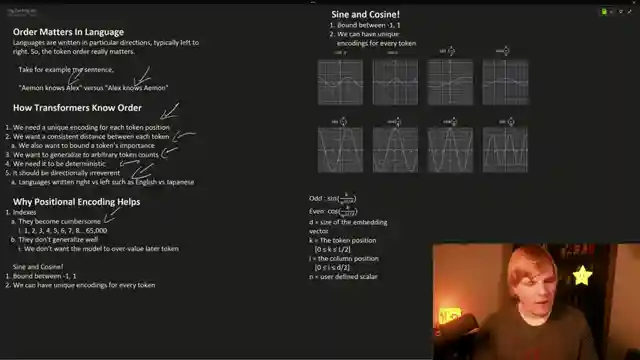

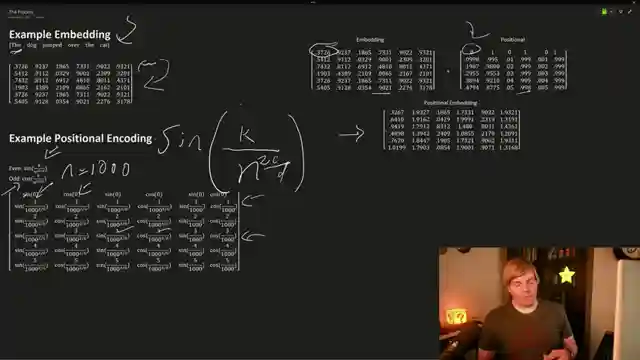

In this episode, AemonAlgiz explains word and sentence embeddings and how large language models use them to interpret natural language. They also cover positional encoding, which lets a language model account for where each token sits in the text. Positional encodings built from sine and cosine functions are unique for every position and bounded between -1 and 1, so the network can recover the order of the words. The video walks through computing positional encodings for word embeddings and explains why they matter when training the network to assign importance to specific tokens.
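The sine/cosine scheme can be sketched in a few lines. This is a minimal illustration that assumes the standard formulation from the original Transformer paper, where for position `pos` and dimension pair `i`, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The video's own code and constants may differ, and the function names and toy embedding values below are hypothetical. Pure Python is used so the sketch runs without extra dependencies.

```python
import math


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Return a seq_len x d_model table of sinusoidal positional encodings.

    Assumes the standard Transformer formulation: even dimensions use sine,
    odd dimensions use cosine, and the wavelength grows geometrically
    (base 10000) from one dimension pair to the next.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even so sine/cosine come in pairs")
    table = []
    for pos in range(seq_len):
        row = []
        for two_i in range(0, d_model, 2):
            # The sine (even) and cosine (odd) slot of a pair share one angle.
            angle = pos / (10000 ** (two_i / d_model))
            row.append(math.sin(angle))
            row.append(math.cos(angle))
        table.append(row)
    return table


def add_positional_encoding(embeddings: list[list[float]]) -> list[list[float]]:
    """Add each position's encoding element-wise to that token's embedding."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [
        [e + p for e, p in zip(token_vec, pos_vec)]
        for token_vec, pos_vec in zip(embeddings, pe)
    ]


# Hypothetical 4-dimensional embedding of the same token at three positions.
token_embeddings = [[0.5, -0.2, 0.1, 0.3]] * 3
encoded = add_positional_encoding(token_embeddings)

pe = positional_encoding(seq_len=3, d_model=4)
for pos, row in enumerate(pe):
    print(pos, [round(v, 3) for v in row])
```

Every row of the table is distinct and every value stays within [-1, 1], which are the two properties described above. Three identical token vectors become distinguishable once their positional encodings are added.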

AemonAlgiz then demonstrates word and sentence embeddings in practice with Instructor-XL and BERT, using them to measure how similar different pieces of text are. They distinguish word embeddings, which are computed per word, from sentence embeddings, which represent an entire chunk of text as a single vector for comparison. Because embeddings place words and text segments in a shared vector space, comparing their positions in that space lets a language model capture the relationships between them. The episode ends with an overview of how word and sentence embeddings help train language models to understand which tokens matter and how their position in the input affects their relevance.
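The idea of comparing embeddings spatially can be illustrated with toy vectors. This is a minimal sketch, not how BERT or Instructor-XL compute their vectors: the three-dimensional word vectors below are made up, and the sentence embedding is built by mean-pooling word vectors, which is one common approach but an assumption here (Instructor-XL, for instance, produces a sentence embedding from an instruction paired with the text). Cosine similarity is used as the comparison measure.

```python
import math

# Hypothetical 3-dimensional word vectors, for illustration only.
# Real BERT or Instructor-XL embeddings are learned and have hundreds
# to thousands of dimensions.
WORD_VECTORS = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "sat": [0.1, 0.9, 0.0],
    "ran": [0.2, 0.8, 0.1],
    "stock": [0.0, 0.1, 0.9],
    "fell": [0.1, 0.6, 0.7],
}


def sentence_embedding(sentence: str) -> list[float]:
    """Mean-pool the word vectors of a whitespace-tokenized sentence (assumed pooling)."""
    vectors = [WORD_VECTORS[word] for word in sentence.lower().split()]
    dim = len(vectors[0])
    return [sum(vec[d] for vec in vectors) / len(vectors) for d in range(dim)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Word-level comparison: one vector per word.
word_sim_close = cosine_similarity(WORD_VECTORS["cat"], WORD_VECTORS["dog"])
word_sim_far = cosine_similarity(WORD_VECTORS["cat"], WORD_VECTORS["stock"])

# Sentence-level comparison: one vector per chunk of text.
s_cat = sentence_embedding("cat sat")
s_dog = sentence_embedding("dog ran")
s_stock = sentence_embedding("stock fell")
sent_sim_close = cosine_similarity(s_cat, s_dog)
sent_sim_far = cosine_similarity(s_cat, s_stock)

print(f"cat vs dog:              {word_sim_close:.3f}")
print(f"cat vs stock:            {word_sim_far:.3f}")
print(f"'cat sat' vs 'dog ran':  {sent_sim_close:.3f}")
print(f"'cat sat' vs 'stock fell': {sent_sim_far:.3f}")
```

With these toy values, the pairs that point in similar directions ("cat"/"dog" and "cat sat"/"dog ran") score higher than the unrelated pairs, which is the kind of spatial comparison the video performs with real model embeddings.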

In short, the episode shows how word and sentence embeddings help large language models process natural language. By explaining how positional encoding works and demonstrating embeddings in practice, AemonAlgiz gives viewers a clearer picture of how language models represent and compare text. Upcoming episodes will cover more language model training techniques and fine-tuning strategies.

Image copyright YouTube

Watch "What Is Positional Encoding? How To Use Word and Sentence Embeddings with BERT and Instructor-XL!" on YouTube

Viewer Reactions for What Is Positional Encoding? How To Use Word and Sentence Embeddings with BERT and Instructor-XL!

- Clear, technical, and straightforward explanations
- Adding extra material on preparing and preprocessing datasets
- Detailed explanations and code examples
- Easy to follow and understand tutorials
- Question about sentence embeddings
- Appreciation for simplifying complex topics
- Inquiry about the multilingual aspect of Instructor-XL
- Feedback on small IDE font size
- Audio and video synchronization issue
- Suggestion for better visibility for visually impaired viewers

Related Articles

Mastering LoRAs: Fine-Tuning Language Models with Precision

Explore the power of LoRAs for training large language models in this guide by AemonAlgiz. Learn how to optimize memory usage and fine-tune models using the oobabooga text generation web UI. Master hyperparameters and formatting for top-notch performance.

Mastering Large Language Model Fine-Tuning with LoRAs

AemonAlgiz explores fine-tuning large language models with LoRAs, emphasizing model selection, dataset preparation, and training techniques for optimal results.

Mastering Large Language Models: Embeddings, Training Tips, and LoRA Impact

Explore large language models with AemonAlgiz in a live stream on embeddings for semantic search, training tips, and the impact of LoRA on models. Discover how to handle raw text files and use LLMs for chatbots and documentation.