Unveiling AI's Reasoning: GSM-Symbolic Dataset Challenges Pattern Matching

- Authors

- Published on

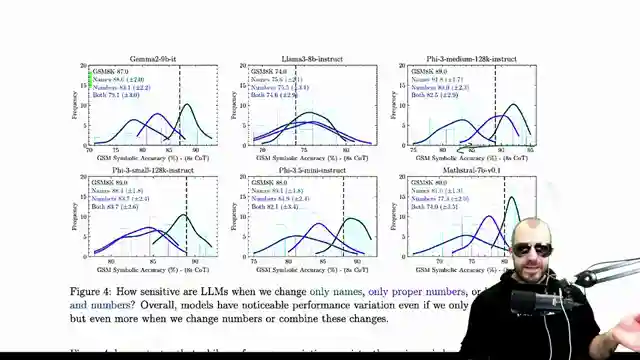

In this thrilling episode, Yannic Kilcher delves into mathematical reasoning in large language models, asking whether these AI behemoths truly reason or merely rely on pattern matching. The paper under discussion introduces the GSM-Symbolic dataset as a way to counter potential contamination of the GSM8K benchmark, that is, the risk that GSM8K questions have leaked into models' training data and inflate their scores. However, controversies arise over how the dataset is constructed and over the elusive definition of "reasoning" in these models, sparking a fiery debate among tech enthusiasts.

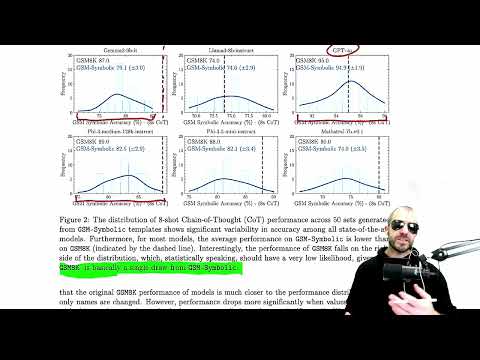

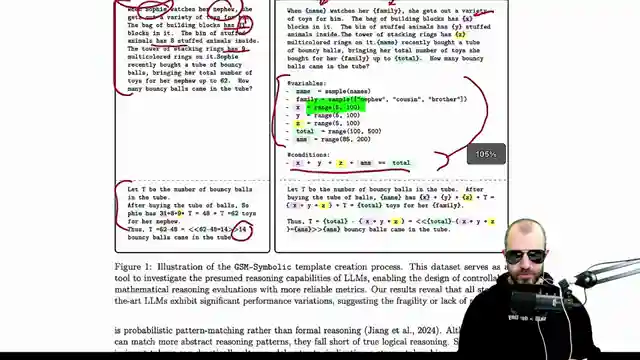

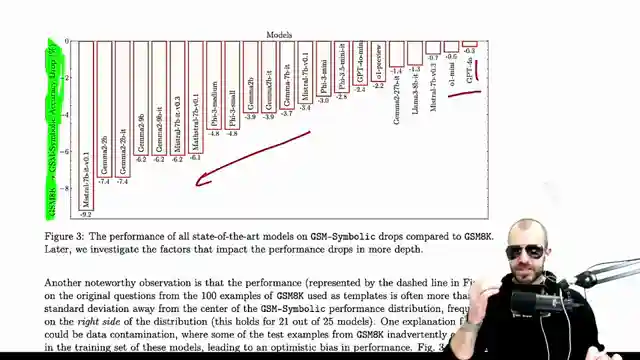

With the roaring engines of innovation, the team builds a synthetic dataset containing many variations of grade-school math questions, pushing the LLMs to their limits. Each original question becomes a template annotated with the valid values for its variables (such as names and numbers) and the conditions those values must satisfy, and sampling from these templates produces a torrent of new instances to test the LLMs' mettle. The results reveal considerable variation in performance across instantiations: larger models produce steadier results, while their smaller counterparts struggle to keep up, setting the stage for a riveting showdown of AI prowess.
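
A minimal sketch of how template instantiation of this kind might work. The `Template` class, its fields, the example apples question, and the rejection-sampling loop in `instantiate` are illustrative assumptions, not the paper's actual template format or code. Each variable draws from a domain of allowed values, and an assignment is kept only if every condition holds, so the computed answer is always valid.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence, Tuple


@dataclass
class Template:
    """A math word problem with named placeholders (hypothetical format)."""
    text: str                                           # question text with {placeholders}
    domains: Dict[str, Sequence[Any]]                   # allowed values for each placeholder
    conditions: List[Callable[[Dict[str, Any]], bool]]  # constraints on a full assignment
    answer: Callable[[Dict[str, Any]], int]             # ground-truth answer for an assignment


def instantiate(t: Template, rng: random.Random, max_tries: int = 1000) -> Tuple[str, int]:
    """Sample values until every condition holds (simple rejection sampling)."""
    for _ in range(max_tries):
        values = {name: rng.choice(domain) for name, domain in t.domains.items()}
        if all(cond(values) for cond in t.conditions):
            return t.text.format(**values), t.answer(values)
    raise ValueError("no valid assignment found within max_tries")


# Illustrative template: the condition guarantees a positive number of apples is left.
apples_template = Template(
    text=("{name} has {x} apples. {name} gives {y} apples to a friend. "
          "How many apples does {name} have left?"),
    domains={"name": ["Sophie", "Liam", "Ava"], "x": range(5, 50), "y": range(1, 50)},
    conditions=[lambda v: v["y"] < v["x"]],
    answer=lambda v: v["x"] - v["y"],
)

if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the generated set is reproducible
    for _ in range(3):
        question, ans = instantiate(apples_template, rng)
        print(question, "->", ans)
```

Rejection sampling keeps the sketch short; a real generator with tightly coupled variables might instead compute some values from others so that fewer samples are wasted.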

As the dust settles, a crucial revelation emerges: the models tend to score higher on the original GSM8K questions than on the generated variants, hinting that prior knowledge of those specific questions may be lurking within their digital minds. However, a closer inspection shows that the size of the performance drop differs across models, and that each model's baseline accuracy has to be taken into account when comparing those drops. The team boldly challenges the notion that the shift in data distribution alone explains the LLMs' struggles, proposing instead the daring hypothesis that illogical scenarios in template-generated data may be the true culprit behind the performance discrepancies, igniting a fiery debate in the realm of artificial intelligence.
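
To see why baseline accuracy matters when comparing drops, here is a small sketch that compares two hypothetical models. The accuracy figures are invented for illustration and are not results from the paper or the video; the function name `summarize` and its metrics are likewise assumptions. It reports the absolute drop, the ratio of error rates (error on the variants divided by error on the original questions), and how many standard deviations the original score sits above the variants' mean.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List


def summarize(original_acc: float, variant_accs: List[float]) -> Dict[str, float]:
    """Compare accuracy on the original questions with accuracy on generated variants.

    Accuracies are fractions in [0, 1]; variant_accs holds one score per generated set.
    """
    mu = mean(variant_accs)
    sd = pstdev(variant_accs)
    abs_drop = original_acc - mu  # drop as a fraction (multiply by 100 for points)
    # Ratio of error rates: > 1 means the model errs more often on the variants.
    # Undefined (nan) when the original error rate is zero.
    error_ratio = (1 - mu) / (1 - original_acc) if original_acc < 1 else math.nan
    # Standard deviations by which the original score sits above the variants' mean.
    # Undefined (nan) when the variant scores have no spread.
    z = abs_drop / sd if sd > 0 else math.nan
    return {"mean": mu, "std": sd, "abs_drop": abs_drop, "error_ratio": error_ratio, "z": z}


if __name__ == "__main__":
    # Made-up numbers for two hypothetical models, NOT results from the paper.
    strong = summarize(0.95, [0.92, 0.90, 0.91, 0.89, 0.93])
    weak = summarize(0.60, [0.52, 0.48, 0.55, 0.45, 0.50])
    for label, s in (("strong", strong), ("weak", weak)):
        print(label, {k: round(v, 3) for k, v in s.items()})
```

With these made-up numbers, the weaker model loses more points (10 versus 4), yet the stronger model's error rate grows more in relative terms (1.8x versus 1.25x), so which model "drops more" depends on the metric chosen.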

Image copyright YouTube

Watch GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models on YouTube

Viewer Reactions for GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models

Students struggling with abstract mathematical concepts when presented in different formats

Importance of testing reasoning abilities with unrealistic scenarios

Concerns about test set contamination ("poisoning") in recent models

Discussion on whether humans can reason effectively

Critique of a paper for presenting known results as new information

Debate on the reasoning abilities of LLMs and humans

Criticism of the conclusion that LLMs rely on memorization over reasoning

Importance of fine-tuning LLMs for improved reasoning

Question about the randomness factor in LLMs' inference

Comparison of reasoning abilities between well-trained LLMs and humans

Related Articles

Decoding Large Language Models: Anthropic's Transformer Circuit Exploration

Anthropic explores the biology of large language models through transformer circuits, using circuit tracing and transcoders for interpretability. Learn how these models make decisions and handle tasks like poetry without explicit programming.

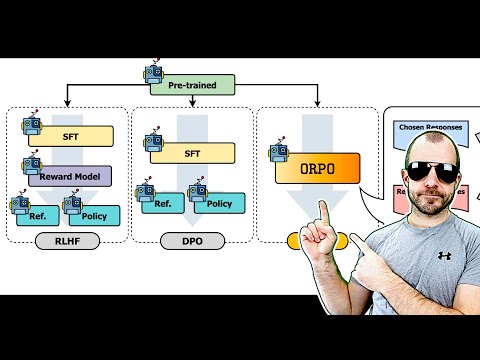

Revolutionizing AI Alignment: Orpo Method Unveiled

Explore Orpo, a groundbreaking AI optimization method aligning language models with instructions without a reference model. Streamlined and efficient, Orpo integrates supervised fine-tuning and odds ratio loss for improved model performance and user satisfaction. Experience the future of AI alignment today.

Tech Roundup: Meta's Chip, Google's Robots, Apple's AI Deal, OpenAI Leak, and More!

Meta unveils powerful new chip; Google DeepMind introduces low-cost robots; Apple signs $50M deal for AI training images; OpenAI researchers embroiled in leak scandal; Adobe trains AI on Mid Journey images; Canada invests $2.4B in AI; Google releases cutting-edge models; Hugging Face introduces iFix 2 Vision language model; Microsoft debuts Row one model; Apple pioneers Faret UI language model for mobile screens.

Unveiling OpenAI's GPT-4: Controversies, Departures, and Industry Shifts

Explore the latest developments with OpenAI's GPT-4 Omni model, its controversies, and the departure of key figures like Ilia Sver and Yan Le. Delve into the balance between AI innovation and commercialization in this insightful analysis by Yannic Kilcher.