Revolutionizing AI Alignment: ORPO Method Unveiled

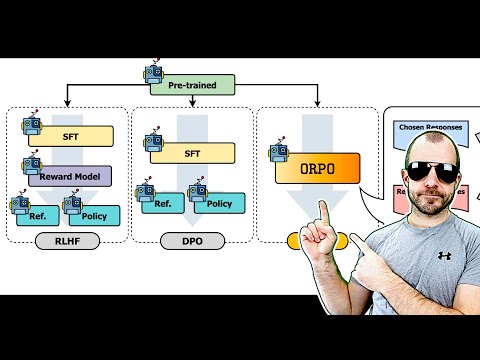

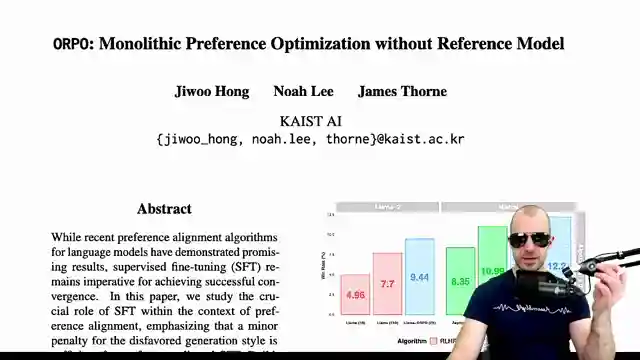

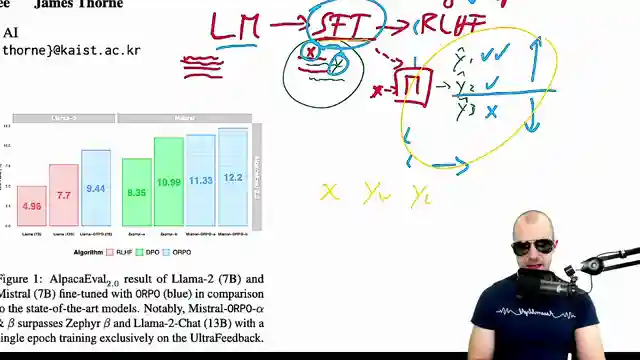

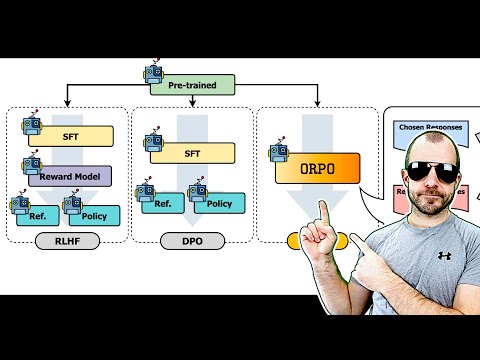

Today, we delve into the world of cutting-edge AI with Yannic Kilcher's breakdown of the ORPO (Odds Ratio Preference Optimization) paper, a revolutionary approach to preference optimization that needs no reference model. The method was developed by the brainiacs at KAIST AI. It aligns a language model with human preferences during instruction tuning itself. The usual route first trains a separate instruction-tuned model and then runs a second alignment stage on top of it, a pipeline about as nimble as navigating a roundabout in a tank. Alignment, in this context, means fine-tuning a model so that its outputs not only follow instructions but also match what people actually prefer. Think of training a dog to fetch you a pint of beer rather than whatever happens to be in the nearest glass.
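For readers who want the math behind the summary, here is the ORPO objective as we understand it from the paper. The notation is lightly adapted, so treat it as a summary rather than a verbatim copy.

The symbols are as follows:
- $x$ is a prompt.
- $y_w$ and $y_l$ are the preferred ("chosen") and rejected responses.
- $m$ is the response length in tokens.
- $\sigma$ is the logistic sigmoid.
- $\lambda$ weights the preference term.

The thing to notice is that only the model being trained, $P_\theta$, appears anywhere. There is no frozen reference model to evaluate.

```latex
\begin{aligned}
P_\theta(y \mid x) &= \exp\!\Big(\tfrac{1}{m}\textstyle\sum_{t=1}^{m} \log P_\theta(y_t \mid x, y_{<t})\Big),
\qquad
\mathrm{odds}_\theta(y \mid x) = \frac{P_\theta(y \mid x)}{1 - P_\theta(y \mid x)} \\[4pt]
\mathcal{L}_{SFT} &= -\tfrac{1}{m}\textstyle\sum_{t=1}^{m} \log P_\theta(y_{w,t} \mid x, y_{w,<t}) \\[4pt]
\mathcal{L}_{OR} &= -\log \sigma\!\left(\log \frac{\mathrm{odds}_\theta(y_w \mid x)}{\mathrm{odds}_\theta(y_l \mid x)}\right) \\[4pt]
\mathcal{L}_{ORPO} &= \mathbb{E}_{(x,\, y_w,\, y_l)}\big[\,\mathcal{L}_{SFT} + \lambda\,\mathcal{L}_{OR}\,\big]
\end{aligned}
```

The first term is ordinary supervised fine-tuning on the chosen response. The second term grows when the model's odds of producing the rejected response approach or exceed its odds of producing the chosen one.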

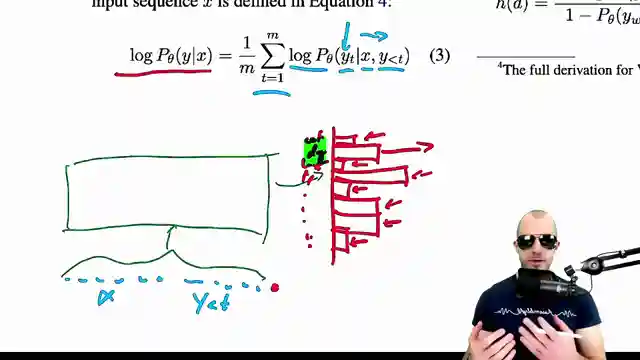

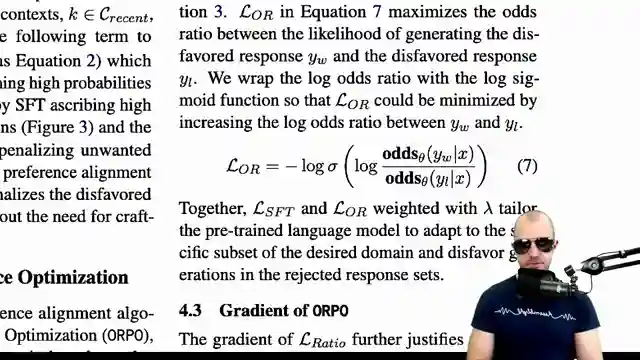

ORPO shakes up the AI landscape by streamlining the alignment process. Typical pipelines juggle several models: a supervised fine-tuned model, a frozen reference model and, in RLHF, a separate reward model. ORPO instead trains a single model in a single stage. It adds an odds ratio loss to the usual supervised fine-tuning loss, which pushes the model to raise the likelihood of preferred responses while penalizing rejected ones. The aim is outputs that are not just accurate but also in line with our preferences. Picture it as teaching a robot to make you a cup of tea exactly the way you like it, without it accidentally serving you a cup of motor oil instead. Dropping the separate fine-tuning stage and the reference model saves time and compute. The paper reports that models trained this way are competitive with, or better than, models aligned through multi-stage pipelines.
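The combined objective is easy to state in code. The sketch below is a minimal, framework-free illustration and not the authors' implementation. It makes a few assumptions:
- You already have per-token log-probabilities for one chosen and one rejected response. In practice these come from the model's logits.
- The function names and the default weight `lam=0.1` are our own illustrative choices.

Following the paper, each response's likelihood is length-normalized (averaged over tokens) before being converted into odds.

```python
import math


def mean_logprob(token_logprobs):
    """Length-normalized log-likelihood: (1/m) * sum_t log P(y_t | x, y_<t)."""
    if not token_logprobs:
        raise ValueError("response must contain at least one token")
    return sum(token_logprobs) / len(token_logprobs)


def log_odds(avg_logprob):
    """log odds(y|x) = log P - log(1 - P), where P = exp(avg_logprob).

    Requires avg_logprob < 0 (so P < 1); log1p(-exp(a)) computes log(1 - P) accurately.
    """
    if avg_logprob >= 0:
        raise ValueError("average log-probability must be negative")
    return avg_logprob - math.log1p(-math.exp(avg_logprob))


def softplus(z):
    """Numerically stable log(1 + exp(z)); note that -log(sigmoid(z)) == softplus(-z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def orpo_loss(chosen_logprobs, rejected_logprobs, lam=0.1):
    """Return (total, sft_term, odds_ratio_term) for a single preference pair.

    lam is a hypothetical, illustrative weight for the odds-ratio term.
    """
    avg_chosen = mean_logprob(chosen_logprobs)
    avg_rejected = mean_logprob(rejected_logprobs)

    # SFT term: ordinary token-averaged negative log-likelihood of the chosen response.
    sft_term = -avg_chosen

    # Odds-ratio term: -log sigmoid(log odds(chosen) - log odds(rejected)).
    log_odds_ratio = log_odds(avg_chosen) - log_odds(avg_rejected)
    or_term = softplus(-log_odds_ratio)

    return sft_term + lam * or_term, sft_term, or_term


# Example: the model already gives higher per-token probability to the chosen answer.
total, sft, odds_term = orpo_loss([-0.5, -0.7, -0.3], [-1.2, -2.0, -1.6])
print(f"total={total:.4f}  sft={sft:.4f}  odds_ratio={odds_term:.4f}")
```

When both responses are equally likely, the odds-ratio term equals log 2 (about 0.693). It drops below that when the model favours the chosen response and rises above it when the model favours the rejected one. In a real training loop, the same arithmetic would run on batched tensors so that gradients can flow back into the model.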

The ORPO paper presents a game-changing answer to the long-standing challenge of aligning AI models with human preferences. It combines supervised fine-tuning and an odds ratio loss in one objective, turning alignment into a single, seamless training run rather than a multi-stage pipeline. It's like upgrading your trusty old car with a jet engine: suddenly, you're not just driving, you're flying. So buckle up, because with ORPO leading the way, the future of AI looks brighter and more aligned than ever before.

Image copyright YouTube

Watch ORPO: Monolithic Preference Optimization without Reference Model (Paper Explained) on YouTube

Viewer Reactions for ORPO: Monolithic Preference Optimization without Reference Model (Paper Explained)

- Viewers appreciate the technical content and in-depth analysis provided in the video
- Positive feedback on the explanation and insights given by the creator
- Comments on the mathematical aspects discussed in the video
- Mention of research from South Korea being represented
- Speculation on the potential impact of the discussed paper in the data science community
- Comments on the loss function and its simplification
- Confusion and requests for clarification on certain concepts discussed in the video
- Speculation and discussion on the potential success of certain investment opportunities like Cyberopolis
- Appreciation for the creator's explanations and content
- Concern and well wishes for the creator's well-being due to a gap in posting videos
