Unlocking Performance: QLoRA for Fine-Tuning Large Language Models

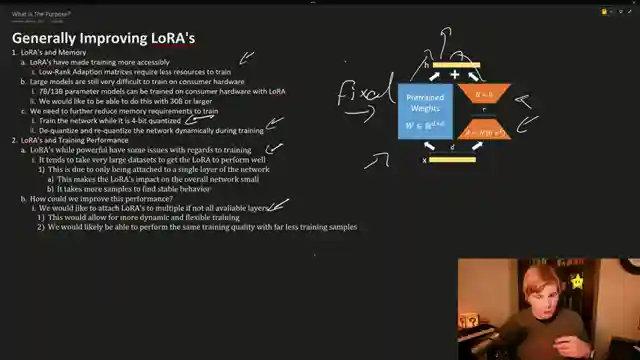

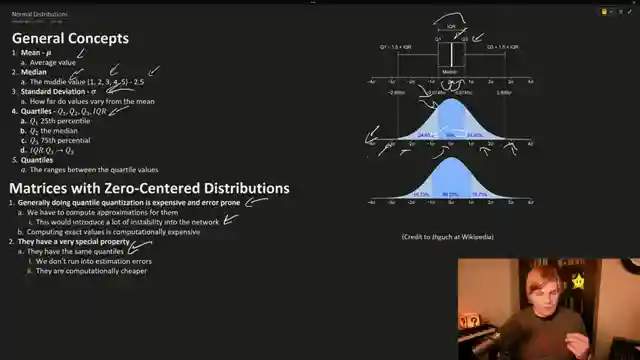

Today on AemonAlgiz, we dive into fine-tuning large language models with QLoRA. The team presents an approach that makes it possible to train 30-billion-parameter models on consumer hardware by cutting memory requirements through quantization, with weights de-quantized on the fly as they are needed. By attaching LoRAs to multiple layers, the approach aims to improve training efficiency and address the challenges faced by traditional methods. The mathematics behind QLoRA is rooted in statistics: because neural network weights are approximately normally distributed and centered on zero, the quantization levels can be placed where the weights actually fall, which offers a streamlined path to quantization.
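To make the idea of quantizing normally distributed weights concrete, here is a minimal sketch in plain Python. It is a simplified illustration, not the exact 4-bit NormalFloat construction: the 16 levels are placed at evenly spaced quantiles of a standard normal distribution and scaled to [-1, 1], and each block of weights is scaled by its absolute maximum before the nearest level is stored. The function names, the block size of 64, and the weight standard deviation of 0.02 are assumptions chosen for illustration.

```python
import random
from statistics import NormalDist


def normal_quantile_levels(bits=4):
    """Return 2**bits levels at evenly spaced quantiles of N(0, 1), scaled to [-1, 1]."""
    n = 2 ** bits
    nd = NormalDist()
    # Midpoint probabilities avoid the infinite quantiles at p=0 and p=1.
    quantiles = [nd.inv_cdf((i + 0.5) / n) for i in range(n)]
    top = max(abs(q) for q in quantiles)
    return [q / top for q in quantiles]


def quantize_block(weights, levels):
    """Scale a block by its absolute maximum and store the index of the nearest level."""
    absmax = max(abs(w) for w in weights) or 1.0  # guard against an all-zero block
    codes = [
        min(range(len(levels)), key=lambda i: abs(levels[i] - w / absmax))
        for w in weights
    ]
    return codes, absmax


def dequantize_block(codes, absmax, levels):
    """Recover approximate weights from the stored codes and the block's scale factor."""
    return [levels[c] * absmax for c in codes]


random.seed(0)
levels = normal_quantile_levels()
block = [random.gauss(0.0, 0.02) for _ in range(64)]  # hypothetical weight block
codes, absmax = quantize_block(block, levels)
restored = dequantize_block(codes, absmax, levels)
max_err = max(abs(a - b) for a, b in zip(block, restored))
print(f"absmax={absmax:.4f} max_error={max_err:.4f}")
```

Because the levels crowd around zero, where most normally distributed weights sit, small weights are represented more finely than they would be on an evenly spaced 4-bit grid. Each block needs only its 4-bit codes plus one scale factor.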

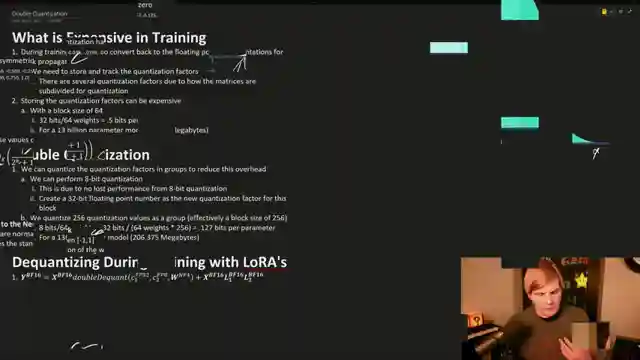

The team also introduces double quantization, which saves further memory during training by quantizing the quantization factors themselves, in groups. With QLoRA, the pre-trained model's weights are stored as 4-bit NormalFloat values and are de-quantized and re-quantized dynamically during training. This improves the scalability of fine-tuning large language models. Paged optimizers keep training running smoothly by absorbing the memory spikes that would otherwise cause out-of-memory errors, paving the way for attaching LoRA adapters to every layer for better training performance.
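The saving from double quantization can be estimated with a little arithmetic. The sketch below is a back-of-the-envelope calculation, not a measurement. It assumes the figures commonly cited for QLoRA: blocks of 64 weights, a 32-bit scale factor per block before double quantization, and afterwards 8-bit scale factors grouped 256 at a time with one 32-bit second-level factor per group. The function names are hypothetical.

```python
def single_quant_overhead(block_size, const_bits):
    """Extra bits per weight when each block stores one full-precision scale factor."""
    return const_bits / block_size


def double_quant_overhead(block_size, group_size, quantized_const_bits, second_level_bits):
    """Extra bits per weight when the scale factors are themselves quantized in groups."""
    first_level = quantized_const_bits / block_size
    second_level = second_level_bits / (block_size * group_size)
    return first_level + second_level


# Assumed settings (see the lead-in); adjust them to match your own setup.
single = single_quant_overhead(block_size=64, const_bits=32)
double = double_quant_overhead(
    block_size=64, group_size=256, quantized_const_bits=8, second_level_bits=32
)

n_params = 30e9  # a 30-billion-parameter model
saved_gb = (single - double) * n_params / 8 / 1e9

print(f"overhead without double quantization: {single:.3f} bits per weight")
print(f"overhead with double quantization:    {double:.3f} bits per weight")
print(f"estimated saving for 30B parameters:  {saved_gb:.2f} GB")
```

Under these assumptions the per-weight overhead drops from half a bit to roughly an eighth of a bit, which adds up to more than a gigabyte on a model of this size.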

QLoRA emerges as a game-changer, outperforming traditional methods and enabling training on a broader range of hardware, including 30-billion-parameter models on 24 GB of VRAM. Its strength lies in the ability to fine-tune large language models with precision and control. Join AemonAlgiz as they explore what this makes possible for large language models.
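A rough memory budget shows why a 30-billion-parameter model can fit on a 24 GB card. The sketch below counts only the frozen base weights and is an estimate under stated assumptions: 4 bits per weight plus about 0.127 bits per weight of quantization overhead. Activations, optimizer state, and framework overhead are not modeled. The layer dimensions and rank in the LoRA example are hypothetical.

```python
def quantized_weight_gb(n_params, bits_per_weight=4.0, overhead_bits=0.127):
    """Approximate size in GB of the frozen, quantized base weights."""
    return n_params * (bits_per_weight + overhead_bits) / 8 / 1e9


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters a LoRA adapter adds to one d_in x d_out linear layer."""
    # The adapter is two small matrices: (d_in x rank) and (rank x d_out).
    return rank * (d_in + d_out)


n_params = 30e9
fp16_gb = n_params * 16 / 8 / 1e9        # the same weights held in 16-bit precision
base_gb = quantized_weight_gb(n_params)  # the same weights held in 4-bit form

# Hypothetical layer: a 4096 x 4096 projection with a rank-8 adapter.
adapter_params = lora_param_count(d_in=4096, d_out=4096, rank=8)

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f"4-bit weights:  {base_gb:.1f} GB")
print(f"headroom on a 24 GB card: {24 - base_gb:.1f} GB")
print(f"trainable parameters per adapted layer: {adapter_params}")
```

The adapters are tiny next to the base weights, so even attaching one to every layer leaves most of the remaining headroom for activations and optimizer state.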

Image copyright Youtube

Watch "QLoRA Is More Than Memory Optimization. Train Your Models With 10% of the Data for More Performance." on YouTube

Viewer Reactions for "QLoRA Is More Than Memory Optimization. Train Your Models With 10% of the Data for More Performance."

Viewer impressed by unique and detailed explanations distinguishing the channel

Viewer interested in developing their own database for training a model

Viewer appreciates the quick response to new developments

Viewer hopes the channel gets recognition for content quality

Viewer finds the video helpful in understanding QLoRA

Viewer looking forward to a walkthrough on QLoRA

Viewer requests information on training scripts for QLoRA

Viewer interested in using QLoRA on Falcon 40B

Viewer asks about the relevance of QLoRA for diffusion model fine-tuning

Viewer shares experience of running QLoRA on LLaMA 65B
