Running Llama 2 on Your Local Machine: Abhishek Thakur's Guide

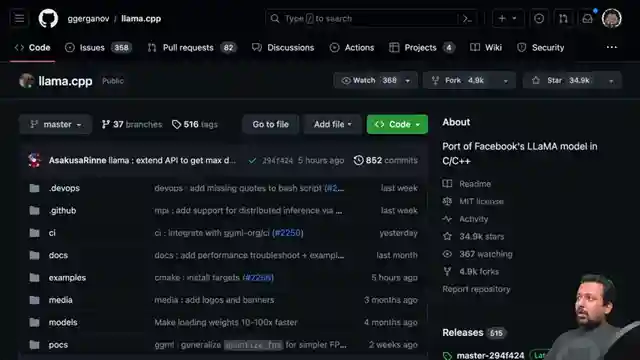

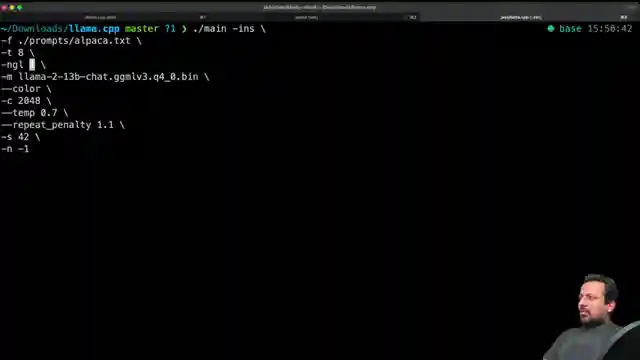

Today on Abhishek Thakur's channel, he takes us on a thrilling ride through running the Llama 2 13-billion-parameter chat model on your very own local machine. But hold on tight, because this isn't just about Ubuntu - Abhishek also shows how to pull it off on Mac computers, including the M1 and M2 models. The llama.cpp library, a C/C++ port of Facebook's LLaMA model, runs on macOS, Linux, and Windows. And because a Hugging Face user named TheBloke has already converted the models to the GGML format that llama.cpp loads, the path to running them is clear.

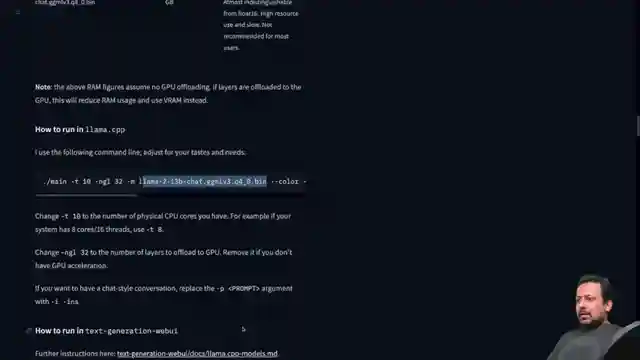

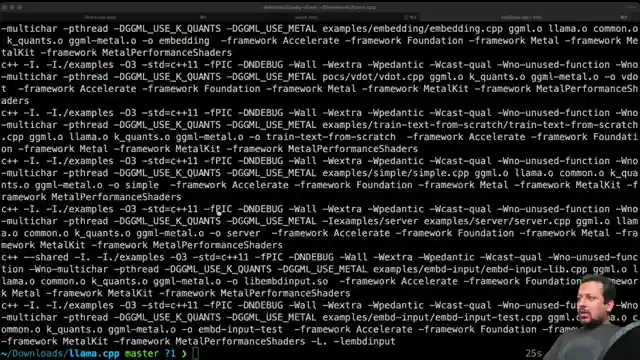

The journey begins with cloning the llama.cpp repository and putting LLAMA_METAL=1 in front of the make command, which builds the library with Metal GPU support on Apple hardware. A quick download of the model file with wget sets the stage for running the compiled executable, which accepts a long list of arguments for customization. By passing a prompt file with instructions, Abhishek shows how quickly the model generates a response.
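The macOS steps above can be sketched as a small Python helper that only assembles the commands, so nothing is cloned, built, or downloaded when it runs. This is a sketch under stated assumptions, not the exact commands from the video: the repository URL, the model file name, and the prompt file name are placeholders, and the `./main` executable with its `-m`, `-f`, `-n`, and `-ngl` options reflects the Makefile-based llama.cpp builds of that period. Newer releases may use different names.

```python
import shlex

# Placeholder paths (assumptions): point these at your own files.
MODEL_PATH = "models/llama-2-13b-chat.ggml.bin"  # hypothetical file name
PROMPT_FILE = "prompt.txt"                        # hypothetical prompt file


def clone_and_build_steps():
    """Shell steps for cloning llama.cpp and building it with Metal enabled."""
    return [
        # Assumed repository location; not stated in the article.
        "git clone https://github.com/ggerganov/llama.cpp",
        "cd llama.cpp",
        # The environment variable in front of make turns on Metal support.
        "LLAMA_METAL=1 make",
    ]


def run_command(model=MODEL_PATH, prompt_file=PROMPT_FILE,
                n_predict=256, gpu_layers=1):
    """Argument list for the `main` executable produced by the build."""
    return [
        "./main",
        "-m", model,              # path to the GGML model file
        "-f", prompt_file,        # read the prompt from a file
        "-n", str(n_predict),     # number of tokens to generate
        "-ngl", str(gpu_layers),  # layers to offload to the GPU
    ]


if __name__ == "__main__":
    for step in clone_and_build_steps():
        print(step)
    print(shlex.join(run_command()))
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere. To actually launch the model, the list returned by `run_command()` could be passed to `subprocess.run` from inside the llama.cpp directory.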

Transitioning to Ubuntu, Abhishek enables GPU usage by building with LLAMA_CUBLAS=1, which takes some care in setting up the conda environment. Running the model on a single GPU reveals its appetite for video memory: it consumes around 10 GB while still generating responses quickly. Abhishek even contemplates dropping his ChatGPT subscription in favor of the local Llama setup. Buckle up, hit the like button, subscribe, and spread the word - because the Llama 2 model is here to change your local machine experience.
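For the Ubuntu build, a rough sketch of the GPU-enabled compile step and a way to check video memory usage might look like the following. It assumes the build flag in question is `LLAMA_CUBLAS=1` (the cuBLAS option in llama.cpp's Makefile at the time) and that `nvidia-smi` is installed. The helper names are made up for illustration, and the sample value is invented to exercise the parser, not a measurement.

```python
import subprocess


def cublas_build_command():
    """Environment override and argv for a cuBLAS-enabled build (assumed flag)."""
    return {"LLAMA_CUBLAS": "1"}, ["make"]


def parse_memory_used_mib(nvidia_smi_output):
    """Parse per-GPU memory usage in MiB from nvidia-smi's CSV output."""
    return [
        int(line.strip())
        for line in nvidia_smi_output.splitlines()
        if line.strip()
    ]


def query_memory_used_mib():
    """Ask nvidia-smi how much memory each GPU is using right now."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used",
         "--format=csv,noheader,nounits"],
        check=True, capture_output=True, text=True,
    )
    return parse_memory_used_mib(result.stdout)


if __name__ == "__main__":
    env, argv = cublas_build_command()
    print(" ".join(f"{key}={value}" for key, value in env.items()), *argv)
    # Invented sample output for a single GPU, used only to show the parser.
    print(parse_memory_used_mib("10240\n"))
```

Calling `query_memory_used_mib()` while the model is loaded gives one number per GPU, which makes it easy to compare against the roughly 10 GB figure reported for a single card.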

Image copyright YouTube

Watch "Run LLAMA-v2 chat locally" on YouTube

Viewer Reactions for Run LLAMA-v2 chat locally

Mention of fast speed and helpfulness of the video

Discussion on examples/server having a frontend and deploying it behind a REST API

Specific instructions for running a command at 4:44 in the video

Mention of missing window around 3:20 for downloading a file

Request for a tutorial on fine-tuning with llama.cpp on a local machine

Explanation of cuBLAS and its relation to NVIDIA CUDA Technology

Error loading model with unknown combination and request for a fix

Inquiry about running llama.cpp + Llama 2 + Hugging Face Chat

Question on maximizing GPU and CPU usage with llama-cpp-python

Inquiry about .bin files containing model weights
