Mastering LLM Training in 50 Lines: Abhishek Thakur's Expert Guide

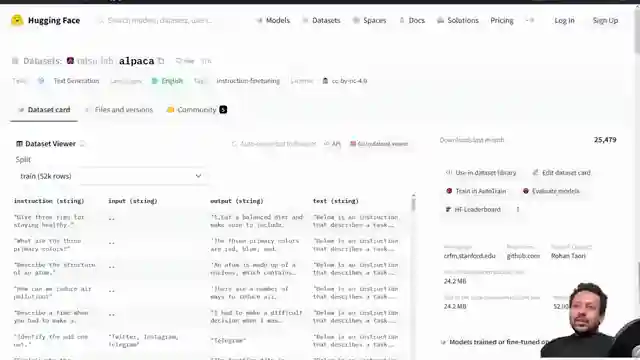

In this episode, Abhishek Thakur shows how to train an LLM in roughly 50 lines of code. He works with the "alpaca" dataset, which contains columns such as input and output, and uses it to introduce instruction-based models. Using an example instruction that asks the model to spot the odd one out in a set, he stresses that every training example must follow the same format if the fine-tuned LLM is to perform well. With the data format settled, Abhishek runs the training on his home GPU rather than in the cloud.
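To make the point about uniform formatting concrete, here is a minimal sketch in plain Python. The field names (`instruction`, `input`, `output`), the `### ...` section headers, and the sample records are assumptions chosen for illustration; they are not taken from the video and may differ from the template Abhishek uses.

```python
# Hypothetical Alpaca-style prompt templates (assumed layout, not the video's).
PROMPT_WITH_INPUT = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)
PROMPT_NO_INPUT = (
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n{output}"
)


def format_example(record: dict) -> str:
    """Render one record into a single training string with a fixed layout."""
    instruction = record["instruction"].strip()
    context = record.get("input", "").strip()
    output = record["output"].strip()
    if context:
        return PROMPT_WITH_INPUT.format(
            instruction=instruction, input=context, output=output
        )
    # Records without extra context simply omit the "Input" section.
    return PROMPT_NO_INPUT.format(instruction=instruction, output=output)


# Made-up records for illustration only.
records = [
    {
        "instruction": "Identify the odd one out.",
        "input": "apple, banana, carrot",
        "output": "carrot",
    },
    {"instruction": "Name a primary color.", "input": "", "output": "Red"},
]

texts = [format_example(r) for r in records]
print(texts[0])
```

Every record passes through the same function, so the model only ever sees one layout during training. The same layout would then be used when prompting the model at inference time.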

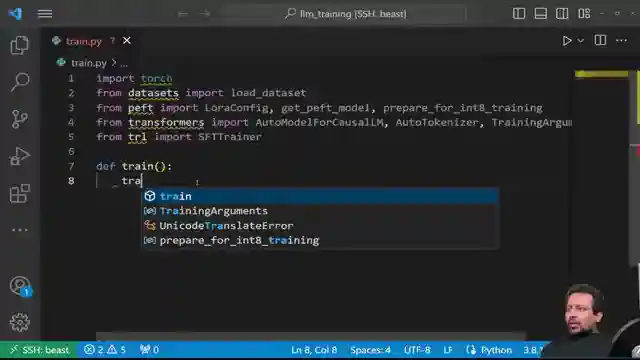

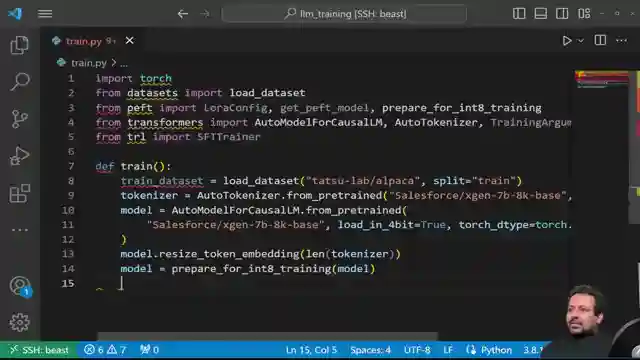

Working in VS Code, Abhishek imports the key libraries, Torch, Transformers, and TRL, and then builds the training function step by step: loading the dataset, selecting a tokenizer, and loading a Salesforce model. The model is then configured for int8 training, a key step in the fine-tuning process that lowers the memory needed to hold the model's weights.
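The libraries handle int8 quantization internally, and the sketch below is not their implementation. It only illustrates the basic idea behind storing weights as 8-bit integers, using simple absmax scaling in plain Python. The function names and sample weights are hypothetical.

```python
def quantize_absmax(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared absmax scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid division by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


# Made-up weights for illustration only.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)

# The round trip is lossy: each value is off by at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four (float32) or two (float16), at the cost of a small rounding error. Production libraries typically use finer-grained scales than the single shared scale assumed here.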

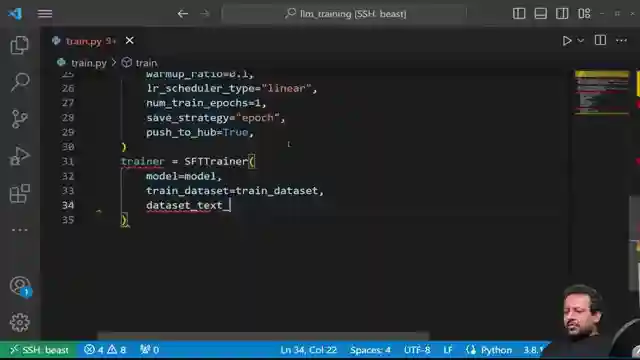

With the model in place, Abhishek sets the training arguments, covering batch size, optimizer selection, and the learning rate. He runs into a few errors along the way and works through them on screen. He closes by pointing to AutoTrain, a Hugging Face tool that aims to streamline LLM training, and promises future episodes with a closer look at how TRL's SFTTrainer works and how data should be formatted for it.
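The interplay between batch size and learning rate mentioned above can be sketched with a little arithmetic. All numbers below (batch size, accumulation steps, dataset size, peak learning rate, warmup length) are hypothetical and not the values used in the video. The schedule shown is a common linear warmup followed by linear decay, which is an assumption about the setup rather than something the video confirms.

```python
import math


def effective_batch_size(per_device_batch: int, grad_accum_steps: int,
                         num_devices: int = 1) -> int:
    """Number of examples that contribute to one optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def total_optimizer_steps(num_examples: int, batch: int, epochs: int) -> int:
    """Optimizer updates over the whole run (last partial batch counts)."""
    return math.ceil(num_examples / batch) * epochs


def linear_warmup_decay(step: int, total_steps: int, warmup_steps: int,
                        peak_lr: float) -> float:
    """Learning rate at a given step: linear ramp up, then linear ramp down."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / max(total_steps - warmup_steps, 1)


# Hypothetical settings for a single home GPU.
batch = effective_batch_size(per_device_batch=4, grad_accum_steps=8)
steps = total_optimizer_steps(num_examples=1000, batch=batch, epochs=3)

for s in (0, 5, 10, steps):
    print(s, linear_warmup_decay(s, steps, warmup_steps=10, peak_lr=2e-4))
```

Gradient accumulation lets a small per-device batch behave like a larger one, which is useful when GPU memory is limited. The total step count also determines how quickly the learning rate decays.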

Image copyright YouTube

Watch "Train LLMs in just 50 lines of code!" on YouTube

Viewer Reactions for "Train LLMs in just 50 lines of code!"

Request for a guide on RewardTrainer component of TRL

Request for a part 2 on making inference using the model trained in the tutorial

Query about where the model is saved after training

Request for a complete playlist on LLMs

Inquiry about training an LLM on a Databricks cluster with multiple GPUs

Question regarding the format of datasets for training LLMs

Request for a tutorial on hosting an LLM in the cloud or on LLMOps

Inquiry about rectifying an error related to package installation

Query about testing trained models

Request for a video using generative models for embeddings in a classification task

Related Articles

Revolutionizing Image Description Generation with InstructBlip and Hugging Face Transformers

Abhishek Thakur explores cutting-edge image description generation using InstructBlip and Hugging Face Transformers. Leveraging Vicuna and Flan T5, the team crafts detailed descriptions, saves them in a CSV file, and creates embeddings for semantic search, culminating in a user-friendly Gradio demo.

Ultimate Guide: Creating AI QR Codes with Python & Hugging Face Library

Learn how to create AI-generated QR codes using Python and the Hugging Face library, Diffusers, in this exciting tutorial by Abhishek Thakur. Explore importing tools, defining models, adjusting parameters, and generating visually stunning QR codes effortlessly.

Unveiling Salesforce's XGen: Efficient 7B LLM Model for Summarization

Explore Salesforce's XGen model, a 7B LLM trained with an 8K input sequence length. Learn about its Apache 2.0 license, versatile applications, and efficient summarization capabilities in this informative video by Abhishek Thakur.
