Mastering Text Generation: Local Chatbot Deployment and Model Fine-Tuning

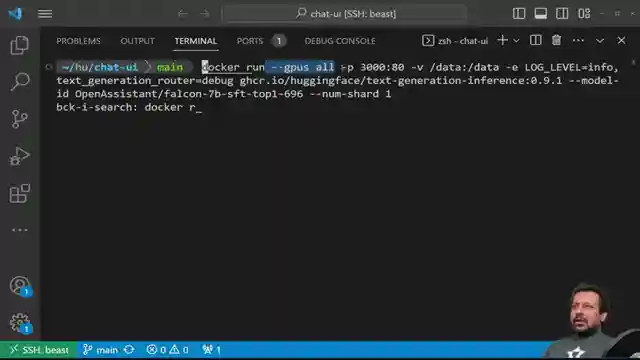

In this episode from Abhishek Thakur's channel, he demonstrates a chatbot that drafts emails and runs entirely on his local machine. The real focus, though, is deploying large language models with the Text Generation Inference (TGI) library from Hugging Face. Abhishek walks through setting up the Falcon 7B model step by step, a task that demands patience and precision.

Abhishek starts with the groundwork for a local install of TGI: Rust, Protoc, and Flash Attention. Building Flash Attention is laborious, so he turns to the TGI Docker container, which streamlines the setup. With the container in place, a single TGI launch command starts the model server. Abhishek goes through the command's options one by one and shows the model responding quickly across a range of prompts.
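The Docker-based launch described above can be sketched in Python as a small helper that assembles the `docker run` arguments. This is a minimal sketch, not the exact command from the video: the image tag, the model ID `tiiuae/falcon-7b-instruct`, the port mapping, and the `./data` cache directory are all assumptions, and `build_tgi_command` is a hypothetical helper name.

```python
import shlex
from pathlib import Path

# Assumption: the public TGI image on GitHub Container Registry, "latest" tag.
TGI_IMAGE = "ghcr.io/huggingface/text-generation-inference:latest"


def build_tgi_command(model_id, host_port=8080, data_dir="./data"):
    """Return the `docker run` argument list that would start a TGI server.

    Nothing is executed here; the caller decides whether to run the command.
    """
    # Docker bind mounts are safest with absolute host paths.
    cache_dir = Path(data_dir).resolve()
    return [
        "docker", "run",
        "--gpus", "all",                 # expose the GPUs to the container
        "--shm-size", "1g",              # shared memory for multi-process work
        "-p", f"{host_port}:80",         # the server listens on port 80 inside
        "-v", f"{cache_dir}:/data",      # cache downloaded weights on the host
        TGI_IMAGE,
        "--model-id", model_id,          # Hugging Face Hub model to serve
    ]


if __name__ == "__main__":
    # Assumption: an instruction-tuned Falcon 7B checkpoint from the Hub.
    command = build_tgi_command("tiiuae/falcon-7b-instruct")
    print(shlex.join(command))
```

Printing the command instead of running it keeps the sketch safe to try on a machine without a GPU; passing the list to `subprocess.run` would start the server.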

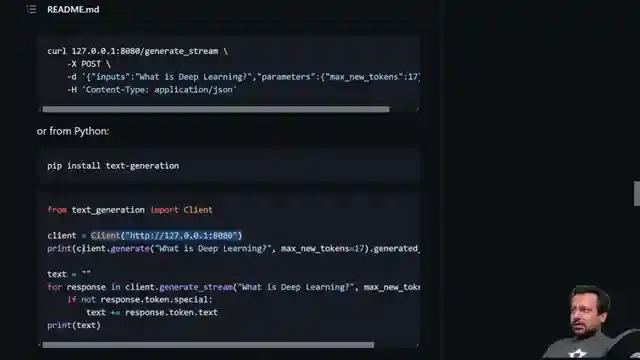

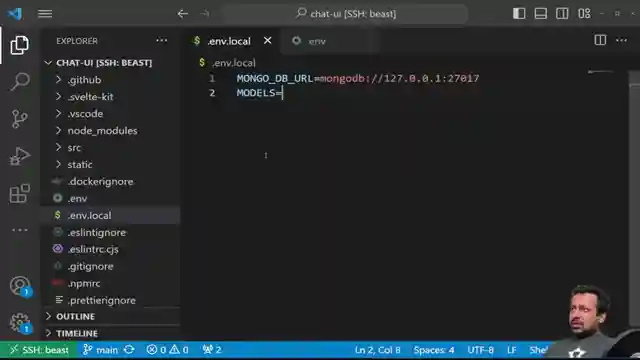

With the server running, Abhishek tries the Falcon 7B model through the browser interface, then queries it from the Python client for TGI, which shows how approachable these tools are. From there he introduces Chat UI by Hugging Face, a chat front end for the model server. Setting it up requires npm and a MongoDB instance.
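Querying the running server from Python can be sketched with the standard library alone. The sketch below posts to TGI's `/generate` REST endpoint rather than using the dedicated Python client shown in the video; the base URL, the `max_new_tokens` default, and the helper names `build_generate_request` and `generate` are assumptions made for illustration.

```python
import json
import urllib.request


def build_generate_request(prompt, base_url="http://127.0.0.1:8080",
                           max_new_tokens=64):
    """Build a POST request for the TGI `/generate` endpoint."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        url=f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the prompt to a running TGI server and return the generated text."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())["generated_text"]
```

With a server listening on the assumed address, `generate("Write a short email asking for a day off.")` would return the completion as a string. Keeping the request builder separate from the network call makes the payload easy to inspect without a running server.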

Abhishek then shows Chat UI connected to the model, generating coherent text and retaining context across the conversation. He points out that the same setup can be deployed in production settings, and he encourages viewers to explore fine-tuning models on private datasets as a route to language models tailored to their own needs. The episode closes with a look ahead at what locally hosted, AI-driven communication tools make possible.
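Connecting Chat UI to a local model server comes down to a small environment file. The sketch below renders one in Python. The variable names `MONGODB_URL` and `MODELS`, the backtick-wrapped JSON value, and the `/generate_stream` endpoint follow the Chat UI README as it stood around the time of the video, and should be treated as assumptions: the schema has changed between releases, so check the README of the version you install. The helper name `render_chat_ui_env` and all default URLs are hypothetical.

```python
import json


def render_chat_ui_env(model_name,
                       tgi_url="http://127.0.0.1:8080",
                       mongodb_url="mongodb://localhost:27017"):
    """Render the text of a `.env.local` file for Chat UI.

    Assumption: Chat UI reads MONGODB_URL for chat history storage and
    MODELS as a JSON list wrapped in backticks.
    """
    models = [
        {
            "name": model_name,
            # Assumption: Chat UI streams tokens from TGI's streaming endpoint.
            "endpoints": [{"url": f"{tgi_url}/generate_stream"}],
        }
    ]
    lines = [
        f"MONGODB_URL={mongodb_url}",
        f"MODELS=`{json.dumps(models)}`",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the file contents; redirect to .env.local in the Chat UI checkout.
    print(render_chat_ui_env("tiiuae/falcon-7b-instruct"), end="")
```

Once the file is in place and MongoDB is running, the usual npm workflow (`npm install`, then `npm run dev`) should start the interface.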

Image copyright YouTube

Watch Deploy FULLY PRIVATE & FAST LLM Chatbots! (Local + Production) on YouTube

Viewer Reactions for Deploy FULLY PRIVATE & FAST LLM Chatbots! (Local + Production)

Setting up WSL 2 and Docker on Windows can be time-consuming

Request for a tutorial on multiple instance learning

Questions about supported models and where to find a list of them

Difficulty running the Docker container on a Mac M1

Inquiry about training an LLM with a tabular dataset

Query on using LangChain to improve model capabilities

Concerns about running Chat UI on Chrome

Request for a video tutorial on creating a chatbot using Google Colab or Kaggle notebooks

Challenges faced in deploying TGI on a cloud instance

Interest in training the model with personal data

Related Articles

Revolutionizing Image Description Generation with InstructBLIP and Hugging Face Transformers

Abhishek Thakur explores image description generation using InstructBLIP and Hugging Face Transformers. Using Vicuna and Flan-T5 variants of the model, he generates detailed descriptions, saves them in a CSV file, and creates embeddings for semantic search, culminating in a user-friendly Gradio demo.

Ultimate Guide: Creating AI QR Codes with Python & Hugging Face Library

Learn how to create AI-generated QR codes using Python and the Hugging Face library, Diffusers, in this exciting tutorial by Abhishek Thakur. Explore importing tools, defining models, adjusting parameters, and generating visually stunning QR codes effortlessly.

Unveiling Salesforce's XGen: Efficient 7B LLM Model for Summarization

Explore Salesforce's XGen model, a 7B LLM trained on an 8K input sequence. Learn about its Apache 2.0 license, versatile applications, and efficient summarization capabilities in this informative video by Abhishek Thakur.

Mastering LLM Training in 50 Lines: Abhishek Thakur's Expert Guide

Abhishek Thakur demonstrates training LLMs in 50 lines of code using the "alpaca" dataset. He emphasizes data formatting consistency for optimal results, showcasing the process on his home GPU. Explore the world of AI training with key libraries and fine-tuning techniques.