Unveiling Salesforce's XGen: Efficient 7B LLM for Summarization

Today on Abhishek Thakur's channel, we delved into Salesforce's latest release, XGen. This 7B-parameter LLM is no ordinary model: it was trained with an 8K input sequence length. Architecturally it is similar to the standard LLaMA model, but with a twist: it was trained on up to 1.5 trillion tokens. What sets XGen apart is that both the codebase and the models are available, and the base models are released under the Apache 2.0 license, which allows commercial use. The XGen-7B-4K and XGen-7B-8K base models are both up for grabs under that license, making XGen a versatile option for a wide range of applications.
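The 8K training length matters in practice because the prompt and the generated summary share a single context window. The sketch below shows that token-budget arithmetic. It assumes that "8K" and "4K" mean windows of 8,192 and 4,096 tokens shared by input and output; the helper names are hypothetical and not part of any XGen API.

```python
# Token-budget helpers for deciding how much input text fits in one request.
# Assumption: "8K" and "4K" mean 8,192 and 4,096 tokens, shared by prompt and output.
XGEN_8K_CONTEXT = 8192
XGEN_4K_CONTEXT = 4096


def max_input_tokens(max_new_tokens: int, context_len: int = XGEN_8K_CONTEXT) -> int:
    """Return how many prompt tokens remain after reserving room for the output."""
    if not 0 <= max_new_tokens < context_len:
        raise ValueError("max_new_tokens must be in [0, context_len)")
    return context_len - max_new_tokens


def truncate_ids(ids: list[int], max_new_tokens: int,
                 context_len: int = XGEN_8K_CONTEXT) -> list[int]:
    """Keep the leading tokens of an over-long input so input + output fit the window.

    Hypothetical helper: apply it to the article's tokens before adding any
    prompt wrapper, so the instruction text itself is never cut off.
    """
    return ids[:max_input_tokens(max_new_tokens, context_len)]
```

For example, reserving 512 tokens for the summary leaves 7,680 input tokens in the 8K window but only 3,584 in the 4K one, which is why the 8K variant suits longer documents.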

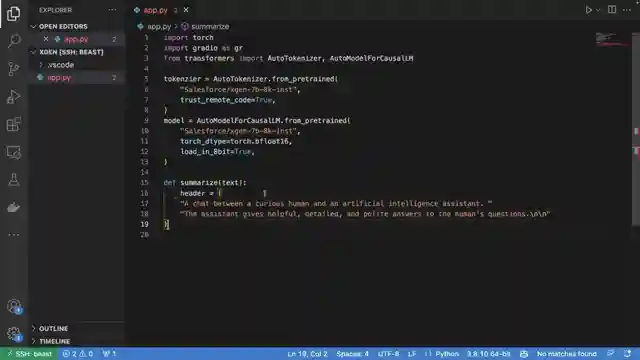

The team also looked at the instruction fine-tuned models, cautioning that these are released strictly for research purposes. The models use OpenAI's tiktoken tokenizer and are otherwise used much like a LLaMA model. Moving on to the practical side, a simple summarizer application was built with PyTorch, Gradio, and Transformers. Once the tokenizer and model are loaded, the app feeds the input text to the model and returns the generated summary; parameters such as max length and temperature control how long and how varied that summary can be.
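The summarizer flow described above can be sketched as follows. This is not the code from the video: it assumes the Hugging Face checkpoint `Salesforce/xgen-7b-8k-inst` (research use only), a chat-style prompt modeled on the example in that model card, and `max_new_tokens` in place of the video's max length setting. `trust_remote_code=True` is needed because the tiktoken-based tokenizer ships as custom code (the `tiktoken` package must be installed), and `device_map="auto"` requires the `accelerate` package. All function names are hypothetical.

```python
# Sketch of a summarizer in the spirit of the video (not its actual code).
# Assumptions: checkpoint id, prompt wording, and default parameter values.
MODEL_ID = "Salesforce/xgen-7b-8k-inst"  # instruction-tuned 8K model, research use only
EOS_TOKEN_ID = 50256  # <|endoftext|> id used in the model card example; re-check it

HEADER = (
    "A chat between a curious human and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the human's questions.\n\n"
)


def build_prompt(text: str) -> str:
    """Wrap the input text in a chat-style summarization request."""
    return HEADER + f"### Human: Please summarize the following article.\n\n{text.strip()}\n###"


def extract_summary(generated: str) -> str:
    """Clean the decoded continuation: drop end tokens, later turns, and the speaker tag."""
    text = generated.replace("<|endoftext|>", "")
    text = text.split("###")[0]  # stop at the next chat turn, if the model wrote one
    return text.replace("Assistant:", "", 1).strip()


def load_model(model_id: str = MODEL_ID):
    """Load tokenizer and model (heavy imports stay local so the helpers above import cheaply)."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # The tiktoken-based tokenizer ships as custom code, hence trust_remote_code.
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    # device_map="auto" needs the accelerate package; bfloat16 halves memory vs float32.
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )
    return tokenizer, model


def summarize(text, tokenizer, model, max_new_tokens=256, temperature=0.7):
    """Generate a summary; temperature <= 0 switches to greedy decoding."""
    prompt = build_prompt(text)
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    gen_kwargs = {"max_new_tokens": max_new_tokens, "eos_token_id": EOS_TOKEN_ID}
    if temperature > 0:
        gen_kwargs.update(do_sample=True, temperature=temperature)
    else:
        gen_kwargs["do_sample"] = False
    output_ids = model.generate(**inputs, **gen_kwargs)
    new_ids = output_ids[0][inputs["input_ids"].shape[1]:]  # skip the echoed prompt
    return extract_summary(tokenizer.decode(new_ids))


def build_app(tokenizer, model):
    """Wire summarize() into a small Gradio UI with length and temperature controls."""
    import gradio as gr

    def run(text, max_new_tokens, temperature):
        return summarize(text, tokenizer, model, int(max_new_tokens), float(temperature))

    return gr.Interface(
        fn=run,
        inputs=[
            gr.Textbox(lines=12, label="Text to summarize"),
            gr.Slider(minimum=32, maximum=1024, value=256, step=32, label="Max new tokens"),
            gr.Slider(minimum=0.0, maximum=1.5, value=0.7, step=0.1, label="Temperature"),
        ],
        outputs=gr.Textbox(label="Summary"),
        title="XGen-7B summarizer",
    )
```

To run it, call `tokenizer, model = load_model()` and then `build_app(tokenizer, model).launch()`. Keeping the prompt and cleanup helpers free of heavy imports means they can be checked without downloading the 7B weights.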

The application was put to the test, generating concise and accurate summaries from text inputs. The XGen 8K model stood out: its longer input window, together with training on 1.5 trillion tokens, overcomes the shorter context limits of many earlier open models. In the demo its summaries were of high quality, making a strong case for it in summarization tasks. Viewers were encouraged to engage by asking questions, leaving comments, and showing support through subscriptions and shares. As the video wrapped up, it was evident that XGen had left a lasting impression, setting the stage for future explorations of AI models and applications.

Image copyright Youtube

Watch Building a summarizer using XGen-7b: Fully open source LLM by Salesforce on Youtube

Viewer Reactions for Building a summarizer using XGen-7b: Fully open source LLM by Salesforce

Tips on quantizing via GPTQ and optimizing for inference latency

Can it summarize conversation transcripts?

Hardware specifications for running this

Quality comparison with WizardLM and Vicuna 1.3

Maximum number of input tokens and confusion about 1.5T tokens

Multilingual capabilities

Error message and fix related to adding device_map='auto'

Request for specs

Related Articles

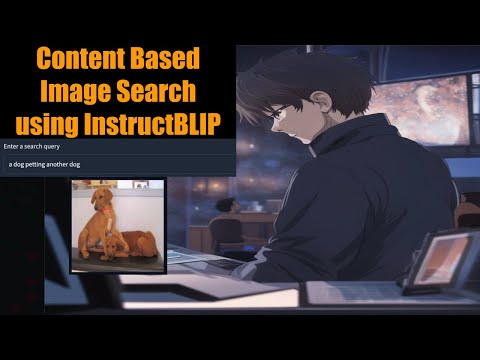

Revolutionizing Image Description Generation with InstructBLIP and Hugging Face Transformers

Abhishek Thakur explores cutting-edge image description generation using InstructBLIP and Hugging Face Transformers. Leveraging Vicuna and Flan-T5, the team crafts detailed descriptions, saves them in a CSV file, and creates embeddings for semantic search, culminating in a user-friendly Gradio demo.

Ultimate Guide: Creating AI QR Codes with Python & Hugging Face Library

Learn how to create AI-generated QR codes using Python and the Hugging Face library, Diffusers, in this exciting tutorial by Abhishek Thakur. Explore importing tools, defining models, adjusting parameters, and generating visually stunning QR codes effortlessly.

Mastering LLM Training in 50 Lines: Abhishek Thakur's Expert Guide

Abhishek Thakur demonstrates training LLMs in 50 lines of code using the "alpaca" dataset. He emphasizes data formatting consistency for optimal results, showcasing the process on his home GPU. Explore the world of AI training with key libraries and fine-tuning techniques.