Unveiling Data Privacy Risks: Manipulating Pre-Trained Models for Data Theft

In this video, Yannic Kilcher walks through a paper by Shanglun Feng and Florian Tramèr of ETH Zurich that strikes at the core of data privacy. The paper shows how an attacker can steal sensitive fine-tuning data by distributing corrupted pre-trained models such as BERT and Vision Transformers (ViT). The attack is still at an early stage, but it points to a real threat to the security of personal data. Consider a user who fine-tunes a downloaded model on confidential text to build a classifier that detects personal information. Even if the fine-tuned model is exposed only through an API, the paper describes a black-box attack that can extract the fine-tuning data without exploiting any conventional security vulnerability.

Because the attacker controls the pre-trained weights, they can tamper with them so that the victim's own fine-tuning imprints training data into the model, which opens the door to serious privacy breaches. Such a backdoored model could enter the supply chain through an attacker who first builds a reputation on a platform like Hugging Face and then publishes a corrupted checkpoint. The attacker plants "data traps" in a layer of the pre-trained model: when the victim fine-tunes with SGD, a gradient update writes an individual data point into a trap's weights, from which it can later be reconstructed. The approach also challenges existing notions of privacy guarantees, pushing differentially private training to the limits of what its theoretical guarantees allow.
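A toy sketch helps make the data-trap idea concrete. It is a minimal illustration under stated assumptions, not the paper's construction: a single ReLU neuron, a hypothetical loss L = a·h whose upstream gradient is a constant `a`, plain SGD with batch size 1, and non-negative inputs. It relies on a standard identity: for z = w·x + b, the gradient with respect to w is the upstream gradient times x, while the gradient with respect to b is the upstream gradient alone, so the weight change divided by the bias change returns x. A large `a` makes the first update push the bias far negative, which is one simple way to get single-use "latch" behavior. The names `sgd_step`, `fine_tune`, and `reconstruct` are hypothetical.

```python
# Toy "data trap" sketch. Assumptions (hypothetical, not from the paper):
#   - one ReLU neuron h = relu(w . x + b) inside the backdoored layer
#   - a toy loss L = a * h, so dL/dh = a (a stands in for a large downstream weight)
#   - plain SGD, batch size 1, learning rate lr
#   - non-negative inputs (e.g. pixel intensities in [0, 1])

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def sgd_step(w, b, x, a, lr):
    """Apply one SGD step to the trap neuron for a single input x."""
    if dot(w, x) + b <= 0:
        return w, b  # ReLU inactive: zero gradient, nothing is written
    # With the ReLU active: dL/dw = a * x and dL/db = a.
    new_w = [wi - lr * a * xi for wi, xi in zip(w, x)]
    new_b = b - lr * a  # a large lr * a drives b far negative: the "latch"
    return new_w, new_b

def fine_tune(w, b, inputs, a, lr):
    """The victim's fine-tuning loop, as seen by this single neuron."""
    for x in inputs:
        w, b = sgd_step(w, b, x, a, lr)
    return w, b

def reconstruct(w0, b0, w1, b1):
    """Attacker's view: recover the captured input as delta_w / delta_b."""
    db = b1 - b0
    if db == 0:
        return None  # the trap never fired
    return [(wi1 - wi0) / db for wi0, wi1 in zip(w0, w1)]

w0, b0 = [1.0, 1.0, 1.0], -0.5  # initial trap weights chosen by the attacker
private = [[0.2, 0.4, 0.1], [0.9, 0.8, 0.7], [0.5, 0.5, 0.5]]

# Strong latch: only the first input is imprinted.
w1, b1 = fine_tune(w0, b0, private, a=100.0, lr=1.0)
recovered = reconstruct(w0, b0, w1, b1)
print(recovered)  # ≈ [0.2, 0.4, 0.1]

# Weak latch: the trap keeps firing on every input.
w2, b2 = fine_tune(w0, b0, private, a=0.01, lr=1.0)
mixed = reconstruct(w0, b0, w2, b2)
print(mixed)
```

With the small value of `a`, the latch never closes and the reconstruction comes out as the average of all three inputs, which is why a trap needs to shut itself off after its first activation.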

With this method, the attacker can extract individual training examples, which highlights the need for stronger security measures around machine learning models. Because the technique stores training data directly in the model's weights, it can be combined with model-stealing attacks so that it still works when only input-output pairs are accessible. The research underscores how important it is to safeguard sensitive information as pre-trained models are widely shared and reused.

Image copyright YouTube

Watch Privacy Backdoors: Stealing Data with Corrupted Pretrained Models (Paper Explained) on YouTube

Viewer Reactions for Privacy Backdoors: Stealing Data with Corrupted Pretrained Models (Paper Explained)

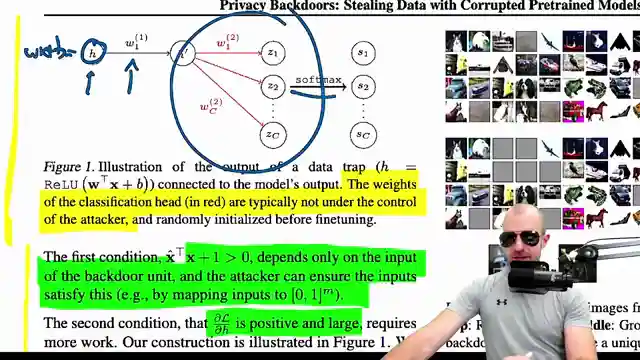

Data traps embedded within model's weights

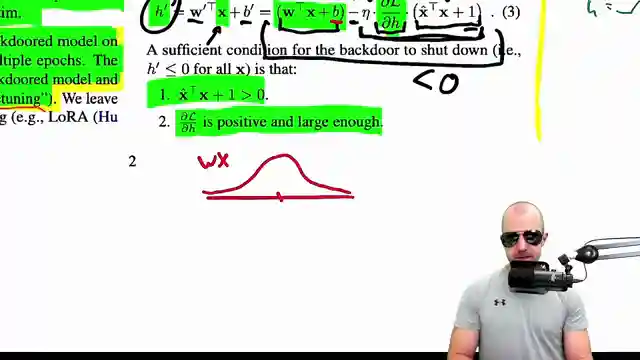

Single-use activation for capturing information

Latch mechanism to prevent further updates

Gradient manipulation for specific data points

Target selection based on data distribution

Bypassing defenses like layer normalization

Reconstructing data from final model weights

Impact on privacy and on differential privacy guarantees

Vulnerability in machine learning model supply chain

Susceptibility to mitigation techniques, and the need to calibrate traps to the target data distribution
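The "target selection" and "calibration" points can be illustrated with one simple rule, which is an assumption here rather than the paper's documented procedure: project an auxiliary sample that the attacker believes resembles the victim's data onto the trap's weight direction, and set the bias at a high quantile of those projections so the trap fires on only a small fraction of inputs. A rare trigger makes it likely that a trap captures a single example before its latch closes. The uniform toy distribution, `calibrate_bias`, `fires`, and `fire_rate` are hypothetical.

```python
import random

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def calibrate_bias(w, aux_inputs, fire_rate):
    """Hypothetical calibration: choose b so that about `fire_rate` of the
    auxiliary inputs satisfy w . x + b > 0 (i.e. would activate the trap)."""
    scores = sorted(dot(w, x) for x in aux_inputs)
    # Threshold at the (1 - fire_rate) quantile of the projected scores.
    k = min(int(len(scores) * (1 - fire_rate)), len(scores) - 1)
    return -scores[k]

def fires(w, b, x):
    """True if input x would activate the trap neuron."""
    return dot(w, x) + b > 0

random.seed(0)
dim = 8
# Attacker's auxiliary sample, assumed to resemble the victim's data
# (toy choice: uniform in [0, 1]^dim).
aux = [[random.random() for _ in range(dim)] for _ in range(2000)]
# Victim's private fine-tuning data, drawn from the same toy distribution.
victim = [[random.random() for _ in range(dim)] for _ in range(2000)]

w = [random.gauss(0.0, 1.0) for _ in range(dim)]  # random trap direction
b = calibrate_bias(w, aux, fire_rate=0.01)

victim_rate = sum(fires(w, b, x) for x in victim) / len(victim)
print(f"trap fires on {victim_rate:.2%} of victim inputs")
```

If the victim's data is distributed differently from `aux`, the realized firing rate drifts away from `fire_rate`, which is the calibration sensitivity noted in the list above.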

Related Articles

Decoding Large Language Models: Anthropic's Transformer Circuit Exploration

Anthropic explores the biology of large language models through transformer circuits, using circuit tracing and transcoders for interpretability. Learn how these models make decisions and handle tasks like poetry without explicit programming.

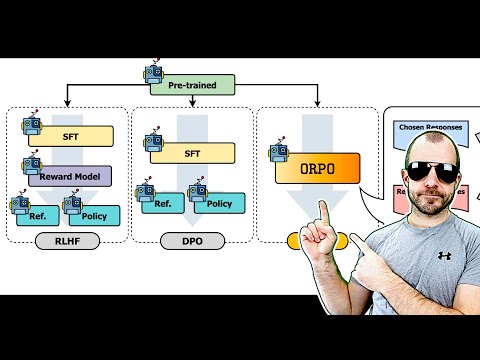

Revolutionizing AI Alignment: Orpo Method Unveiled

Explore Orpo, a groundbreaking AI optimization method aligning language models with instructions without a reference model. Streamlined and efficient, Orpo integrates supervised fine-tuning and odds ratio loss for improved model performance and user satisfaction. Experience the future of AI alignment today.

Tech Roundup: Meta's Chip, Google's Robots, Apple's AI Deal, OpenAI Leak, and More!

Meta unveils powerful new chip; Google DeepMind introduces low-cost robots; Apple signs $50M deal for AI training images; OpenAI researchers embroiled in leak scandal; Adobe trains AI on Mid Journey images; Canada invests $2.4B in AI; Google releases cutting-edge models; Hugging Face introduces iFix 2 Vision language model; Microsoft debuts Row one model; Apple pioneers Faret UI language model for mobile screens.

Unveiling OpenAI's GPT-4: Controversies, Departures, and Industry Shifts

Explore the latest developments with OpenAI's GPT-4 Omni model, its controversies, and the departure of key figures like Ilia Sver and Yan Le. Delve into the balance between AI innovation and commercialization in this insightful analysis by Yannic Kilcher.