Revolutionizing Language Modeling: Efficient Tary Operations Unveiled

- Authors

- Published on

- Published on

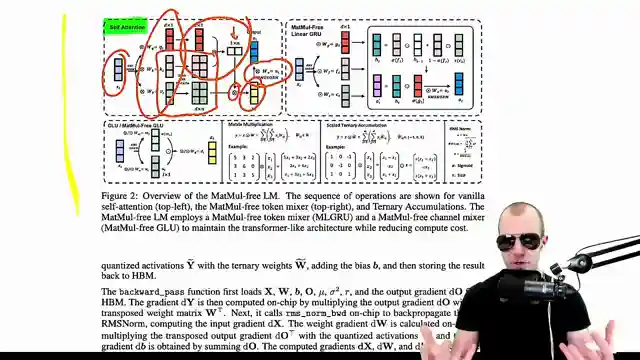

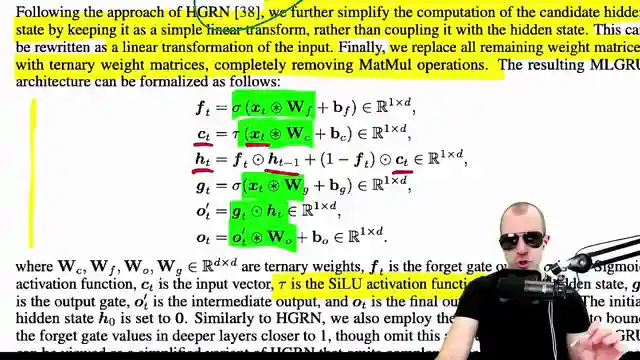

In this riveting episode from Yannic Kilcher, we delve into the world of cutting-edge language modeling, where a team of researchers from UC Santa Cruz, UC Davis, and Loxy Tech are shaking things up. They've tossed out traditional matrix multiplications in large language models, opting for more efficient tary accumulators and parallelizable tary recurrent networks. It's like swapping your trusty sedan for a sleek new sports car - faster, more agile, and ready to take on the computational racetrack. By combining ideas from papers like bitnet and RW KV, they've crafted a matrix multiplication-free model that promises to revolutionize efficiency in the world of language processing.

The team's experiments reveal a fascinating twist: a tipping point where these new models outshine the current heavyweights in terms of efficiency. It's a bit like watching an underdog rise through the ranks to challenge the established champions - a thrilling prospect for the future of language modeling. But, as with any epic journey, there are hurdles to overcome. The infamous hardware lottery poses a formidable obstacle, prompting the team to consider custom FPGA hardware as a potential game-changer. It's a bold move, akin to equipping your car with turbo boosters to outpace the competition.

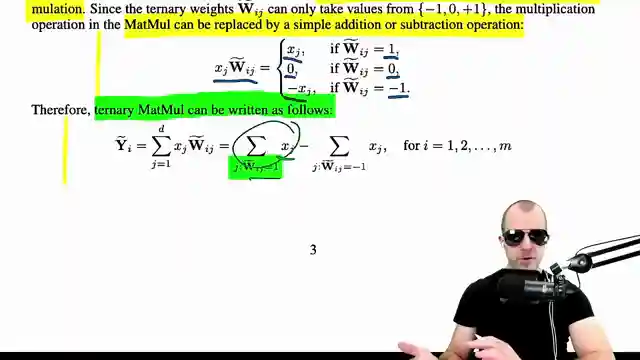

Matrix multiplication is the beating heart of neural networks, powering everything from Transformers to dense MLPs. The team's proposal to use tary weights - restricting values to -1, 0, or 1 - is a game-changer. It's like streamlining your engine to run on pure adrenaline, cutting out the unnecessary fluff for a leaner, meaner performance. By simplifying operations to selections and additions, they're paving the way for a new era of hardware efficiency. This approach not only streamlines computations but also challenges the status quo, hinting at a future where traditional floating-point multiplications may become a relic of the past.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Scalable MatMul-free Language Modeling (Paper Explained) on Youtube

Viewer Reactions for Scalable MatMul-free Language Modeling (Paper Explained)

Problem: Matrix multiplications are resource-intensive and require specialized hardware like GPUs.

Proposed Solution: Eliminating MatMuls entirely from large language models while maintaining competitive performance.

Key Findings: Performance of MatMul-free models is on par with state-of-the-art Transformers at scales up to 2.7 billion parameters.

Author's Opinion: Exciting and promising for edge computing and energy-efficient AI, but skepticism remains about surpassing traditional Transformers in performance.

FPGA Angle: Research proposes replacing feed-forward operations in large language models with more computationally efficient operations, particularly using ternary weights.

Efficiency: The proposed architecture significantly reduces memory usage and latency, with potential for even greater efficiency gains on custom hardware like FPGAs.

Straight-Through Estimator: Interest in understanding how it allows the models to train efficiently.

Potential Improvements: Suggestions to further adapt the algorithm for better performance by factoring matrices into primes and summing them in parallel.

Trade-offs: Concerns about the trade-offs in the architecture and reliance on quantization for training.

Comparison: Discussion on how the model compares to other solutions in terms of speed and precision.

Related Articles

Decoding Large Language Models: Anthropic's Transformer Circuit Exploration

Anthropic explores the biology of large language models through transformer circuits, using circuit tracing and transcoders for interpretability. Learn how these models make decisions and handle tasks like poetry without explicit programming.

Revolutionizing AI Alignment: Orpo Method Unveiled

Explore Orpo, a groundbreaking AI optimization method aligning language models with instructions without a reference model. Streamlined and efficient, Orpo integrates supervised fine-tuning and odds ratio loss for improved model performance and user satisfaction. Experience the future of AI alignment today.

Tech Roundup: Meta's Chip, Google's Robots, Apple's AI Deal, OpenAI Leak, and More!

Meta unveils powerful new chip; Google DeepMind introduces low-cost robots; Apple signs $50M deal for AI training images; OpenAI researchers embroiled in leak scandal; Adobe trains AI on Mid Journey images; Canada invests $2.4B in AI; Google releases cutting-edge models; Hugging Face introduces iFix 2 Vision language model; Microsoft debuts Row one model; Apple pioneers Faret UI language model for mobile screens.

Unveiling OpenAI's GPT-4: Controversies, Departures, and Industry Shifts

Explore the latest developments with OpenAI's GPT-4 Omni model, its controversies, and the departure of key figures like Ilia Sver and Yan Le. Delve into the balance between AI innovation and commercialization in this insightful analysis by Yannic Kilcher.