AI Legal Research Tools: Hallucination Study & RAG Impact

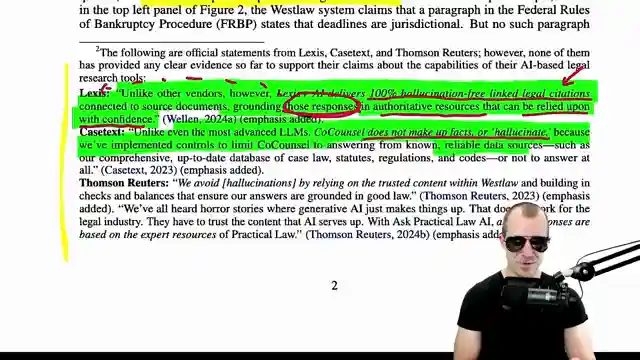

Today on Yannic Kilcher's channel, we look at AI legal research tools through a study by Stanford and Yale researchers. Yannic, the CTO of the legal tech company DeepJudge, explains how AI, and generative models like GPT in particular, is being integrated into the legal domain. These tools aim to answer legal questions using publicly available data such as statutes and case law. The study, however, finds a troubling problem: "hallucinations," meaning inaccurate or fabricated statements produced by the language models inside these systems, remain common.

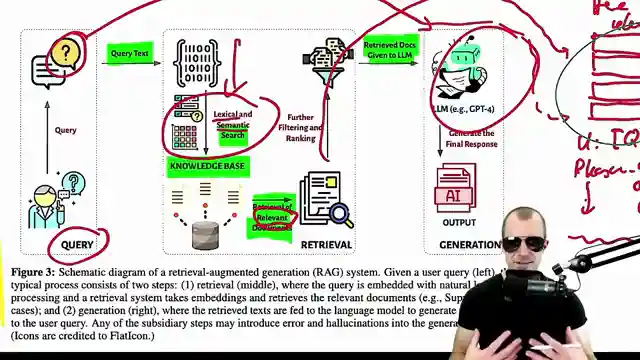

Yannic doesn't hold back in criticizing what he sees as shady practices by both researchers and companies in the legal tech industry. He points to the unrealistic expectations placed on AI systems in legal research and argues that applying a language model like GPT directly to such tasks is a flawed approach. The study examines "retrieval augmented generation" (RAG), a technique meant to reduce hallucinations by pairing a language model with a search engine so that answers to legal queries are grounded in retrieved documents.

The study evaluates several AI products, as well as GPT-4, on legal research tasks. It compares systems with and without RAG and highlights a significant improvement in accuracy when RAG is used. Yannic stresses the importance of context and reasoning in legal question answering, which language models on their own struggle to handle. By incorporating data retrieved from a knowledge base, RAG makes AI-generated responses in the legal field more reliable.
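To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is not the pipeline of any product in the study. The three-passage corpus, the word-overlap scoring, and the names `CORPUS`, `retrieve`, and `build_prompt` are all assumptions made for illustration, and the final call to a language model is left out.

```python
import re
from collections import Counter

# Hypothetical mini knowledge base; a real tool would index statutes and case law.
CORPUS = {
    "doc-1": "A contract requires offer, acceptance, and consideration.",
    "doc-2": "The statute of limitations for written contracts is set by state law.",
    "doc-3": "Negligence requires duty, breach, causation, and damages.",
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def score(query, passage):
    """Count the word tokens shared by the query and the passage."""
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[t], p[t]) for t in q)


def retrieve(query, corpus, k=2):
    """Return up to k (doc_id, text) pairs with non-zero overlap, best first."""
    ranked = sorted(
        corpus.items(), key=lambda item: score(query, item[1]), reverse=True
    )
    return [(doc_id, text) for doc_id, text in ranked[:k] if score(query, text) > 0]


def build_prompt(query, passages):
    """Assemble a prompt that asks the model to answer only from the sources."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by id. "
        "If they do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


question = "What does negligence require?"
hits = retrieve(question, CORPUS, k=1)
print(build_prompt(question, hits))
```

The prompt that comes out would then be sent to a language model. Retrieval only narrows what the model sees. It does not guarantee that the model reads the sources correctly, which is why the accuracy of such systems still needs to be measured.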

Image copyright YouTube

Watch Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools (Paper Explained) on YouTube

Viewer Reactions for Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools (Paper Explained)

Use of LLMs in Law and the need for human oversight

Critique of a Stanford paper examining AI-powered legal research tools

Comparison of legal research tools with and without RAG

Limitations of LLMs in complex reasoning tasks

Importance of human-AI collaboration in legal research

Challenges in reducing hallucinations in GPT-based models

Marketing accuracy of Lexis and Apple

Discussion on the usefulness of RAG in legal applications

Concerns about the effectiveness of RAG and LLMs in legal research

Questioning the value and future of LLMs in legal tasks

Related Articles

Decoding Large Language Models: Anthropic's Transformer Circuit Exploration

Anthropic explores the biology of large language models through transformer circuits, using circuit tracing and transcoders for interpretability. Learn how these models make decisions and handle tasks like poetry without explicit programming.

Revolutionizing AI Alignment: ORPO Method Unveiled

Explore ORPO, an optimization method that aligns language models with instructions without a reference model. Streamlined and efficient, ORPO combines supervised fine-tuning with an odds ratio loss for improved model performance and user satisfaction. Experience the future of AI alignment today.

Tech Roundup: Meta's Chip, Google's Robots, Apple's AI Deal, OpenAI Leak, and More!

Meta unveils powerful new chip; Google DeepMind introduces low-cost robots; Apple signs $50M deal for AI training images; OpenAI researchers embroiled in leak scandal; Adobe trains AI on Midjourney images; Canada invests $2.4B in AI; Google releases cutting-edge models; Hugging Face introduces Idefics2 vision-language model; Microsoft debuts Rho-1 model; Apple pioneers Ferret-UI language model for mobile screens.

Unveiling OpenAI's GPT-4: Controversies, Departures, and Industry Shifts

Explore the latest developments with OpenAI's GPT-4 Omni (GPT-4o) model, its controversies, and the departure of key figures like Ilya Sutskever and Jan Leike. Delve into the balance between AI innovation and commercialization in this analysis by Yannic Kilcher.