Enhancing Language Model Performance: Microsoft's PromptWizard Revolution

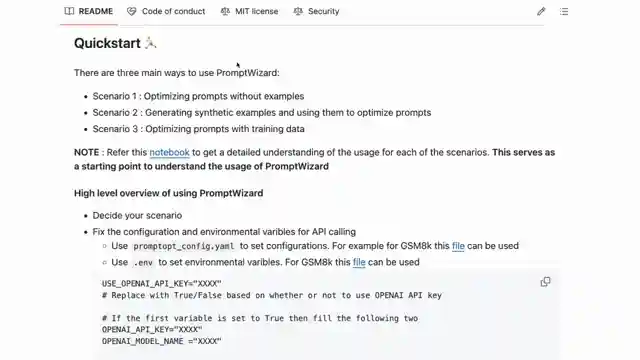

In this video, Sam Witteveen explains why optimizing prompts matters so much for large language models (LLMs). He shows how the quality of the context and input a model receives directly shapes the quality of its output. He then introduces PromptWizard, a Microsoft framework for prompt optimization that automates and simplifies the process, with the aim of improving language model performance.

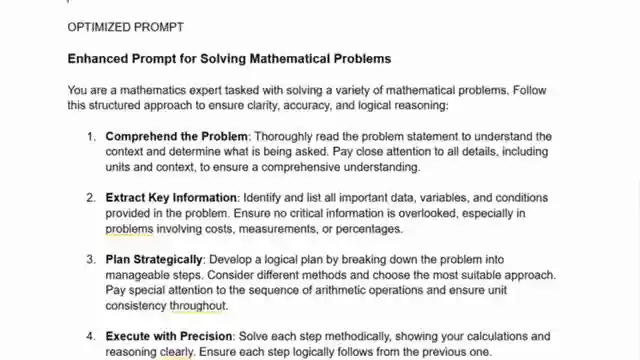

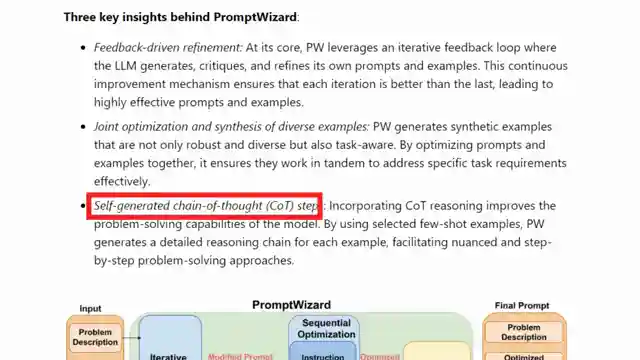

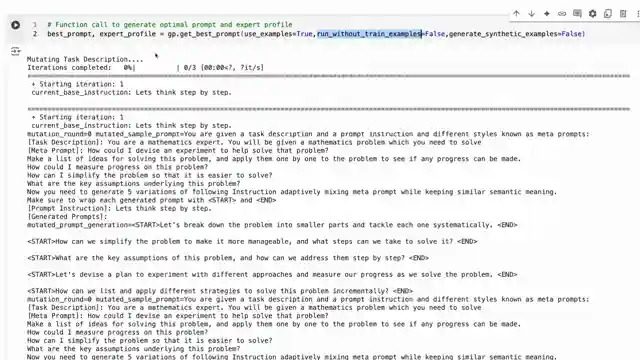

PromptWizard goes beyond a typical prompt tool by combining three techniques: feedback-driven refinement, where feedback on how a prompt performs guides its next revision; joint optimization of the task instruction and the in-context learning examples, so that both evolve together over successive iterations; and self-generated Chain of Thought (CoT) reasoning steps. Together, these represent Microsoft's attempt to tackle prompt optimization head-on and change how people write prompts for language models.
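To make the feedback-driven idea concrete, here is a minimal, self-contained Python sketch of an instruction-refinement loop. It is not PromptWizard's code or API: `toy_llm`, `critique`, and `refine` are hypothetical stand-ins for real model calls, and the toy "model" simply answers an example correctly when the instruction contains the hint phrases that example needs. The loop scores the current instruction, collects feedback from the failures, proposes revised candidates, and keeps a candidate only if it scores higher. It covers only the instruction half of the joint optimization; example selection is left out.

```python
from dataclasses import dataclass

# Hypothetical hint phrases the toy model "needs" to see in the instruction.
STEP = "Think step by step."
CHECK = "Verify the final answer."


@dataclass
class Example:
    question: str
    answer: str
    needs: frozenset  # hint phrases required for the toy model to succeed


def toy_llm(instruction: str, ex: Example) -> str:
    # Hypothetical stand-in for a model call: correct only if every
    # required hint phrase appears in the instruction.
    return ex.answer if all(h in instruction for h in ex.needs) else "unknown"


def score(instruction: str, examples: list[Example]) -> float:
    # Fraction of examples the instruction gets right.
    hits = sum(toy_llm(instruction, ex) == ex.answer for ex in examples)
    return hits / len(examples)


def critique(instruction: str, examples: list[Example]) -> set[str]:
    # Feedback step (hypothetical): gather the hints missing for failed examples.
    missing: set[str] = set()
    for ex in examples:
        if toy_llm(instruction, ex) != ex.answer:
            missing |= {h for h in ex.needs if h not in instruction}
    return missing


def refine(instruction: str, feedback: set[str]) -> list[str]:
    # Mutation step (hypothetical): one revised candidate per piece of feedback.
    return [f"{instruction} {hint}" for hint in sorted(feedback)]


def optimize(seed: str, examples: list[Example], rounds: int = 5) -> tuple[str, float]:
    best, best_score = seed, score(seed, examples)
    for _ in range(rounds):
        feedback = critique(best, examples)
        if not feedback:
            break  # every example passes; nothing left to fix
        for candidate in refine(best, feedback):
            s = score(candidate, examples)
            if s > best_score:  # keep only strict improvements
                best, best_score = candidate, s
    return best, best_score


examples = [
    Example("2+3?", "5", frozenset({STEP})),
    Example("12*4?", "48", frozenset({STEP, CHECK})),
    Example("Is 17 prime?", "yes", frozenset({CHECK})),
]
best, best_score = optimize("Answer the question.", examples)
print(best)
print(best_score)
```

In a real system each helper would be an LLM call, so the number of rounds and candidates directly drives token usage. Requiring a strict score improvement keeps the loop from churning through equally good rewrites.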

As the video goes deeper into PromptWizard's inner workings, it shows how the framework operates step by step, from refining prompt instructions to generating diverse synthetic examples. Because the approach is iterative and driven by feedback, prompt optimization becomes an ongoing process rather than a one-off edit: the results of each round inform the next version of the prompt. Frameworks like PromptWizard point toward prompt engineering that relies less on manual trial and error.
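The other half of the joint optimization is choosing in-context examples and attaching reasoning to them. The sketch below is a hypothetical illustration, not PromptWizard's actual selection strategy: it assumes each candidate example has already been scored against the current prompt (`solved`) and already carries a chain-of-thought explanation (`reasoning`), which in practice would come from the model. The `select_shots` heuristic puts failed examples first, since they carry the most feedback, and fills the remaining slots with solved ones so the few-shot set stays mixed; `build_prompt` then assembles the instruction, the examples with their reasoning, and the new query.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    question: str
    reasoning: str  # chain-of-thought text (assumed to be model-generated)
    answer: str
    solved: bool    # did the current prompt answer this example correctly?


def select_shots(pool: list[Shot], k: int) -> list[Shot]:
    # Hypothetical heuristic: failures first, then solved examples, capped at k.
    failures = [s for s in pool if not s.solved]
    successes = [s for s in pool if s.solved]
    return (failures + successes)[:k]


def build_prompt(instruction: str, shots: list[Shot], query: str) -> str:
    # Instruction, then each example with its reasoning and answer, then the query.
    parts = [instruction, ""]
    for s in shots:
        parts += [f"Q: {s.question}", f"Reasoning: {s.reasoning}", f"A: {s.answer}", ""]
    parts += [f"Q: {query}", "Reasoning:"]
    return "\n".join(parts)


pool = [
    Shot("2+3?", "2 plus 3 is 5.", "5", True),
    Shot("12*4?", "12 times 4 is 48.", "48", False),
    Shot("Is 17 prime?", "17 has no divisors other than 1 and itself.", "yes", True),
]
shots = select_shots(pool, k=2)
prompt = build_prompt("Think step by step.", shots, "7*6?")
print(prompt)
```

Ending the prompt with `Reasoning:` nudges the model to produce its own chain of thought before the answer, mirroring the format of the examples.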

Image copyright YouTube

Watch "How to OPTIMIZE your prompts for better Reasoning!" on YouTube

Viewer Reactions for "How to OPTIMIZE your prompts for better Reasoning!"

Comparison between PromptWizard and other tools such as TextGrad and DSPy

Concerns about token usage and cost

Feasibility of developing a similar prompt optimization tool independently

Handling of real-time context variables in prompts

Use of large prompts in production and preference for multiple smaller prompts

Request for examples of human prompt improvement

Cost and token usage of PromptWizard

Effectiveness of PromptWizard compared to fine-tuning a model

Use of a genetic algorithm in the iterative optimization process

Difficulty that models under 8B parameters have with long prompts
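Several viewers asked about token usage and cost. A rough estimate for a generic refine-and-score loop can be sketched as follows, assuming one scoring call per candidate per evaluation example plus one critique call per round. The function name and every input value are hypothetical; PromptWizard's real call pattern is not described here, and the numbers are illustrative, not measurements.

```python
def estimate_optimization_cost(
    rounds: int,
    candidates_per_round: int,
    eval_examples: int,
    tokens_per_call: int,
    usd_per_million_tokens: float,
) -> tuple[int, int, float]:
    # Assumed call pattern: score every candidate on every evaluation
    # example, plus one critique call per round.
    calls = rounds * (candidates_per_round * eval_examples + 1)
    tokens = calls * tokens_per_call
    cost = tokens / 1_000_000 * usd_per_million_tokens
    return calls, tokens, cost


# Illustrative, hypothetical inputs (not measured PromptWizard figures).
calls, tokens, cost = estimate_optimization_cost(
    rounds=5,
    candidates_per_round=10,
    eval_examples=25,
    tokens_per_call=1_000,
    usd_per_million_tokens=1.0,
)
print(calls, tokens, round(cost, 3))
```

With these inputs the loop makes 1,255 calls and uses 1,255,000 tokens. Because candidates and evaluation examples multiply, increasing either one raises the cost far faster than adding a single extra round of critique.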
