Revolutionize AI Development with smolagents: Hugging Face's Innovative Approach

- Authors

- Published on

- Published on

In this episode, the channel dives into smolagents, a new library from Hugging Face that aims to change the way agents are built. It is designed to work with the wide range of open-source models on the Hugging Face Hub, including Qwen2.5-Coder-32B. It also frames agents in terms of agency levels, meaning how much the LLM's output controls the program's flow. At the low end, the model only picks between fixed branches. At the high end, it decides which step to take next and when to stop. This makes it possible to build agents that adapt their behavior to what they observe at run time.
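The idea of agency levels can be made concrete with a small sketch. This is plain Python, not smolagents code. The hypothetical `fake_llm` function stands in for a model. In `route`, the model's output only selects a branch. In `multi_step`, the model's output also decides whether the program keeps looping. All names here are illustrative assumptions.

```python
from typing import Callable


# Hypothetical stand-in for an LLM: deterministic replies so the example is reproducible.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("classify:"):
        return "math" if any(ch.isdigit() for ch in prompt) else "chat"
    # Inside the loop, ask for another step until three observations exist.
    return "continue" if prompt.count("obs") < 3 else "stop"


def route(query: str, llm: Callable[[str], str]) -> str:
    """Router: the model picks a branch, but the overall program flow is fixed."""
    branch = llm(f"classify: {query}")
    return f"handled by {branch} branch"


def multi_step(llm: Callable[[str], str], max_steps: int = 10) -> list[str]:
    """Multi-step agent: the model's output decides whether the loop continues."""
    memory: list[str] = []
    for step in range(max_steps):
        decision = llm("history: " + " ".join(memory))
        if decision == "stop":
            break
        memory.append(f"obs{step}")
    return memory


print(route("what is 2 + 2?", fake_llm))  # handled by math branch
print(multi_step(fake_llm))  # ['obs0', 'obs1', 'obs2']
```

The `max_steps` cap matters at the higher agency level. Once the model controls the loop, a hard limit is the simplest guard against an agent that never decides to stop.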

What sets smolagents apart is its emphasis on code agents. Instead of emitting JSON tool calls, these agents write their actions as Python code, which the framework then executes. Running that code in a sandboxed environment gives developers a safer platform for experimentation. Code execution is a first-class feature of the library, but smolagents also keeps traditional tool-calling agents. Developers can therefore pick whichever style suits the task when building intelligent, adaptive agents.
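The difference between code agents and tool-calling agents can be sketched without the library. A tool-calling agent spends one step per JSON action, and each action names a single tool. A code agent can chain several tool calls in one Python snippet and keep intermediate values in variables. The tools and the helpers `run_json_action` and `run_code_action` are hypothetical. The restricted `exec` namespace only keeps the sketch short and is not a real sandbox.

```python
import json


# Hypothetical tools an agent could call.
def get_price(item: str) -> float:
    prices = {"apple": 0.5, "pear": 0.75}
    return prices[item]


def multiply(a: float, b: float) -> float:
    return a * b


TOOLS = {"get_price": get_price, "multiply": multiply}


def run_json_action(action: str) -> object:
    """Tool-calling style: one JSON action invokes exactly one tool."""
    call = json.loads(action)
    return TOOLS[call["name"]](**call["arguments"])


def run_code_action(code: str) -> object:
    """Code-agent style: one snippet may chain several tool calls.

    exec() with a trimmed namespace is NOT a security sandbox; it only keeps
    the illustration short.
    """
    namespace = {"__builtins__": {}, **TOOLS}
    exec(code, namespace)
    return namespace["result"]


# Tool-calling agent: two separate steps, passing the intermediate value by hand.
price = run_json_action('{"name": "get_price", "arguments": {"item": "pear"}}')
total = run_json_action(json.dumps({"name": "multiply", "arguments": {"a": price, "b": 4}}))

# Code agent: a single step that composes four tool calls.
snippet = "result = multiply(get_price('pear'), 4) + multiply(get_price('apple'), 2)"
print(total, run_code_action(snippet))  # 3.0 4.0
```

Composability is the main argument for code actions. The model can express loops, variables, and nested calls directly, rather than spreading them across several round trips.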

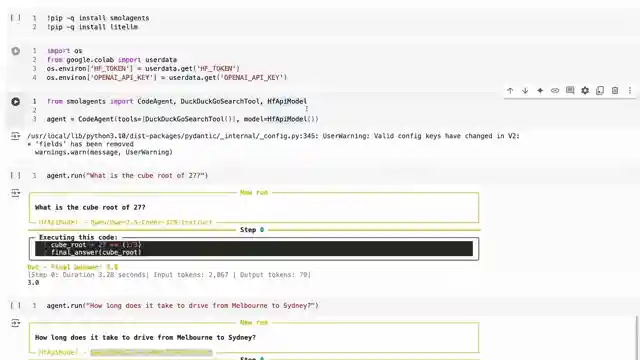

smolagents is the successor to Transformers Agents and needs very little code to set up. Users can import ready-made components such as the DuckDuckGoSearchTool and the HfApiModel class to get started quickly, and they can also define custom tools and plug in other models. The Colab example uses OpenAI's GPT-3 model and shows how simply an agent can be set up for tasks such as answering math questions or retrieving up-to-date information. The agent may only import Python libraries from an allowed list. Even so, it shows a robust problem-solving approach, trying different strategies and adapting when one of them fails.
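The restriction on allowed Python libraries works roughly like an allowlist. If generated code imports a module outside the list, the framework rejects it and reports the error, which gives the agent a chance to try another approach. The sketch below imitates this with the standard `ast` module. It is not smolagents' implementation. The allowlist contents, `check_imports`, `solve`, and the scripted list of attempts are hypothetical, and a real agent would regenerate code from the error instead of reading from a fixed list. In smolagents itself, extra modules are enabled through the `additional_authorized_imports` argument of `CodeAgent`.

```python
import ast

# Hypothetical allowlist for this sketch only.
ALLOWED = {"math", "statistics", "datetime"}


def check_imports(code: str, allowed: set[str] = ALLOWED) -> None:
    """Raise ImportError if the snippet imports a module outside the allowlist."""
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]  # relative imports have module=None
        else:
            continue
        for name in names:
            if name.split(".")[0] not in allowed:
                raise ImportError(f"Import of '{name}' is not allowed")


def solve(attempts: list[str]) -> tuple[float, list[str]]:
    """Try each generated snippet in turn, collecting errors as feedback."""
    errors: list[str] = []
    for code in attempts:
        try:
            check_imports(code)
            namespace: dict = {}
            exec(code, namespace)  # not sandboxed; illustration only
            return namespace["result"], errors
        except Exception as exc:  # record the failure and move to the next strategy
            errors.append(str(exc))
    raise RuntimeError("all attempts failed: " + "; ".join(errors))


# Scripted "model" attempts: the first uses a disallowed library, the second does not.
attempts = [
    "import numpy as np\nresult = float(np.sqrt(2) ** 2)",
    "import math\nresult = round(math.sqrt(2) ** 2, 6)",
]
result, feedback = solve(attempts)
print(result, feedback)  # 2.0 ["Import of 'numpy' is not allowed"]
```

The check runs before any code executes, so the rejected snippet never imports numpy at all. The error string is exactly the kind of observation an agent can use to switch strategies.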

Image copyright YouTube

Watch smolagents - HuggingFace's NEW Agent Framework on YouTube

Viewer Reactions for smolagents - HuggingFace's NEW Agent Framework

Request for a video on a multi-agent framework with a "supervisor" agent

Comparison of smolagents, LangGraph agents, and CrewAI in terms of flexibility and future use

Questions about the best approach for building a smart chatbot that handles different sales scenarios

Envisioning an agents-and-tools store similar to the Apple App Store and Google Play

Concerns about debugging broken code with the smolagents framework

Inquiry about options besides HfApiModel and LiteLLM for using LLMs

Comment on the novelty of Hugging Face's approach of dynamically running Python code

Pronunciation of 'smolagent' as SMOELA-gent

Question about why a new LLM framework lacks async/await support

Comment on frequent failures, possibly because the models were not suited to the tasks
