Deploying Large Language Models with Hugging Face's Inference Endpoints

In this video, Abhishek Thakur walks through deploying large language models such as Falcon 40B with Hugging Face's Inference Endpoints. Inference Endpoints offer a secure way to deploy Transformers models on dedicated, auto-scaling infrastructure managed by Hugging Face. Each endpoint can be protected, private, or public, so you choose the level of security that suits your needs.

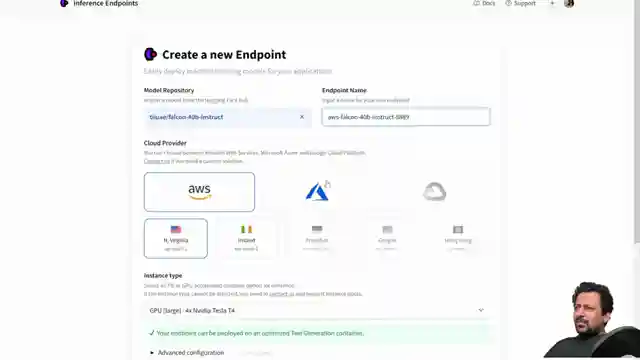

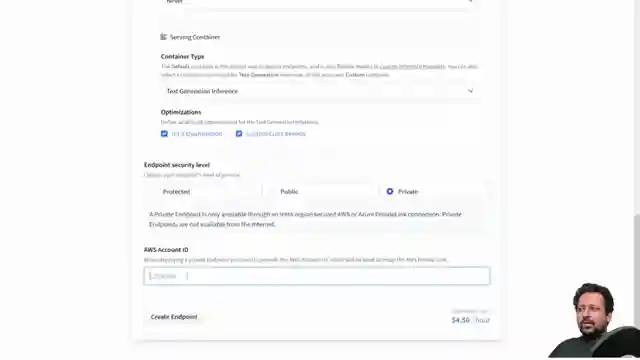

Abhishek walks through the deployment process, starting on the Hugging Face page, where you select the model you want from the repository. From there you customize the deployment: choose a cloud provider (Microsoft Azure or AWS), select a region, and configure advanced settings such as auto-scaling and container type. The last step is setting the endpoint's security level, for example protected, which requires a Hugging Face token, or public, which is open to anyone.
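
The options described above can be collected into a small configuration object before anything is deployed. The following is a minimal sketch, not the method shown in the video: the `EndpointConfig` class, its field names, the default region, and the `tiiuae/falcon-40b` repository id are all assumptions chosen for illustration. Only the provider choices and security levels come from the article.

```python
from dataclasses import dataclass, asdict

# Providers and security levels named in the article.
# The lowercase string spellings are assumptions.
VENDORS = ("aws", "azure")
SECURITY_LEVELS = ("protected", "private", "public")


@dataclass
class EndpointConfig:
    """Hypothetical container for the choices made in the deployment form."""

    name: str
    repository: str               # model repo id (assumed format: "org/model")
    vendor: str = "aws"           # cloud provider
    region: str = "us-east-1"     # assumed default region
    security: str = "protected"   # protected, private, or public
    min_replica: int = 1          # auto-scaling lower bound
    max_replica: int = 1          # auto-scaling upper bound

    def validate(self) -> None:
        """Raise ValueError if any option falls outside the allowed choices."""
        if self.vendor not in VENDORS:
            raise ValueError(f"vendor must be one of {VENDORS}")
        if self.security not in SECURITY_LEVELS:
            raise ValueError(f"security must be one of {SECURITY_LEVELS}")
        if not 0 <= self.min_replica <= self.max_replica:
            raise ValueError("need 0 <= min_replica <= max_replica")


# Example: a protected Falcon 40B endpoint that can scale from 1 to 2 replicas.
config = EndpointConfig(
    name="falcon-40b-demo",
    repository="tiiuae/falcon-40b",  # assumed repo id
    max_replica=2,
)
config.validate()
print(asdict(config))
```

Validating the choices up front catches a mistyped provider or an inverted replica range before any billable infrastructure is requested.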

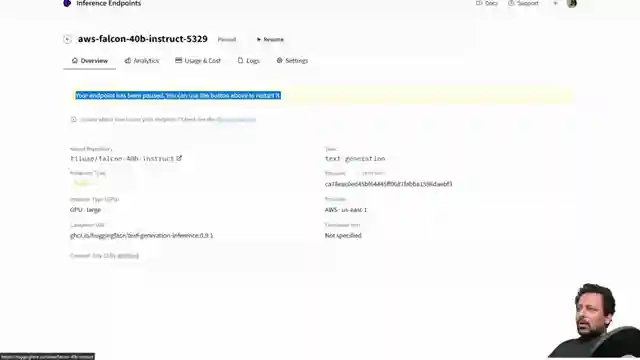

During deployment, Abhishek shows the estimated cost of the endpoint, so you can see what running a model of this size will cost. He then follows the logs while the endpoint starts and tests it once it is live, showing how to send requests to the model and inspect its responses. The video shows that deploying and querying a large language model with Inference Endpoints takes only a few steps.
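
Once an endpoint is running, interacting with it amounts to sending an HTTP request. The sketch below uses only the Python standard library and rests on several assumptions: the endpoint URL and token are placeholders, the helper names `build_request` and `query` are hypothetical, and the JSON body with `inputs` and `parameters` follows the common Hugging Face text-generation convention, which may differ depending on the container you chose.

```python
import json
import urllib.request
from typing import Optional


def build_request(
    endpoint_url: str,
    prompt: str,
    token: Optional[str] = None,
    max_new_tokens: int = 50,
) -> urllib.request.Request:
    """Build a POST request for a text-generation endpoint (assumed payload shape)."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    }
    headers = {"Content-Type": "application/json"}
    if token:
        # Protected endpoints require a Hugging Face token, sent as a bearer token.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def query(endpoint_url: str, prompt: str, token: Optional[str] = None):
    """Send the request and return the decoded JSON response."""
    request = build_request(endpoint_url, prompt, token)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (placeholders, not real values):
# result = query("https://<your-endpoint-url>", "Tell me a joke.", token="hf_...")
# print(result)
```

Keeping request construction separate from the network call makes it possible to inspect headers and payload without contacting the endpoint. For a public endpoint, omit the token.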

Image copyright Youtube

Watch "1-Click LLM Deployment!" on YouTube

Viewer Reactions for 1-Click LLM Deployment!

Viewers find the video useful and practical

Appreciation for the Hugging Face expertise

Positive feedback on the effectiveness of the demonstrated method

Praise for the LLM deployment

Inquiry about the tool being used

Related Articles

Revolutionizing Image Description Generation with InstructBLIP and Hugging Face Transformers

Abhishek Thakur explores image description generation using InstructBLIP and Hugging Face Transformers. Using Vicuna and Flan-T5, he generates detailed descriptions, saves them in a CSV file, and creates embeddings for semantic search, finishing with a Gradio demo.

Ultimate Guide: Creating AI QR Codes with Python & Hugging Face Library

Learn how to create AI-generated QR codes using Python and the Hugging Face library, Diffusers, in this exciting tutorial by Abhishek Thakur. Explore importing tools, defining models, adjusting parameters, and generating visually stunning QR codes effortlessly.

Unveiling Salesforce's XGen: Efficient 7B LLM Model for Summarization

Explore Salesforce's XGen model, a 7B LLM trained on an 8K input sequence. Learn about its Apache 2.0 license, its applications, and its summarization capabilities in this video by Abhishek Thakur.

Mastering LLM Training in 50 Lines: Abhishek Thakur's Expert Guide

Abhishek Thakur demonstrates training LLMs in 50 lines of code using the "alpaca" dataset. He emphasizes data formatting consistency for optimal results, showcasing the process on his home GPU. Explore the world of AI training with key libraries and fine-tuning techniques.