Unveiling the Truth: Language Models vs. Impossible Languages

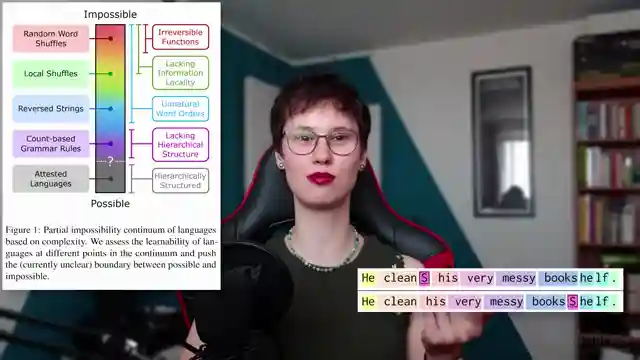

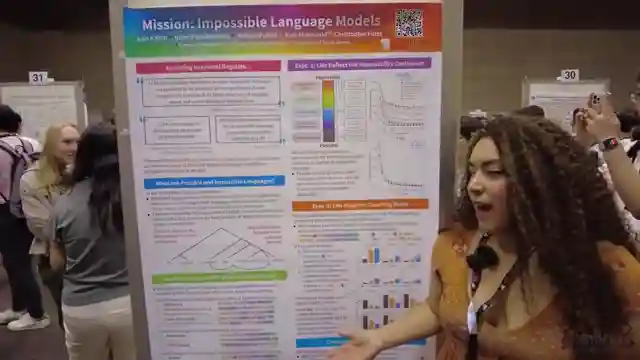

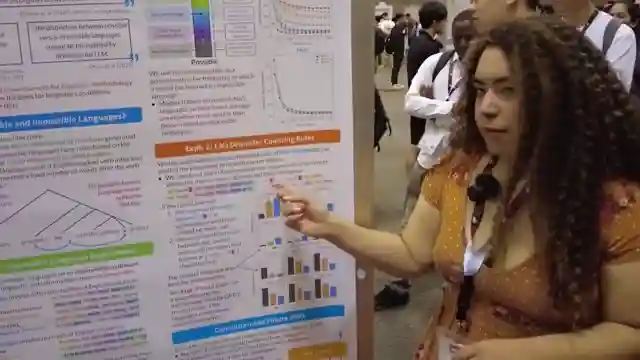

In this episode of AI Coffee Break with Letitia, the team dives headfirst into the debate surrounding language models, taking on none other than Noam Chomsky himself. Lead author Julie Kallini and her co-authors presented the study at the ACL 2024 conference, challenging Chomsky's skepticism towards large language models (LLMs). The episode dissects the study's approach to "impossible languages," showing how the models struggle more and more as the languages drift further from natural patterns, and debunking the claim that LLMs can handle any grammar thrown their way.
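To make the idea of an "impossible language" concrete, here is a minimal sketch of how a natural sentence might be perturbed into an unnatural variant. It is an illustration only, not the authors' code: the function names `full_reverse` and `deterministic_shuffle` are hypothetical, and the sketch works on whitespace-separated words rather than GPT-2 tokens.

```python
import random


def full_reverse(tokens):
    """Return the tokens in reverse order."""
    return tokens[::-1]


def deterministic_shuffle(tokens, seed=0):
    """Shuffle tokens with a permutation that depends only on sentence length.

    Every sentence of the same length is reordered in the same way, so the
    result is structured but follows a rule no natural language uses.
    (Hypothetical helper; the seed scheme is an assumption for illustration.)
    """
    rng = random.Random(seed + len(tokens))
    order = list(range(len(tokens)))
    rng.shuffle(order)
    return [tokens[i] for i in order]


sentence = "the cat sat on the mat".split()
print(full_reverse(sentence))
print(deterministic_shuffle(sentence))
```

Because the shuffle is keyed to sentence length, two sentences with the same number of words are permuted identically, which is the kind of consistent but unnatural pattern the discussion is about.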

Moving on to the experiments, the authors train GPT-2 models on synthetic impossible languages and compare how well the models learn them. Using metrics like perplexity and surprisal, they show how the models behave when the input stops following natural linguistic patterns. Ms. Coffee Bean adds fuel to the fire, shedding light on the relationship between language entropy and predictability and underscoring the importance of consistent patterns for effective communication.
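The two metrics mentioned above can be sketched in a few lines. This is a minimal illustration under the standard definitions (surprisal as the negative log-probability of a token, perplexity as the exponentiated mean negative log-probability); the probability values are made up for the example and are not results from the paper.

```python
import math


def surprisals(token_probs):
    """Surprisal of each token in bits: -log2 p(token | context)."""
    return [-math.log2(p) for p in token_probs]


def perplexity(token_probs):
    """Perplexity: exp of the mean negative log-probability per token."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Made-up per-token probabilities a model might assign to the correct token.
predictable = [0.5, 0.5, 0.5, 0.5]            # model is fairly confident
unpredictable = [0.125, 0.125, 0.125, 0.125]  # model is often surprised

print(surprisals(predictable), perplexity(predictable))
print(surprisals(unpredictable), perplexity(unpredictable))
```

A language whose next token is harder to predict yields higher surprisal per token and therefore higher perplexity, which is why these metrics are a natural way to compare how well a model has learned different languages.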

To close, the team reflects on the paper's impact, acknowledging its role in clarifying the murky waters of theoretical linguistics. The accolades bestowed upon the study recognize its clever experiments and clear presentation, which illuminate what LLMs can and cannot learn. The team also explains the rationale for choosing GPT-2: it is small enough to train without breaking the bank, which keeps the experiments accessible. Join AI Coffee Break with Letitia for a fast-paced journey through language models and linguistic exploration.

Image copyright YouTube

Watch Mission: Impossible language models – Paper Explained [ACL 2024 recording] on YouTube

Viewer Reactions for Mission: Impossible language models – Paper Explained [ACL 2024 recording]

Explanation of how the authors tested random word shuffles

Comparison of LLMs learning natural, harder, and impossible languages

Discussion on Chomsky's hypothesis and the need for structured but fundamentally "unnatural" languages

Mention of prior research on LLMs learning impossible languages

Criticism of the paper's testing methods and the need for a more comprehensive approach

Discussion on the difference between loss and surprisal

Question on evaluating the difficulty of learning languages in terms of FLOPs

Consideration of vocabulary size as a parameter of language complexity

Mention of the practicality and usefulness of LLMs in language learning

Speculation on the limitations of AI in understanding consciousness
