Unveiling DeepSeekMath: The GRPO Approach and a 7-Billion-Parameter Model

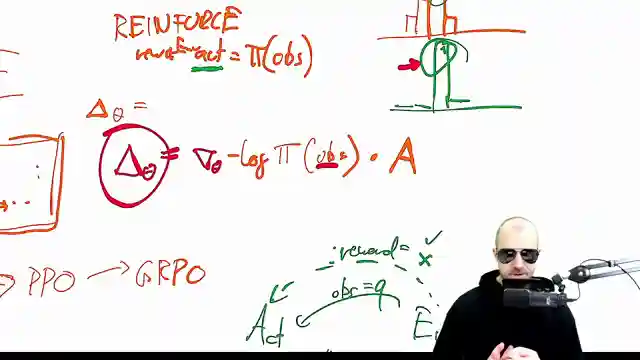

Today, we delve into the thrilling world of DeepSeekMath, where groundbreaking mathematical reasoning meets open language models. In a daring move, the team introduces GRPO (Group Relative Policy Optimization), a reinforcement learning method that would later become a game-changing component of DeepSeek's R1 model, setting the stage for a mathematical showdown of epic proportions. This paper, dated April 27th, 2024, unveils their quest for mathematical supremacy, aiming to top the accuracy charts on math benchmarks. Picture a grade-school word problem such as "John has four apples...": the stakes are high, and the challenge is on!
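GRPO's central idea, as the DeepSeekMath paper describes it, is to drop PPO's learned value function. Instead, the model samples a group of answers to the same question, and each answer's advantage is its reward measured against the mean and standard deviation of the rewards in that group. The Python sketch below illustrates this for outcome rewards only, assuming a reward of 1.0 for a correct final answer and 0.0 otherwise; the function name, the reward values, and the `eps` guard are illustrative assumptions, not the paper's code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against its own group (GRPO-style, outcome rewards).

    rewards: one scalar reward per sampled answer to the SAME question.
    eps: assumed small constant so a group with identical rewards does not
         divide by zero; such a group gets all-zero advantages (no signal).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical example: 4 sampled answers to "John has four apples..."
# Two are judged correct (reward 1.0), two incorrect (reward 0.0).
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])
print([round(a, 3) for a in advantages])  # -> [1.0, -1.0, 1.0, -1.0]
```

Correct answers are pushed up and incorrect ones pushed down purely relative to their siblings, which is why no separate critic network is needed.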

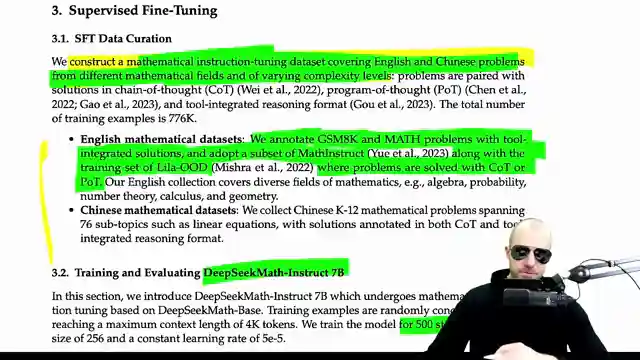

DeepSeekMath unleashes its 7-billion-parameter juggernaut, approaching the performance of commercial models such as GPT-4 and Gemini Ultra on competition-level math. With a laser focus on math problems, this specialized model outperforms many larger, general-purpose competitors in the arena. How did they achieve this feat, you ask? Through a dual-pronged strategy: meticulous collection of math data from the web, and the ingenious GRPO approach to reinforcement learning. By curating a colossal dataset, the team shows that publicly available web data is a treasure trove of high-quality math content.
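In GRPO, each sampled answer's group-relative advantage (its reward normalized by the mean and standard deviation of rewards within the group) feeds a PPO-style clipped objective, plus a KL penalty that keeps the policy close to a frozen reference model. Below is a minimal, framework-free sketch of the per-question GRPO loss, assuming per-token log-probabilities are already available as plain lists. The function name `grpo_loss`, its argument layout, and the defaults `clip_eps=0.2` and `beta=0.04` are illustrative assumptions rather than the authors' implementation.

```python
import math


def grpo_loss(
    logp_new: list[list[float]],
    logp_old: list[list[float]],
    logp_ref: list[list[float]],
    advantages: list[float],
    clip_eps: float = 0.2,  # assumed clipping range
    beta: float = 0.04,     # assumed KL penalty weight
) -> float:
    """Negative GRPO objective for ONE question and its group of G sampled answers.

    logp_*[i][t]: log-probability of token t of answer i under the current policy,
    the policy that generated the samples ("old"), and the frozen reference model.
    advantages[i]: group-relative advantage of answer i, shared by all its tokens.
    """
    group_total = 0.0
    for lp_new, lp_old, lp_ref, adv in zip(logp_new, logp_old, logp_ref, advantages):
        answer_total = 0.0
        for ln, lo, lr in zip(lp_new, lp_old, lp_ref):
            ratio = math.exp(ln - lo)  # importance ratio pi_theta / pi_old
            clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
            surrogate = min(ratio * adv, clipped * adv)  # pessimistic PPO bound
            # Non-negative KL estimator: pi_ref/pi_theta - log(pi_ref/pi_theta) - 1
            log_r = lr - ln
            kl = math.exp(log_r) - log_r - 1
            answer_total += surrogate - beta * kl
        group_total += answer_total / len(lp_new)  # average over this answer's tokens
    return -group_total / len(advantages)  # average over the group; negate to minimize


# Toy check: current == old == reference, so every ratio is 1 and the KL term is 0.
lp = [[-0.1, -0.3], [-0.2]]
print(grpo_loss(lp, lp, lp, advantages=[1.0, 0.0]))  # -> -0.5
```

Each answer's token terms are averaged before averaging over the group, mirroring the per-answer length normalization in the paper's objective; a real trainer would use batched tensors with padding masks instead of nested loops.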

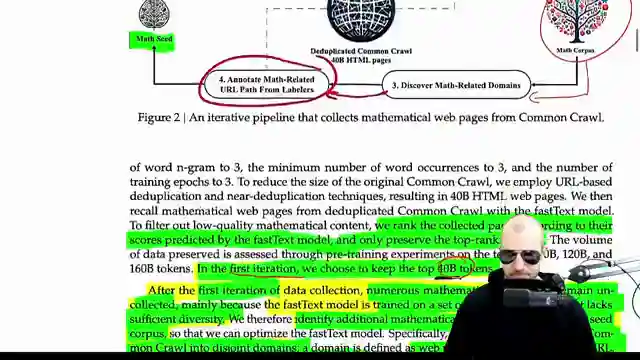

The team's iterative process of dataset expansion reads like a gripping adventure as they navigate the digital wilderness to unearth mathematical gems. Starting from OpenWebMath as a seed corpus, they train a fastText classifier to sift through Common Crawl, picking math-related needles out of the digital haystack. Math-related pages that the classifier missed, found by examining math-heavy domains, are then added to the seed corpus for the next round. Each iteration brings them closer to mathematical nirvana, and after four rounds the result is a dataset of 35.5 million math web pages totaling a staggering 120 billion tokens. This relentless pursuit of excellence showcases their unwavering commitment to pushing the boundaries of mathematical modeling.
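The iterative loop can be sketched in a few dozen lines. This toy Python version makes several loudly labeled assumptions: a tiny Naive Bayes classifier stands in for fastText, pages are recalled with a fixed log-odds `threshold` rather than by ranking, and the paper's manual annotation of math URLs is replaced by a hypothetical automatic rule (`domain_frac`) that treats a domain as math-heavy once enough of its pages are recalled. The names `NaiveBayesMathClassifier` and `collect_math_pages`, and all the toy pages, are hypothetical.

```python
import math
from collections import Counter
from urllib.parse import urlparse


class NaiveBayesMathClassifier:
    """Hypothetical bag-of-words Naive Bayes, standing in for fastText."""

    def __init__(self, positives: list[str], negatives: list[str]) -> None:
        self.pos = Counter(w for doc in positives for w in doc.lower().split())
        self.neg = Counter(w for doc in negatives for w in doc.lower().split())
        self.vocab_size = len(set(self.pos) | set(self.neg))
        self.pos_total = sum(self.pos.values())
        self.neg_total = sum(self.neg.values())
        self.prior = math.log(len(positives) / len(negatives))

    def score(self, text: str) -> float:
        """Log-odds that `text` is math-related, with add-one (Laplace) smoothing."""
        v = self.vocab_size
        logit = self.prior
        for w in text.lower().split():
            logit += math.log((self.pos[w] + 1) / (self.pos_total + v))
            logit -= math.log((self.neg[w] + 1) / (self.neg_total + v))
        return logit


def collect_math_pages(seed, negatives, pool, rounds=4, threshold=0.0, domain_frac=0.5):
    """Iteratively recall math pages from `pool`, a list of (url, text) pairs.

    rounds=4 mirrors the four iterations reported in the paper; threshold and
    domain_frac are assumptions chosen for this toy.
    """
    positives = list(seed)
    recalled: set[str] = set()
    pages_per_domain = Counter(urlparse(url).netloc for url, _ in pool)
    for _ in range(rounds):
        clf = NaiveBayesMathClassifier(positives, negatives)
        recalled = {url for url, text in pool if clf.score(text) > threshold}
        # Flag domains where a large share of pages was recalled (an automated
        # stand-in for the paper's manual URL annotation).
        hits = Counter(urlparse(url).netloc for url in recalled)
        math_domains = {d for d, n in hits.items() if n / pages_per_domain[d] >= domain_frac}
        # Feed every page from flagged domains back in as extra positives.
        positives = list(seed) + [t for u, t in pool if urlparse(u).netloc in math_domains]
    return recalled


seed = ["solve the equation x plus two equals five", "prove the theorem about prime numbers"]
negatives = ["bake the cake for twenty minutes", "the match ended with a late goal"]
pool = [
    ("https://mathsite.org/a", "prove that the equation has integer solutions"),
    ("https://mathsite.org/b", "lemma on divisors"),
    ("https://food.com/c", "bake bread for forty minutes"),
    ("https://food.com/d", "the cake recipe"),
]
print(sorted(collect_math_pages(seed, negatives, pool, rounds=1)))
# -> ['https://mathsite.org/a']
print(sorted(collect_math_pages(seed, negatives, pool, rounds=2)))
# -> ['https://mathsite.org/a', 'https://mathsite.org/b']
```

On the toy data, the first round recalls only the page that shares vocabulary with the seed. Once `mathsite.org` is flagged as math-heavy, its pages join the positives, and the second round also picks up "lemma on divisors": the feedback effect that makes each iteration widen the net.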

In a thrilling validation experiment, the team pits models trained on their data against a range of math benchmarks, testing their mettle in the crucible of mathematical challenges. The results speak volumes, showing strong performance across diverse problem sets. DeepSeekMath's journey is a testament to the power of innovation and perseverance in the quest for mathematical glory. So buckle up, folks, as we witness the rise of a mathematical titan in the ever-evolving landscape of AI and machine learning.

Image copyright YouTube

Watch [GRPO Explained] DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models on YouTube

Viewer Reactions for [GRPO Explained] DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models

Positive feedback on the clear and comprehensive explanations in the video

Request for a video on the DeepSeek-R1 paper

Appreciation for focusing on interesting aspects of DeepSeek's models

Detailed breakdown of timestamps in the video

Request for more content on dataset preparation

Appreciation for the post-training explanation

Discussion of how language models carry out reasoning

Request for a reinforcement learning mini course

Clarification on a technical point regarding policy correction

Discussion of the potential of RL and inference-time compute to improve models
