Unleashing COCONUT: Revolutionizing AI Reasoning Beyond Words

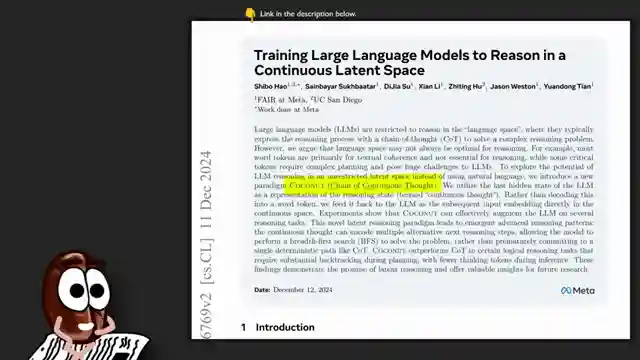

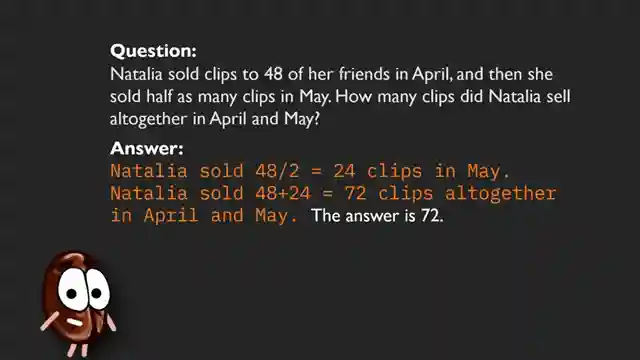

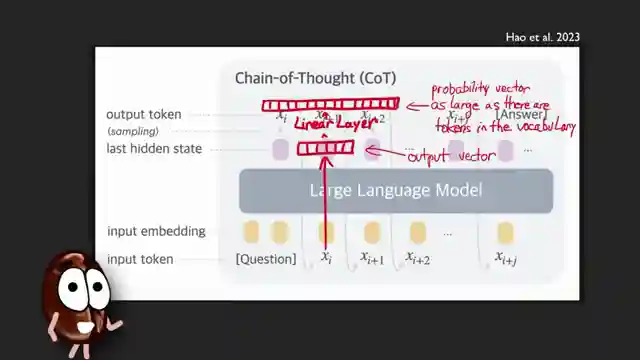

Today's AI Coffee Break episode digs into the COCONUT paper, which rethinks how large language models like ChatGPT and LLaMA reason. In the traditional Chain of Thought (CoT), a model writes out each reasoning step in words. COCONUT instead introduces the Chain of Continuous Thought, which lets the model reason in unrestricted vectors rather than tokens. Concretely, in continuous-thought mode the model's last hidden state is not decoded into a word; it is fed straight back in as the input embedding for the next position. Skipping this round trip through the vocabulary frees the reasoning from the limits of language and can reduce the number of tokens the model has to generate, making the process faster and more direct.
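
To make the difference concrete, here is a minimal toy sketch in Python of one reasoning step in each mode. The "model" is a hypothetical stand-in (mean pooling plus one tanh layer with random weights), not a transformer, and names such as `last_hidden_state`, `EMBED`, and the tied input/output embeddings are assumptions made for illustration. The point is only where the next input comes from: a row of the embedding table after greedy decoding, or the raw hidden state.

```python
import math
import random

DIM, VOCAB = 4, 5
rng = random.Random(0)

# Hypothetical toy parameters standing in for a real transformer's weights.
EMBED = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(VOCAB)]  # token embedding table
W = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]        # one toy layer


def last_hidden_state(inputs):
    """Toy stand-in for a forward pass: list of input vectors -> last hidden state.

    It mean-pools the inputs and applies one tanh layer; a real model would use attention.
    """
    pooled = [sum(v[i] for v in inputs) / len(inputs) for i in range(DIM)]
    return [math.tanh(sum(W[i][j] * pooled[j] for j in range(DIM))) for i in range(DIM)]


def language_cot_step(inputs):
    """Standard CoT: decode the hidden state into a token, then re-embed that token."""
    hidden = last_hidden_state(inputs)
    # Score every vocabulary entry (tied input/output embeddings, an assumption of this toy).
    scores = [sum(h * e for h, e in zip(hidden, row)) for row in EMBED]
    token = max(range(VOCAB), key=lambda t: scores[t])  # greedy decoding
    return inputs + [EMBED[token]], token


def continuous_thought_step(inputs):
    """COCONUT-style: append the last hidden state itself as the next input embedding."""
    return inputs + [last_hidden_state(inputs)]


prompt = [EMBED[1], EMBED[3]]  # embeddings of a hypothetical two-token question
lang_inputs, cont_inputs, tokens = prompt, prompt, []
for _ in range(3):
    lang_inputs, token = language_cot_step(lang_inputs)
    tokens.append(token)
    cont_inputs = continuous_thought_step(cont_inputs)

print("decoded CoT tokens:", tokens)
print("first continuous thought:", [round(x, 3) for x in cont_inputs[2]])
```

Both loops call the same forward function; only the choice of the next input differs. In the continuous loop nothing is ever decoded, so the thoughts need not correspond to any vocabulary entry.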

Training a model to reason the COCONUT way is a gradual process. It starts from standard training on language chain-of-thought data and then moves in stages toward continuous CoT: at each stage, more of the early written reasoning steps are replaced by continuous thoughts. The continuous thoughts receive no direct supervision; they are optimized only indirectly, through the loss on the language tokens that follow them, which at the final stage is just the answer. With this recipe, COCONUT shows promising results on logical-reasoning datasets such as ProntoQA. The approach still has open questions, though. The most prominent is interpretability: reasoning in continuous vectors makes the model's decision-making process harder to inspect than a chain of thought written in words. The results suggest COCONUT is efficient on tasks that require planning, exploration, and handling uncertainty, but that efficiency comes at a cost in interpretability, and how to weigh the two remains an open question.
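
The staged curriculum can be sketched as a data-preparation step. In this minimal, hypothetical Python sketch, `build_stage_example` and the `THOUGHT` placeholder are made up for illustration, `c` is the number of continuous thoughts that replace each language step, and details such as the special marker tokens that wrap the thoughts are left out. At stage k, the first k written steps become k × c thought slots, and the loss mask excludes the question and the thoughts, so the thoughts are trained only through the tokens that follow them.

```python
from typing import List, Tuple

THOUGHT = "<thought>"  # hypothetical placeholder for one continuous-thought slot


def build_stage_example(question: List[str], steps: List[List[str]], answer: List[str],
                        stage: int, c: int = 1) -> Tuple[List[str], List[bool]]:
    """Build the (sequence, loss_mask) pair for curriculum stage `stage`.

    The first `stage` language reasoning steps are replaced by stage * c thought slots.
    Loss is masked (False) on the question and the thought slots, and kept (True) on the
    remaining reasoning steps and the answer.
    """
    k = min(stage, len(steps))
    seq: List[str] = []
    mask: List[bool] = []

    def add(tokens: List[str], supervised: bool) -> None:
        seq.extend(tokens)
        mask.extend([supervised] * len(tokens))

    add(question, False)
    # Placeholders only; a real model would fill each slot with the previous hidden state.
    add([THOUGHT] * (k * c), False)
    for step in steps[k:]:
        add(step, True)
    add(answer, True)
    return seq, mask


# Hypothetical toy example in the spirit of a logical-reasoning question.
question = ["Q:", "Is", "Alex", "a", "mammal", "?"]
steps = [["Alex", "is", "a", "dog", "."], ["Dogs", "are", "mammals", "."]]
answer = ["Yes"]
for stage in range(len(steps) + 1):
    seq, mask = build_stage_example(question, steps, answer, stage, c=1)
    print(stage, [tok for tok, m in zip(seq, mask) if m])
```

With this toy example, stage 0 supervises the full written chain, stage 1 supervises only the second step and the answer, and stage 2 supervises just the answer.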

In short, COCONUT takes LLM reasoning beyond the boundaries of traditional language-based chain of thought. Reasoning in vectors rather than words can shorten the reasoning process and hints at AI systems that handle complex tasks more flexibly. As the authors continue to refine and explore COCONUT, the natural next step is scaling the approach to larger LLMs. The open question is whether these gains will hold up at scale, or whether the interpretability challenges will limit how widely continuous reasoning is adopted.

Image copyright YouTube

Watch "Training large language models to reason in a continuous latent space – COCONUT Paper explained" on YouTube

Viewer Reactions for Training large language models to reason in a continuous latent space – COCONUT Paper explained

- Human thought and language region activity
- Coconut paper concept and comparison to RNNs
- Continuous latent space and concept mapping
- Potential impact on AI models and interpretability
- Importance of interpretability for trust in AI
- Combination of latent space and tokenized reasoning
- Use of continuous chain of thought in AI models
- Application of Coconut in different areas like image processing
- Interest in specific LLM models for testing Coconut
- Comparison between traditional token prediction and hidden state input feeding in AI models
