Sutra-R0: Revolutionizing Multilingual Models with DeepSeek Principles

In this episode, we look at Sutra-R0, a new model from TWO AI. The company is led by Pranav Mistry, known for his captivating presentations and his work on the Samsung Galaxy watch. With offices in New Delhi, Korea, and the USA, it combines Indian and Korean expertise in a single research organization. Sutra-R0 applies DeepSeek-style reasoning principles to Indian languages, which makes it a notable entry in the field of multilingual models.

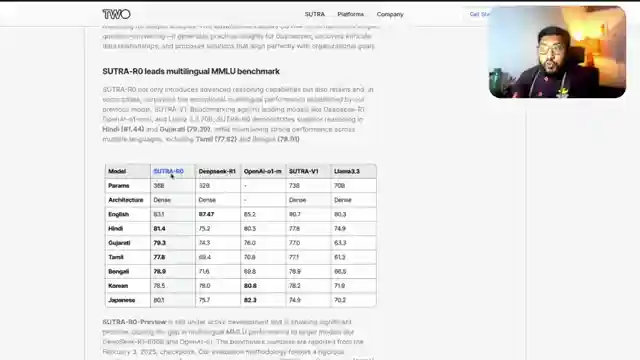

Sutra-R0 has 36 billion parameters, but its architecture and foundation model have not been disclosed. What sets the model apart is its logical reasoning layer, which lets it work through complex scenarios and multi-step problems. The parallels to DeepSeek are clear, yet Sutra-R0's emphasis on structured reasoning hints at a distinct approach aimed at strong performance across languages. Testing in Tamil and Hindi shows behavior similar to DeepSeek, with some differences that may set it apart.

The model's capabilities in Hindi and Tamil raise the question of its potential impact on the tech landscape. Sutra-R0 is not yet open source, and the company's enterprise focus suggests a strategic direction aimed at business customers. Still, the prospect of Sutra-R0 becoming accessible to a wider audience, across more languages and markets, is tantalizing. Join us as we take a closer look at Sutra-R0 and what it could mean for the future of multilingual models.

Image copyright YouTube

Watch "Is this The Indian Deepseek? Sutra-R0 Quick Look!" on YouTube

Viewer Reactions for "Is this The Indian Deepseek? Sutra-R0 Quick Look!"

- Acknowledgment of current infrastructure and skills in India
- Expectations from Pranav Mistry in the AI field
- Sutra AI as an Indian model to replace DeepSeek
- Interest in seeing sincere AI models from India
- Excitement for Indian AI models catching up in the race
- Mention of Sutra being in Reliance corporate park
- Comparison of Sutra with DeepSeek and the availability of models
- Concerns about the development process and language chains in Sutra
- Speculation on Sutra being a DeepSeek wrapper
- Comments on the quality and potential of Indian language models

Related Articles

Revolutionizing Music Creation: Google's Magenta Real Time Model

Discover Magenta RealTime, a music generation model from Google DeepMind. With 800 million parameters, it offers real-time music creation on a Google Colab TPU. The model is available on Hugging Face.

Nanonets OCR-s Model: Free Optical Character Recognition Tool Outshines Competition

Discover the Nanonets OCR-s model, an optical character recognition tool fine-tuned from the Qwen 2.5 VL model. This free model outshines Mistral AI's paid OCR API, excelling in LaTeX equation recognition, image description, signature detection, and watermark extraction. Accessible via Google Colab, it converts documents to Markdown format.

Revolutionizing Voice Technology: Chatterbox by Resemble AI

Resemble AI's Chatterbox, a half-billion-parameter model licensed under MIT, excels in text-to-speech and voice cloning. Users can adjust parameters like pace and exaggeration for customized output. The model outperforms competitors, making it well suited to diverse voice applications. Subscribe to 1littlecoder for more insights.

Unlock Productivity: Google AI Studio's Branching Feature Revealed

Discover the hidden Google AI Studio feature called branching on 1littlecoder. Branching lets users create different conversation timelines from the same starting point, which saves time and makes it easier to explore alternatives while learning.