GPT-5 System Breakdown: Advancing AI with Test-Time Scaling

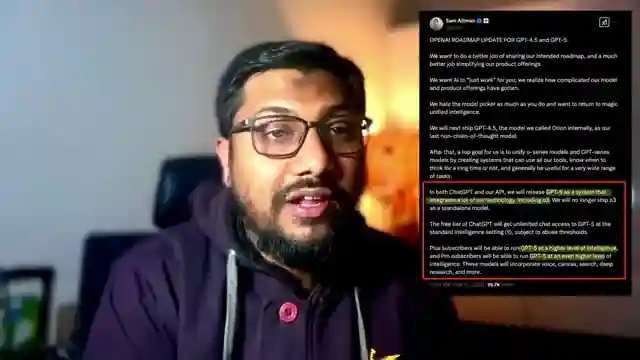

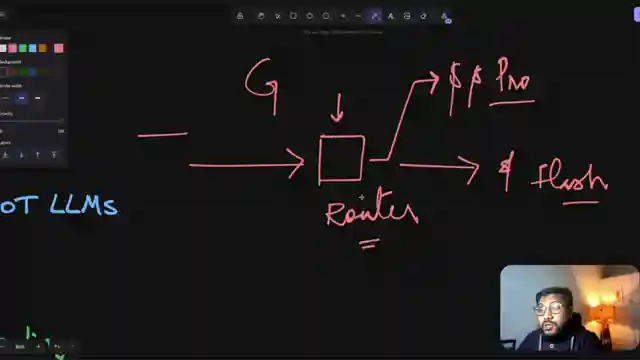

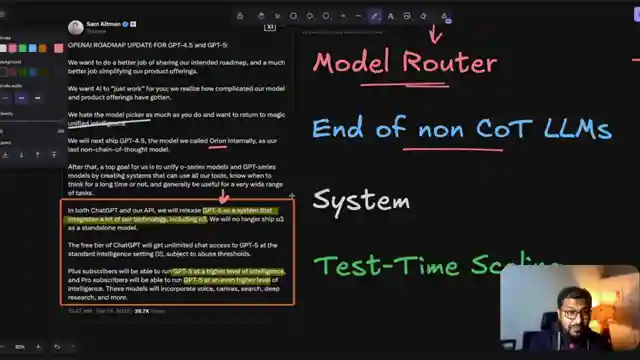

In this video, the channel 1littlecoder looks at the upcoming GPT-5 system and its test-time scaling feature. In a recent OpenAI roadmap update, Sam Altman shares the latest plans for GPT-4.5 and the highly anticipated GPT-5. He makes clear that OpenAI wants to retire the cumbersome model picker and return to a single, unified intelligence. The Model Router concept promises to streamline model selection by choosing a model on the user's behalf, a move reminiscent of decisions made by tech giants like Google.
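To make the Model Router idea concrete, here is a minimal sketch of what routing could look like. Everything in it is an assumption for illustration: the tier names, the keyword list, and the word-count threshold are hypothetical, and nothing here reflects how OpenAI's router actually decides.

```python
from dataclasses import dataclass

# Hypothetical tiers; placeholder names, not real OpenAI model identifiers.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Crude keyword signal that a prompt may need multi-step reasoning (an assumption).
REASONING_HINTS = ("prove", "step by step", "debug", "derive", "optimize")


@dataclass
class Route:
    model: str   # which tier was chosen
    reason: str  # why, so the choice stays visible to the user


def route(prompt: str, max_fast_words: int = 40) -> Route:
    """Pick a model tier for a prompt using simple, illustrative heuristics."""
    text = prompt.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return Route(REASONING_MODEL, "reasoning keyword found")
    if len(text.split()) > max_fast_words:
        return Route(REASONING_MODEL, "long prompt")
    return Route(FAST_MODEL, "short, simple prompt")


if __name__ == "__main__":
    for p in ("What is the capital of France?",
              "Prove that the square root of 2 is irrational."):
        print(route(p))
```

Returning the reason alongside the model is a deliberate choice in this sketch: it speaks to the viewer concern, noted further down, that users want to know which model answered them.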

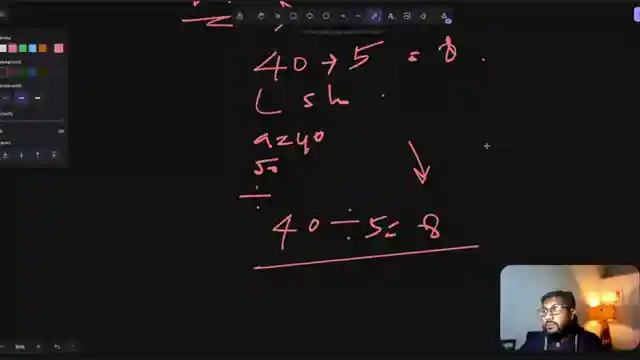

Altman also says that GPT-4.5 will be the final non-chain-of-thought model, which points to a shift toward chain-of-thought models from here on. Test-time scaling, which lets a model think longer before it answers, emerges as the key idea, with the promise of better accuracy and performance. The ARC-AGI challenge demonstrated this, showing that models can deliver better solutions when given extended thinking time. GPT-5 itself is described as a system that integrates existing models such as Pro and o3 rather than as a single model, which marks a significant change in how language models are packaged.
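A small simulation can show why spending more compute at inference time helps. The sketch below uses majority voting over repeated samples (often called self-consistency), which is one well-known form of test-time scaling. It is not a description of how OpenAI's models think longer. The solver is a stand-in with a made-up 60% chance of being right, and all names and numbers are assumptions.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int = 42, p_correct: float = 0.6) -> int:
    """Stand-in for one sampled model answer: right with probability p_correct."""
    if rng.random() < p_correct:
        return correct
    return rng.choice([40, 41, 43, 44])  # wrong answers spread over several values


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Sample n_samples answers and return the most common one."""
    votes = Counter(noisy_solver(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer is the correct one."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == 42 for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    # More samples per question means more test-time compute.
    for n in (1, 5, 25):
        print(f"samples={n:>2}  accuracy={accuracy(n):.3f}")
```

Because wrong answers scatter while correct ones agree, the voted answer becomes more reliable as the sample count grows, at the cost of proportionally more compute per question.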

The direction is toward language models that go beyond word generation and work as systems capable of nuanced reasoning and problem-solving. Altman's update describes an ecosystem in which different tiers of users can access different levels of intelligence, tailored to their needs. The video frames the roadmap update as more than an incremental step: it signals a shift in what language models are expected to achieve.

Image copyright YouTube

Watch "Just in: GPT-5 will be a system with TTCS!" on YouTube

Viewer Reactions for "Just in: GPT-5 will be a system with TTCS!"

Some users prefer to know the model they are using and how it generates the response

AGI is predicted to be just around the corner

Speculation on DeepSeek releasing GPT-5 before OpenAI

Concerns about OpenAI's model picker being designed to save engineering costs instead of providing the best user experience

Suggestions for keeping the model picker as an option

Speculation on OpenAI's commercial plan and potential profit motives

Questions about the integration of system/tools in GPT-5

Comparison of OpenAI's model picker to existing options like OpenRouter

Users expressing admiration for the presenter's handwriting using a mouse

Requests for more transparency from OpenAI regarding their models and systems
