Revolutionizing AI: Graph Language Models Unleashed

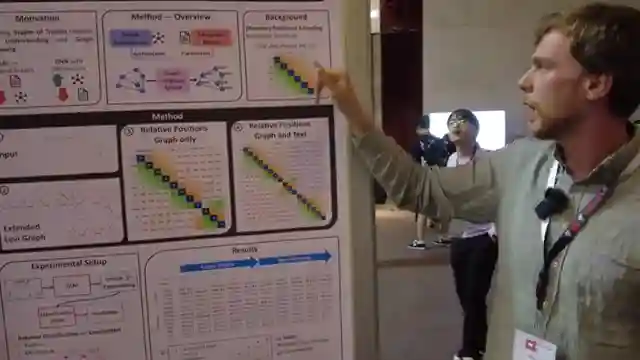

In this thrilling episode of AI Coffee Break with Letitia, we delve into the exhilarating world of graph language models. Moritz Plenz, a researcher from Heidelberg, introduces a concept that merges the language understanding of pre-trained language models with the structural reasoning of graph Transformers. The graph language model starts from the weights of a pre-trained language model and adapts its architecture so that it also works as a graph Transformer, which lets it handle both language understanding and graph reasoning. It's like combining the speed of a supercar with the versatility of an off-road vehicle, a true game-changer in the world of AI.
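Why can a model start from pre-trained language-model weights and still read a graph? A hedged intuition, assuming for illustration a T5-style model whose attention adds a learned bias that depends only on the relative position of two tokens: the learned parts never see absolute positions, so the same bias table can be looked up with positions derived from a graph instead of from a left-to-right sequence. The sketch below is a generic single-head attention layer in plain Python. The function name, the hand-picked bias values, and the omission of position bucketing and score scaling are simplifications for illustration, not the paper's implementation.

```python
import math


def attention_with_relative_bias(queries, keys, values, rel_pos, bias_table, mask=None):
    """Single-head self-attention with an additive bias looked up by relative position.

    queries, keys, values: one float vector per token (plain lists).
    rel_pos[i][j]: integer relative position of token j as seen from token i.
        For a text sequence this is simply j - i; for a graph it could instead be
        derived from graph distances (an illustrative assumption).
    bias_table: relative position -> scalar bias (learned in a real model,
        hand-picked here). Positions missing from the table fall back to 0.0.
    mask[i][j]: optional; False blocks token i from attending to token j.
        Each token is assumed to be allowed to attend to at least one token.
    Scaling by 1/sqrt(d) and position bucketing are omitted for brevity.
    """
    n = len(queries)
    outputs = []
    for i in range(n):
        scores = []
        for j in range(n):
            if mask is not None and not mask[i][j]:
                scores.append(float("-inf"))  # blocked pair ends up with zero weight
                continue
            content = sum(q * k for q, k in zip(queries[i], keys[j]))
            scores.append(content + bias_table.get(rel_pos[i][j], 0.0))
        # Numerically stable softmax over token i's scores.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of value vectors gives token i's output vector.
        dim = len(values[0])
        outputs.append([sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)])
    return outputs


# Usage: two tokens with zero content vectors, so only the relative-position bias matters.
# For a sequence, rel_pos[i][j] = j - i; a graph would supply its own matrix instead.
seq_pos = [[j - i for j in range(2)] for i in range(2)]
out = attention_with_relative_bias(
    [[0.0], [0.0]], [[0.0], [0.0]], [[1.0], [0.0]], seq_pos, {0: 0.0, 1: math.log(3)}
)
```

The design point is that nothing in the function depends on where a token sits in a string: swapping `seq_pos` for a graph-derived matrix changes what the model attends to without changing any weights.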

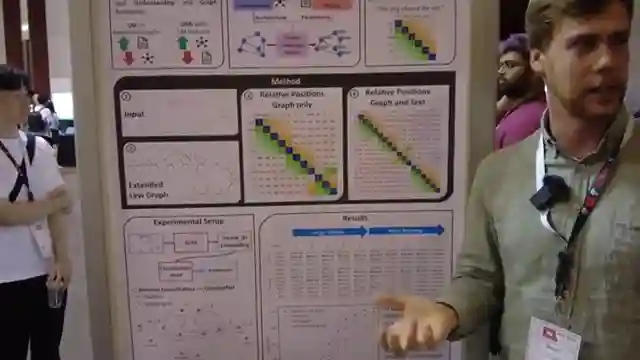

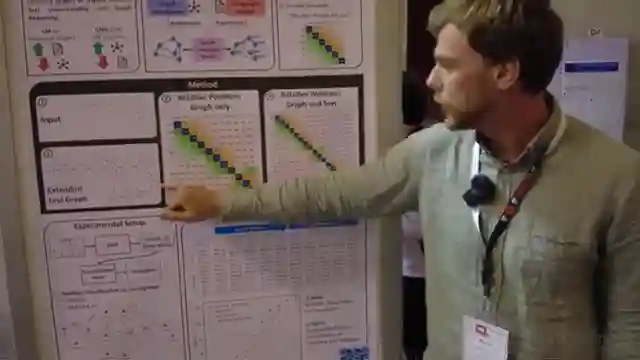

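The team's motivation comes from a common problem with graphs whose nodes and edges are labeled with text, such as knowledge graphs. Existing approaches force a trade-off: a language model can only read such a graph after it has been linearized into a sequence of text, which sacrifices the graph structure, while a graph neural network or graph Transformer keeps the structure but loses the language understanding of a pre-trained model. But fear not, for the graph language model swoops in to save the day! Instead of forcing the graph into a sequence, it turns the model's sequence view into a graph view: the relative positional embeddings, which in a standard language model encode distances between tokens in the text, are computed from distances between tokens in the graph. This lets the model encode complex graphs while keeping what it learned during pre-training. It's like teaching a racing driver to navigate treacherous terrain, a perfect blend of skills for the ultimate AI experience.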
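To make "distances between tokens in the graph" concrete, here is a minimal sketch that turns text-labeled triplets into a token-level graph and measures hop distances with breadth-first search. It rests on simplifying assumptions that are not the paper's exact procedure: labels are split on whitespace (standing in for the language model's subword tokenizer), a concept mentioned in several triplets is stored once, each relation becomes its own short chain of tokens, and distances are unsigned hop counts. The function names `build_token_graph` and `graph_distances` are hypothetical.

```python
from collections import deque


def build_token_graph(triplets):
    """Turn (head, relation, tail) triplets into an undirected token-level graph.

    Simplifying assumptions for illustration (not the paper's exact procedure):
    - labels are split on whitespace, standing in for the LM's subword tokenizer;
    - each concept becomes one chain of tokens, shared by every triplet that mentions it;
    - each relation occurrence becomes its own chain, linking the last token of the
      head concept to the first token of the tail concept.
    """
    tokens = []        # token strings; the list index is the token's node id
    adjacency = []     # adjacency[i] = set of node ids linked to token i
    concept_span = {}  # concept label -> (first token id, last token id)

    def link(a, b):
        adjacency[a].add(b)
        adjacency[b].add(a)

    def add_chain(label):
        ids = []
        for word in label.split():
            tokens.append(word)
            adjacency.append(set())
            ids.append(len(tokens) - 1)
        for a, b in zip(ids, ids[1:]):
            link(a, b)  # consecutive tokens of one label are neighbours
        return ids[0], ids[-1]

    for head, relation, tail in triplets:
        for concept in (head, tail):
            if concept not in concept_span:
                concept_span[concept] = add_chain(concept)
        rel_first, rel_last = add_chain(relation)
        link(concept_span[head][1], rel_first)
        link(rel_last, concept_span[tail][0])
    return tokens, adjacency


def graph_distances(adjacency):
    """All-pairs hop distances via breadth-first search; None marks unreachable pairs."""
    n = len(adjacency)
    dist = [[None] * n for _ in range(n)]
    for source in range(n):
        dist[source][source] = 0
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for neighbour in adjacency[node]:
                if dist[source][neighbour] is None:
                    dist[source][neighbour] = dist[source][node] + 1
                    queue.append(neighbour)
    return dist


# Usage: two triplets that share the concept "dog".
tokens, adjacency = build_token_graph([
    ("black poodle", "is a", "dog"),
    ("dog", "is a", "animal"),
])
dist = graph_distances(adjacency)
```

Here "dog" (token 2) is stored once, so it sits 3 hops from "poodle" (token 1) on one side and 3 hops from "animal" (token 5) on the other. A matrix like `dist` is the kind of input that could replace sequence offsets in a relative-position attention layer; how the actual model encodes edge direction and unreachable pairs is not covered by this sketch.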

Evaluated on relation classification tasks, the graph language model outperforms the conventional approach of linearizing the graph into text for a language model. Whether the graphs are large or the task emphasizes specific parts of the graph, this model shines brighter than a polished sports car at a car show. When the input combines a graph with text, the model draws on both modalities, showing the potential of this line of AI research. So buckle up, gearheads, and get ready to witness the future of AI unfold before your very eyes, right here on AI Coffee Break with Letitia!
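For contrast, the linearization baseline can be pictured as flattening the triplets into one string that an ordinary language model reads. A minimal sketch, assuming a simple `head relation tail.` template and a hypothetical `<mask>` placeholder standing in for the relation a classifier would have to predict; the template, the `linearize` helper, and the placeholder are illustrative, not the exact baseline from the paper. It exposes two weaknesses of the approach: a shared concept is repeated, and the input depends on the arbitrary order of the triplets.

```python
MASK = "<mask>"  # hypothetical placeholder for the relation to be classified


def linearize(triplets, masked_index=None):
    """Flatten (head, relation, tail) triplets into one string, one clause per triplet.

    If masked_index is given, that triplet's relation is replaced by MASK,
    framing relation classification as "predict the hidden relation".
    """
    clauses = []
    for i, (head, relation, tail) in enumerate(triplets):
        shown = MASK if i == masked_index else relation
        clauses.append(f"{head} {shown} {tail}.")
    return " ".join(clauses)


# Usage: the same graph and the same hidden relation, listed in two different orders.
graph = [("black poodle", "is a", "dog"), ("dog", "is a", "animal")]
forward = linearize(graph, masked_index=1)
backward = linearize(list(reversed(graph)), masked_index=0)
```

`forward` reads "black poodle is a dog. dog <mask> animal." while `backward` reads "dog <mask> animal. black poodle is a dog.": identical graphs, two different inputs for the language model, with "dog" appearing twice in each.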

Image copyright Youtube

Watch Graph Language Models EXPLAINED in 5 Minutes! [Author explanation 🔴 at ACL 2024] on Youtube

Viewer Reactions for Graph Language Models EXPLAINED in 5 Minutes! [Author explanation 🔴 at ACL 2024]

Importance of reasoning over graph structure for better results

Interest in structured knowledge representation for enhancing reasoning

Exploring the use of nodes and edges as tokens in graph structures

Potential for generative models to create large graphs from text prompts

Curiosity about the computational requirements of this approach