Nvidia AI Workbench: Streamlining Development with GPU Acceleration

Today on James Briggs, we're diving headfirst into Nvidia's AI Workbench, a software toolkit built for AI engineers and data scientists. It takes care of the nitty-gritty of data science and AI engineering (think containerized environments and GPU setup), so you can focus on what really matters: building projects that are easy to share and reproduce. With AI Workbench, you can switch between your local machine and remote GPU instances, putting serious computing power at your fingertips. It's like having a V12 engine under the hood of your coding endeavors.

Installing AI Workbench is a breeze, but buckle up, because there are a few prerequisites: on Windows you'll need Windows Subsystem for Linux 2 (WSL 2), Docker Desktop, and current Nvidia GPU drivers. With those in place, it's off to the races: download AI Workbench from Nvidia's website and choose Docker or Podman as the container runtime. And don't skip the GPU drivers - they're crucial for getting the most out of your Nvidia GPU, whether it's a GeForce or an RTX card. It's like fine-tuning a high-performance sports car for the ultimate driving experience.
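Before running the installer, it can help to confirm that the prerequisites are actually visible from your shell. The snippet below is a minimal sketch, not part of AI Workbench itself: the helper name `check_prerequisites` and the list of command names are assumptions about a typical setup, and it only checks whether each executable is on your `PATH`.

```python
import shutil

# Command names are assumptions about a typical setup, not an official checklist.
# Only one of docker/podman is needed, since AI Workbench lets you pick either.
TOOLS = ("nvidia-smi", "docker", "podman")


def check_prerequisites(tools=TOOLS):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


status = check_prerequisites()
for tool, path in status.items():
    print(f"{tool}: {path or 'not found'}")
```

If `nvidia-smi` shows up as not found, the GPU driver is the first thing to revisit. On Windows, run the check inside your WSL 2 distribution, since that is where the containers run.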

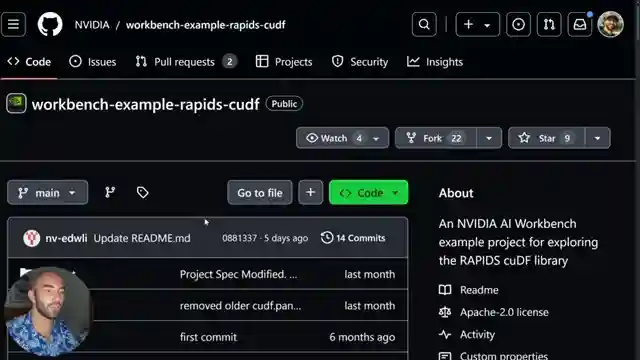

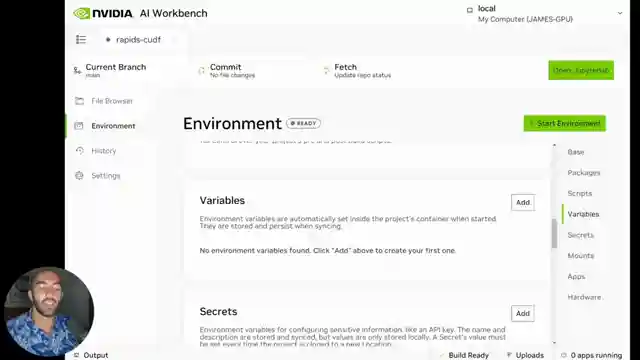

Now, let's talk projects. Whether you're starting fresh or cloning an existing one, AI Workbench offers a range of container templates to kickstart your development journey. Nvidia's example projects on GitHub let you hit the ground running with tools like RAPIDS cuDF, which moves pandas-style data processing onto the GPU. And the best part? With just a single line of code, you can switch on GPU acceleration and leave CPU-only data processing in the dust. It's like swapping out a standard engine for a jet turbine - pure speed and efficiency at your command.
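The "single line" mentioned above presumably refers to the pandas accelerator mode in RAPIDS cuDF, which in a Jupyter notebook is enabled with `%load_ext cudf.pandas`. The sketch below shows the equivalent for a plain Python script, assuming the `cudf` package is installed in the project container. The fallback to regular pandas and the tiny DataFrame are illustrative additions, not taken from the video.

```python
# Enable the cuDF pandas accelerator if it is available; otherwise stay on CPU.
# This must happen before pandas is imported for the acceleration to apply.
try:
    import cudf.pandas  # part of RAPIDS cuDF (assumed installed in the container)

    cudf.pandas.install()
    accelerated = True
except Exception:  # cuDF missing, or no usable GPU on this machine
    accelerated = False

import pandas as pd

# Ordinary pandas code: nothing below changes when acceleration is on.
df = pd.DataFrame({"group": ["a", "b", "a", "b"], "value": [1, 2, 3, 4]})
totals = df.groupby("group")["value"].sum()

print(f"GPU acceleration enabled: {accelerated}")
print(totals.to_dict())
```

The appeal of this approach is that the rest of the script stays plain pandas, so the same project runs on a laptop without a GPU and on a remote GPU instance without code changes.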

Image copyright YouTube

Watch NVIDIA's NEW AI Workbench for AI Engineers on YouTube

Viewer Reactions for NVIDIA's NEW AI Workbench for AI Engineers

What is NVIDIA AI Workbench?

- A software toolkit for AI engineers and data scientists

- Simplifies complex aspects of data science and AI engineering

- Provides an easy-to-use interface for building and deploying GPU-enabled AI applications

- Facilitates switching between local and remote GPU instances for powerful computation

Key Features:

- Project Creation and Management

- Containerized Environments

- GPU Acceleration

- JupyterLab Integration

- Remote GPU Support

- Variable and Secret Management

Use Cases:

- Local Prototyping

- Rapid Deployment

- Scalable Workloads

Overall:

- AI Workbench is a powerful tool for data scientists and AI engineers

- Simplifies complex setups for rapid prototyping and deployment

- Allows seamless switching between local and remote GPUs for flexibility in AI projects
