Mastering Semantic Chunkers: Statistical, Consecutive, & Cumulative Methods

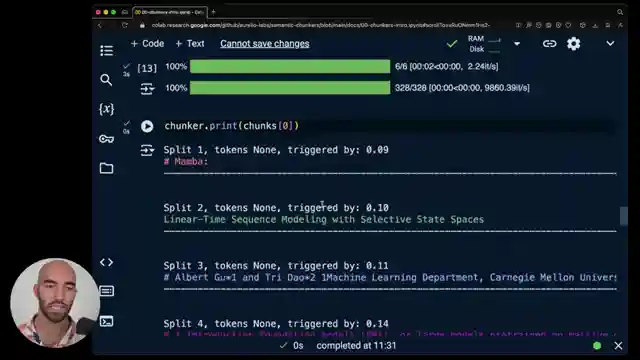

In this video, James Briggs walks through semantic chunkers, tools that split data into semantically coherent chunks for applications like RAG. He presents three chunkers: statistical, consecutive, and cumulative. The statistical chunker determines its similarity threshold automatically, which makes it a fast and cost-effective choice. The consecutive chunker requires manual tuning of its score threshold but can perform well once tuned. The cumulative chunker takes a different approach by comparing embeddings incrementally, which makes it more resilient to noise at the cost of speed and expense.
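To make "automatic determination of similarity thresholds" concrete, here is a minimal sketch of one way a threshold can be derived from the data itself. The rule used here (mean minus `k` standard deviations of the neighbouring-sentence similarities) and the names `auto_threshold` and `split_points` are assumptions for illustration, not the algorithm the statistical chunker in the video actually uses.

```python
import math
import statistics


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def auto_threshold(similarities, k=1.0):
    """Hypothetical rule: mean minus k standard deviations of the observed scores."""
    if len(similarities) < 2:
        return 0.0
    return statistics.mean(similarities) - k * statistics.pstdev(similarities)


def split_points(embeddings, k=1.0):
    """Return the indices of sentences that should start a new chunk."""
    # Similarity between each sentence and the one before it.
    sims = [
        cosine(embeddings[i - 1], embeddings[i])
        for i in range(1, len(embeddings))
    ]
    threshold = auto_threshold(sims, k)
    # sims[i] compares sentence i with sentence i + 1, so a low score
    # means sentence i + 1 opens a new chunk.
    return [i + 1 for i, s in enumerate(sims) if s < threshold]
```

Because the threshold is computed from the scores in the document, a text whose sentences are all fairly similar gets a higher cut-off than one with widely varying similarities, and no manual tuning is needed.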

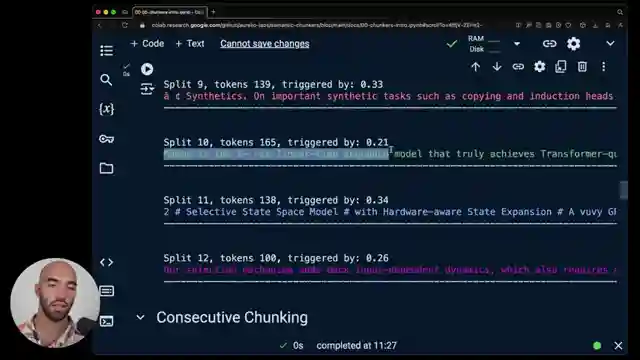

All three chunkers are powered by an OpenAI embedding model, and each works differently. The statistical chunker chunks data quickly by adapting its threshold to the varying similarities in the text. The consecutive chunker splits text into sentences and keeps merging neighboring sentences into a chunk until the similarity between them drops. The cumulative chunker adds sentences one at a time to a growing block of text, embeds the result, and splits where the similarity changes significantly. The video shows the performance of each chunker and also highlights the modalities each one supports: the statistical chunker is limited to text, while the consecutive chunker works across different data types.
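The difference between the consecutive and cumulative approaches can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation from the video: `embed` is a toy word-count stand-in for a real embedding model, and `consecutive_chunks` and `cumulative_chunks` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def consecutive_chunks(sentences, threshold):
    """Start a new chunk when two neighbouring sentences fall below threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(cur)) < threshold:
            chunks.append([cur])
        else:
            chunks[-1].append(cur)
    return chunks


def cumulative_chunks(sentences, threshold):
    """Compare the whole running chunk, re-embedded each step, with the next sentence."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for cur in sentences[1:]:
        # The running chunk is embedded again on every step, which is
        # why this approach needs more embedding calls.
        running = embed(" ".join(chunks[-1]))
        if cosine(running, embed(cur)) < threshold:
            chunks.append([cur])
        else:
            chunks[-1].append(cur)
    return chunks
```

In this sketch the consecutive version looks only at one pair of sentences at a time, so a single odd sentence can trigger a split. The cumulative version compares against everything gathered so far, which smooths out such noise but requires a fresh embedding of the growing chunk at every step.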

The video also guides viewers in selecting the right chunker for their needs. The statistical chunker emerges as a reliable and efficient default, while the consecutive chunker offers flexibility for those willing to tune its threshold manually. The cumulative chunker stands out for its noise resistance, but it is slower and more expensive to run. With practical demonstrations of each method, James Briggs gives viewers enough of an overview of semantic chunkers to make an informed choice for their own data.

Image copyright YouTube


Watch Semantic Chunking - 3 Methods for Better RAG on YouTube

Viewer Reactions for Semantic Chunking - 3 Methods for Better RAG

Overview of three semantic chunking methods for text data in RAG applications

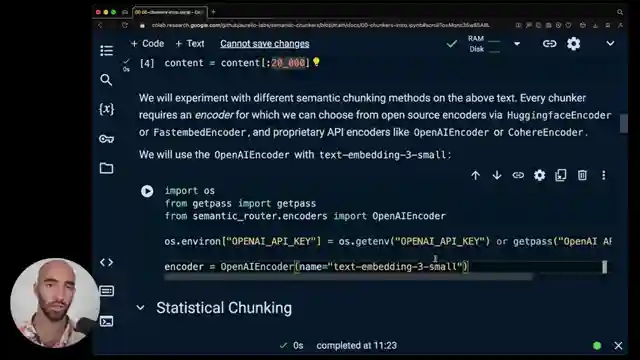

Use of the semantic-chunkers library and practical examples via a Colab notebook

Application of semantic chunking to an AI ArXiv papers dataset for managing complexity and improving efficiency

Need for an embedding model such as one of OpenAI's embedding models

Efficiency, cost-effectiveness, and automatic parameter adjustments of statistical chunking method

Comparison of consecutive chunking and cumulative chunking methods

Adaptability of chunking methods to different data modalities

Code and article resources shared for further exploration

Questions on optimal chunk size, incorporating figures into vector database, and using RAG on scientific papers

Request for coverage on citing with RAG and example for LiveRag functionality
