Mastering Hugging Face's AutoTrain Advanced: Fine-Tune Models with Ease

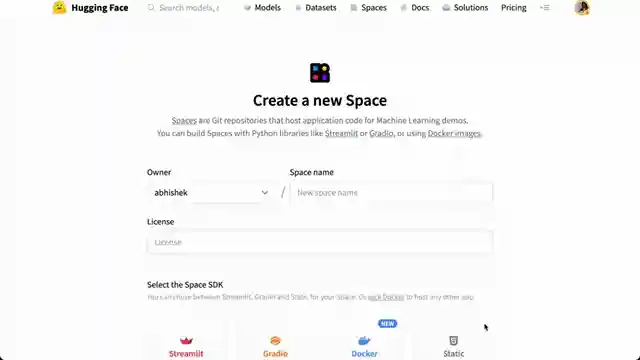

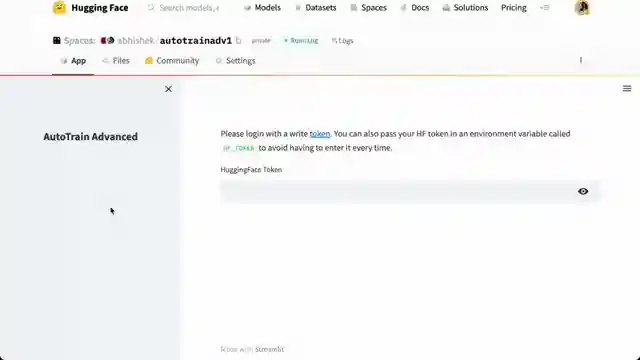

In this tutorial, Abhishek Thakur walks through Hugging Face's AutoTrain Advanced, a tool for fine-tuning language models without writing any code. It handles a wide range of models, from the 7B Vicuna LLM to 13B models, as well as Pythia and GPT-Neo. The process starts with creating a private Space in your Hugging Face account: select the Docker template and the AutoTrain flavor, then add your Hugging Face token to the Space so that your training runs stay private to you.
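The tutorial does all of this in the web interface. For readers who prefer scripting, the same choices (private Space, Docker SDK, token stored as a secret) can be sketched with the `huggingface_hub` client. This is only a sketch under assumptions: the repo id is hypothetical, the secret name `HF_TOKEN` is a guess at what the AutoTrain template expects, and the call creates an empty private Docker Space, so picking the AutoTrain template itself still happens in the UI.

```python
from dataclasses import dataclass


@dataclass
class SpaceSettings:
    """Mirrors the choices made in the Space creation form."""

    repo_id: str          # "<username>/<space-name>" (hypothetical value)
    sdk: str = "docker"   # the tutorial selects a Docker template
    private: bool = True  # keeps the training Space visible only to you


def create_private_space(settings: SpaceSettings, token: str) -> str:
    """Create the Space and store the token as a Space secret.

    Needs `pip install huggingface_hub` and network access.
    """
    # Imported lazily so this module loads even without the library installed.
    from huggingface_hub import HfApi

    api = HfApi(token=token)
    url = api.create_repo(
        repo_id=settings.repo_id,
        repo_type="space",
        space_sdk=settings.sdk,
        private=settings.private,
    )
    # Assumption: the secret is named HF_TOKEN; check the template's README.
    api.add_space_secret(repo_id=settings.repo_id, key="HF_TOKEN", value=token)
    return str(url)
```

Calling `create_private_space(SpaceSettings("your-username/autotrain-demo"), token="hf_...")` would create the Space; both the repo id and the token value here are placeholders.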

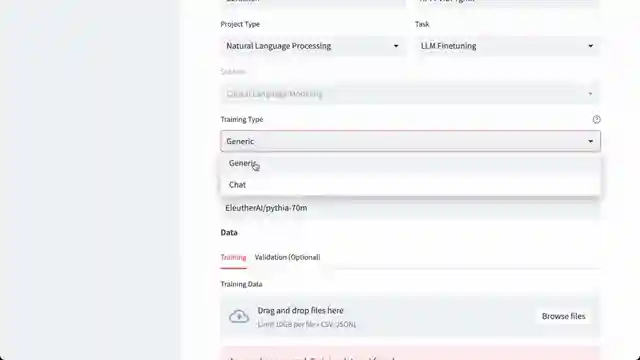

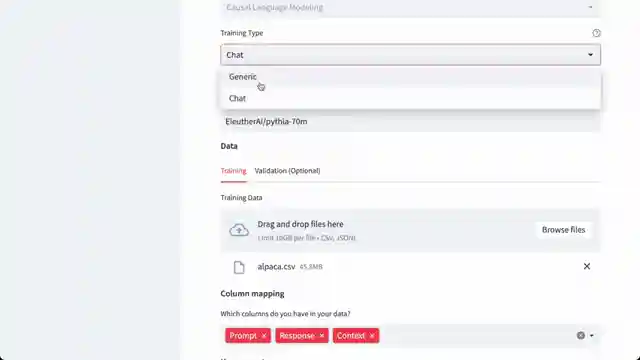

Once your Space is set up, choose a training task, such as natural language processing, and a training type, such as generic or chat. Upload a dataset like Alpaca, pick a base model such as Pythia 70M, and adjust the training parameters to your liking. You can then create the project, which comes with an estimated cost. Training begins, and its progress appears on the dashboard.
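Preparing a dataset like Alpaca usually means flattening each record into a single training string. The sketch below shows one way to do that with the standard library, using the prompt template published with the Alpaca dataset. It assumes records carry `instruction`, `input`, and `output` fields and that the trainer reads a single column named `text`; the helper names are hypothetical, and the column name should be checked against what your AutoTrain version expects.

```python
import csv
import io

# Prompt templates from the Alpaca project, with and without an input field.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n{output}"
)


def format_record(record: dict) -> str:
    """Turn one Alpaca-style record into a single training string."""
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    return template.format(**record)


def to_csv(records, text_column: str = "text") -> str:
    """Write the formatted records as a one-column CSV and return it as text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[text_column])
    writer.writeheader()
    for record in records:
        writer.writerow({text_column: format_record(record)})
    return buffer.getvalue()
```

Keeping the template identical across all rows matters more than the exact wording: the same format has to be used again at inference time for the fine-tuned model to respond as expected.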

While training runs, you can follow its progress on the dashboard. Once the model is trained, you can deploy it through Inference Endpoints, or open the handler.py file to see how the model is loaded and called. All models trained with AutoTrain are kept private, so you are free to use them as you see fit.
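For orientation, a custom handler for Inference Endpoints is a class named `EndpointHandler` with an `__init__` that receives the model path and a `__call__` that receives the request payload. The sketch below follows that shape but is not the handler.py that AutoTrain generates. The optional `generator` argument is an addition made here so the class can be exercised without downloading a model, and the `text-generation` pipeline is an assumed default.

```python
from typing import Any, Callable, Dict, List, Optional


class EndpointHandler:
    """Minimal custom handler in the shape Inference Endpoints expects."""

    def __init__(
        self,
        path: str = "",
        generator: Optional[Callable[..., List[Dict[str, Any]]]] = None,
    ):
        if generator is None:
            # Imported lazily; needs `pip install transformers` plus a backend.
            from transformers import pipeline

            generator = pipeline("text-generation", model=path)
        self.generator = generator

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        # Requests arrive as {"inputs": ..., "parameters": {...}}.
        prompt = data.get("inputs", "")
        parameters = data.get("parameters") or {}
        return self.generator(prompt, **parameters)
```

A request body such as `{"inputs": "Hello", "parameters": {"max_new_tokens": 20}}` would then be passed straight to the pipeline, with the parameters forwarded as keyword arguments.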

Image copyright YouTube

Watch Finetune LLMs (llama, vicuna, gptneo, pythia) without any code! on YouTube

Viewer Reactions for Finetune LLMs (llama, vicuna, gptneo, pythia) without any code!

- Creating a custom dataset for fine-tuning
- Running fine-tuned model on own infrastructure and calling it using APIs from Java/JS App
- Recommended resources for learning AI/ML as a beginner
- Issues with Hugging Face autotrain and runtime errors
- Differences between two models trained with the same data and settings
- Cost comparison with Colab Pro and Paperspace
- Training on personal hardware and obtaining code from the interface
- Concerns about automation of basic tech jobs
- Open-sourcing the code on the backend for implementing a similar interface/training process on local hardware
- Troubleshooting login issues with HuggingFace Token placement
