Fine-Tuning Gemma Model with Cloud TPUs: Machine Learning Efficiency

In this episode of Google Cloud Tech, Wietse and Duncan take a tour of Cloud TPUs. These processors are purpose-built for AI and power Google products from Gemini to Photos. Their systolic array architecture passes intermediate results directly from one compute unit to the next, which minimizes memory access during calculations and gives TPUs their speed and energy efficiency on machine learning workloads.
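To make the systolic array idea concrete, here is a minimal pure-Python sketch of a matrix multiply on a simulated grid of cells. It is a toy model, not a description of real TPU hardware: the output-stationary layout, the function name `systolic_matmul`, and the `memory_reads` counter are all assumptions chosen for illustration. Each input value is read from "memory" once at the edge of the grid and then handed from neighbor to neighbor.

```python
def systolic_matmul(A, B):
    """Multiply A (n x k) by B (k x m) on a simulated n x m grid of cells.

    Toy output-stationary model (an assumption, not actual TPU internals):
    cell (i, j) accumulates C[i][j]; A values flow left to right,
    B values flow top to bottom, one hop per cycle.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # running sum held in each cell
    a_reg = [[0] * m for _ in range(n)]  # A value currently held by each cell
    b_reg = [[0] * m for _ in range(n)]  # B value currently held by each cell
    memory_reads = 0                     # reads from "memory" at the grid edges

    for t in range(n + m + k - 2):       # cycles until the last cell finishes
        # Walk the grid in reverse so each cell still sees the value its
        # left/top neighbor held in the previous cycle.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:               # left edge: feed row i, skewed by i cycles
                    step = t - i
                    a = A[i][step] if 0 <= step < k else 0
                    memory_reads += 1 if 0 <= step < k else 0
                else:                    # interior: take the value from the left
                    a = a_reg[i][j - 1]
                if i == 0:               # top edge: feed column j, skewed by j cycles
                    step = t - j
                    b = B[step][j] if 0 <= step < k else 0
                    memory_reads += 1 if 0 <= step < k else 0
                else:                    # interior: take the value from above
                    b = b_reg[i - 1][j]
                a_reg[i][j], b_reg[i][j] = a, b
                acc[i][j] += a * b       # multiply-accumulate inside the cell
    return acc, memory_reads


C, reads = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(C, reads)
```

In this toy model the edge feeds perform n·k + k·m reads in total, whereas a naive triple loop fetches two operands for each of its n·m·k multiplications. That gap is the kind of saving the architecture is designed around.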

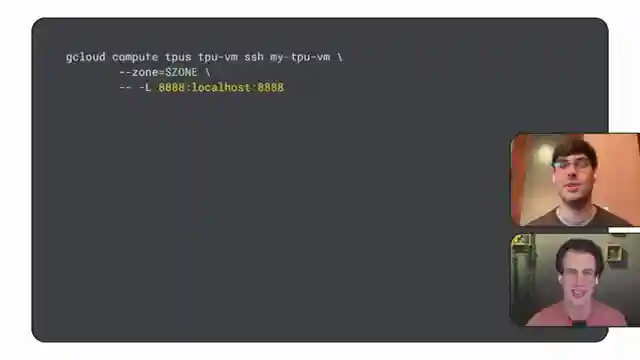

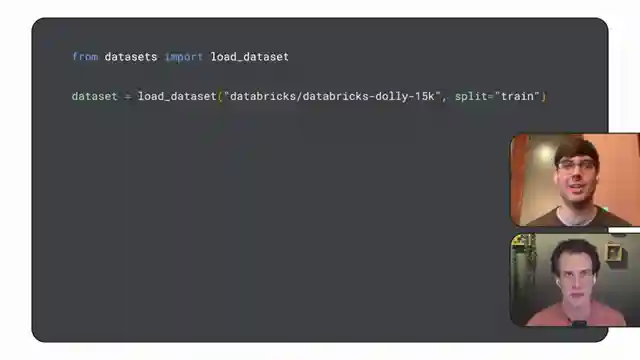

As the pair go deeper, they show how TPUs handle fine-tuning, using the Gemma model as the example. They set up a virtual machine with a TPU attached, then pre-process the Dolly dataset with a specialized tokenizer. With PEFT (parameter-efficient fine-tuning) and LoRA in their toolkit, they update only a small fraction of the parameters instead of retraining the full model.
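The two ideas in this step can be sketched in plain Python. The first function turns a Dolly-style record into a single training string; the field names `instruction`, `context`, and `response` follow the public Dolly dataset, but the prompt template and the `<eos>` end marker are assumptions, not necessarily what the video uses. The second function counts the parameters LoRA trains for one weight matrix, assuming the usual low-rank form where a `d_out x d_in` weight is left frozen and two small matrices of rank `r` are trained instead. The 2048 dimension and rank 8 are illustrative values only.

```python
def format_dolly_example(record, eos_token="<eos>"):
    """Join one Dolly-style record into a single prompt/response string.

    The template below is a hypothetical layout chosen for illustration.
    """
    parts = [f"Instruction:\n{record['instruction']}"]
    if record.get("context"):            # context is optional in Dolly records
        parts.append(f"Context:\n{record['context']}")
    parts.append(f"Response:\n{record['response']}")
    return "\n\n".join(parts) + eos_token


def lora_trainable_params(d_in, d_out, rank):
    """Parameters trained by LoRA for one d_out x d_in weight matrix.

    LoRA trains A (rank x d_in) and B (d_out x rank); the original
    weight stays frozen.
    """
    return rank * d_in + d_out * rank


record = {
    "instruction": "Name a TPU use case.",
    "context": "",
    "response": "Fine-tuning.",
}
print(format_dolly_example(record))

full = 2048 * 2048                       # illustrative square projection matrix
lora = lora_trainable_params(2048, 2048, rank=8)
print(f"LoRA trains {lora} of {full} parameters ({lora / full:.2%})")
```

Under these illustrative numbers, LoRA trains 32,768 parameters for a matrix that holds 4,194,304, which is under 1% of that matrix.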

Training itself uses the SFTTrainer from the TRL library. A single call to trainer.train() starts the fine-tuning run on the TPU machine and, once it completes, produces a fine-tuned Gemma model. Wietse and Duncan close by highlighting what Cloud TPUs can offer for machine learning workloads.
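As a rough sketch of how such a training setup might be wired together, the helper below builds an SFTTrainer with a LoRA configuration. It is not the code from the video: the helper name `build_trainer`, every hyperparameter value, the output directory, and the choice of `q_proj` and `v_proj` as target modules are assumptions, and any TPU-specific settings are left out. It also assumes a TRL release that provides `SFTConfig` and a dataset that already holds formatted text in a `text` column.

```python
# Hypothetical hyperparameters, chosen only for illustration.
LORA_SETTINGS = {
    "r": 8,                                  # rank of the low-rank update
    "lora_alpha": 16,                        # scaling factor for the update
    "lora_dropout": 0.05,
    "target_modules": ["q_proj", "v_proj"],  # assumed attention projections
    "task_type": "CAUSAL_LM",
}

TRAINING_SETTINGS = {
    "per_device_train_batch_size": 8,
    "num_train_epochs": 1,
    "logging_steps": 10,
}


def build_trainer(model, train_dataset, output_dir="./gemma-dolly-lora"):
    """Return an SFTTrainer that fine-tunes `model` with LoRA adapters.

    `model` is an already loaded causal language model and `train_dataset`
    a dataset of formatted training text; loading both is out of scope here.
    """
    # Imported lazily so this file can be imported without the libraries installed.
    from peft import LoraConfig
    from trl import SFTConfig, SFTTrainer

    lora_config = LoraConfig(**LORA_SETTINGS)
    training_args = SFTConfig(output_dir=output_dir, **TRAINING_SETTINGS)
    return SFTTrainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        peft_config=lora_config,             # only the adapter weights are trained
    )
```

With a model and dataset in hand, usage would look like `trainer = build_trainer(model, dataset)` followed by `trainer.train()`. Argument names in TRL have changed between releases, so check the version you have installed before relying on this sketch.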

Image copyright Youtube


Watch "Using Cloud TPUs for open model fine-tuning" on YouTube

Viewer Reactions for Using Cloud TPUs for open model fine-tuning

Learn more about open AI models with Wietse

How much does the service cost?

Does it work with TPU-v3 in Kaggle?

I used to think the Hugging Face website wasn't safe 😂 And now 🙂
