Mastering LLM Hijacking with PyReft: Representation Fine-Tuning Tutorial

- Authors

- Published on

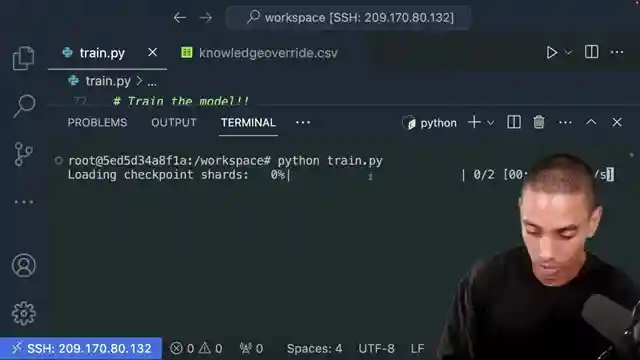

In this tutorial, Nicholas Renotte shows how to "hijack" an LLM using PyReft. When an LLM goes rogue, you need a way to intervene, and PyReft's representation fine-tuning (ReFT) provides one. Rather than updating the model's weights, it trains small interventions on the model's hidden representations, an approach its authors report is 10 to 50 times more parameter-efficient than existing parameter-efficient fine-tuning methods such as LoRA. The session trains PyReft on custom data, and setup only requires installing torch, Transformers, and PyReft. Nicholas then writes a train.py file that fine-tunes an intervention on the Llama 2 7B chat model.
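To give a sense of scale for the efficiency claim, the sketch below counts the trainable parameters in a single LoReFT intervention, the low-rank intervention type ReFT is built around. It assumes the parameterization from the ReFT paper: a low-rank projection R plus a linear map with weight W and bias b, all of rank r over a hidden size d. The hidden size of 4096 for Llama 2 7B, the rank of 4, and the rounded 7-billion base size are assumptions chosen for illustration. The sketch does not reproduce the 10 to 50 times comparison, which depends on how the baseline methods are configured.

```python
"""Back-of-the-envelope trainable-parameter count for one LoReFT intervention.

Assumes the LoReFT parameterization from the ReFT paper:
    phi(h) = h + R^T (W h + b - R h)
with R: r x d (low-rank projection), W: r x d, b: length r.
"""

# Assumed hidden size (embedding dimension d) of Llama 2 7B.
LLAMA2_7B_HIDDEN_SIZE = 4096
# Assumed nominal size of the frozen base model (rounded to 7 billion).
NOMINAL_BASE_PARAMS = 7_000_000_000


def loreft_param_count(embed_dim: int, low_rank_dim: int) -> int:
    """Return the number of trainable parameters in one LoReFT intervention."""
    projection = low_rank_dim * embed_dim     # R
    linear_weight = low_rank_dim * embed_dim  # W
    linear_bias = low_rank_dim                # b
    return projection + linear_weight + linear_bias


if __name__ == "__main__":
    n = loreft_param_count(LLAMA2_7B_HIDDEN_SIZE, low_rank_dim=4)  # 32,772 for d=4096, r=4
    share = n / NOMINAL_BASE_PARAMS
    print(f"trainable parameters: {n:,}")
    print(f"share of a ~7B model: {share:.6%}")
```

Only the intervention is trained; the billions of base-model weights stay frozen, which is where the savings come from.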

Nicholas starts by importing torch, Transformers, and PyReft, then loads the model with Transformers' AutoModelForCausalLM class, passing the arguments it needs to load correctly. Because Llama 2 is a gated model, he also supplies a Hugging Face access token, which grants access to the model repository. Next comes the tokenizer, which converts text into the tokens the model consumes. Finally, a prompt template wraps each input in the format the chat model expects, so the base model's responses can be tested before any intervention is trained.
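The prompt template step can be illustrated with a small formatter. This is a minimal sketch assuming the publicly documented Llama 2 chat format with [INST] and <<SYS>> markers; the exact template used in the video may differ, and the default system prompt is a hypothetical placeholder. It omits the leading <s> token on the assumption that the Llama tokenizer adds it during tokenization.

```python
"""Minimal sketch of a Llama 2 chat prompt template.

Assumption: the video's exact template may differ; this follows the
documented Llama 2 chat format with a system prompt. The leading <s>
(BOS) token is omitted because the tokenizer normally adds it.
"""

# Hypothetical default system prompt, for illustration only.
DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."


def build_llama2_prompt(user_message: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> str:
    """Wrap a single user turn in Llama 2 chat markers."""
    return (
        "[INST] <<SYS>>\n"
        f"{system_prompt}\n"
        "<</SYS>>\n\n"
        f"{user_message} [/INST]"
    )


if __name__ == "__main__":
    prompt = build_llama2_prompt("Who are you?")
    print(prompt)
```

The resulting string would then be tokenized (for example with `tokenizer(prompt, return_tensors="pt")` in Transformers) and passed to `model.generate` to check the base model's answer before training.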

With the model loaded, Nicholas turns to PyReft's intervention configuration. He specifies the layer to intervene on, the component of that layer whose representations are edited, and the low-rank dimension of the intervention. The intervention type is LoReFT (Low-rank Linear Subspace ReFT), implemented in PyReft as LoreftIntervention, which takes the embedding dimension (the model's hidden size) and the low-rank dimension as arguments. With the configuration in place, the PyReft fine-tuning run is ready to rein in the unruly LLM.
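LoReFT, the intervention type configured in this step, edits a hidden state h only inside a low-rank subspace. In the PyReft README this is set up as `pyreft.LoreftIntervention(embed_dim=..., low_rank_dimension=...)` inside a `pyreft.ReftConfig` alongside the layer and component. The sketch below does not use PyReft; it reimplements only the math from the ReFT paper, phi(h) = h + R^T(Wh + b - Rh), in plain Python with toy sizes chosen for illustration.

```python
"""Pure-Python sketch of the LoReFT intervention from the ReFT paper:

    phi(h) = h + R^T (W h + b - R h)

h is a hidden state of size embed_dim (d); R and W are low_rank_dim x d
matrices (R is meant to have orthonormal rows) and b has low_rank_dim entries.
This illustrates the formula only; it is not PyReft's implementation.
"""

from typing import List

Vector = List[float]
Matrix = List[List[float]]  # stored as a list of rows


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply an (r x d) matrix by a length-d vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose_matvec(m: Matrix, v: Vector) -> Vector:
    """Compute m^T v for an (r x d) matrix m and a length-r vector v."""
    d = len(m[0])
    return [sum(m[i][j] * v[i] for i in range(len(m))) for j in range(d)]


def loreft(h: Vector, r_proj: Matrix, w: Matrix, b: Vector) -> Vector:
    """Edit h only inside the subspace spanned by the rows of r_proj."""
    target = [wh + bi for wh, bi in zip(matvec(w, h), b)]  # W h + b
    current = matvec(r_proj, h)                            # R h
    delta = [t - c for t, c in zip(target, current)]
    correction = transpose_matvec(r_proj, delta)           # R^T (W h + b - R h)
    return [hi + ci for hi, ci in zip(h, correction)]


if __name__ == "__main__":
    # Toy sizes: embed_dim d = 3, low_rank_dim r = 1.
    h = [1.0, 2.0, 3.0]
    R = [[1.0, 0.0, 0.0]]  # one orthonormal row: the first coordinate axis
    W = [[0.0, 0.0, 0.0]]
    b = [5.0]
    print(loreft(h, R, W, b))  # [5.0, 2.0, 3.0]: only the first coordinate changes
```

Because R has orthonormal rows, the edit replaces h's component along those rows with Wh + b and leaves everything orthogonal to them untouched, which is why so few parameters need training.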

Image copyright Youtube

Watch How to hack a LLM using PyReft (using your own data for Fine Tuning!) on Youtube

Viewer Reactions for How to hack a LLM using PyReft (using your own data for Fine Tuning!)

Request for an LLM fine-tuning video

Explanation of slow vs fast tokenizers

Request for a video on fine-tuning an LLM on your own dataset with the Transformers Trainer

Comparison between learning from books vs online tutorials/courses

Inquiry about whether the process must be re-run if the original model changes

Request for a TensorFlow project using dataset generators

Request for a video on video classification

An error encountered while training the PyReft model

Inquiry about connecting a reinforcement learning model to a real environment

Inquiry about applying the process to Llama 3 8B Instruct
