Google Cloud Dynamic Workload Scheduler: Optimizing AI Hardware Usage

In this episode of Google Cloud Tech, the subject is artificial intelligence and the race for hardware to power it. Companies worldwide are clamoring for TPUs and GPUs, but supply often falls short. Enter Google Cloud's Dynamic Workload Scheduler (DWS), built to make those scarce accelerators easier to get. With its Calendar Mode, you can lock in resources for weeks or even months, perfect for marathon ML training sessions or periodic bursts of inference.

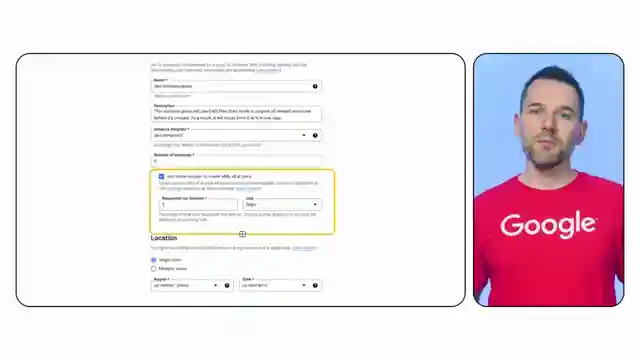

But wait, there's more! Flex Start Mode covers the shorter jobs, letting you request resources for up to seven days without breaking the bank. DWS integrates with Compute Engine, Batch, GKE, Vertex AI, and Cloud TPUs, so you can reach it from whichever of those services you already use.
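The split between the two modes can be sketched as a simple decision rule. This is only an illustration, not a Google Cloud API: `suggest_dws_mode` is a hypothetical helper, the seven-day ceiling is the figure quoted above, and treating a fixed start date as a reason to pick Calendar Mode is an assumption based on its reserve-ahead nature.

```python
from datetime import timedelta

# Assumption: the Flex Start ceiling is the seven days quoted in this article.
FLEX_START_MAX = timedelta(days=7)


def suggest_dws_mode(run_length: timedelta, needs_fixed_start: bool = False) -> str:
    """Hypothetical helper: suggest a DWS mode for a job.

    Returns "calendar" for long runs or runs that must begin at a known
    time, and "flex-start" for shorter runs that can wait for capacity.
    """
    if run_length <= timedelta(0):
        raise ValueError("run_length must be positive")
    if needs_fixed_start or run_length > FLEX_START_MAX:
        return "calendar"
    return "flex-start"


if __name__ == "__main__":
    print(suggest_dws_mode(timedelta(days=3)))   # short fine-tuning job
    print(suggest_dws_mode(timedelta(days=45)))  # long training run
```

In practice the choice also depends on price and on how flexible the start time is, so treat the rule as a starting point rather than a policy.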

Whether you're a fan of Compute Engine's managed instance groups or prefer Google Kubernetes Engine, DWS has got you covered. And for the TPU aficionados out there, the Queued Resources interface is all-or-nothing: you get the full set of resources you asked for or none at all. It's like having a personal AI concierge for your compute requests.
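The "full set or nothing" behavior can be pictured with a toy model. The sketch below is not the Queued Resources API; `AcceleratorPool` and `try_allocate` are hypothetical names, and the pool is just a counter standing in for available capacity.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorPool:
    """Hypothetical stand-in for a pool of free accelerator chips."""

    free_chips: int

    def try_allocate(self, requested: int) -> int:
        """Grant the whole request or nothing; never a partial set."""
        if requested <= 0:
            raise ValueError("requested must be positive")
        if requested > self.free_chips:
            return 0  # not enough capacity: the request stays unfulfilled
        self.free_chips -= requested
        return requested


if __name__ == "__main__":
    pool = AcceleratorPool(free_chips=8)
    print(pool.try_allocate(16))  # too large for the pool: nothing granted
    print(pool.try_allocate(8))   # fits: the full set is granted
```

The point of this design is that a distributed training job that needs every chip to start never ends up holding, and paying for, a partial allocation it cannot use.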

Image copyright YouTube


Watch Dynamic Workload Scheduler for AI workloads on YouTube

Viewer Reactions for Dynamic Workload Scheduler for AI workloads

High quality content

AI explainer videos available on the channel
