Decoding Large Language Models: Predicting Text with Transformers

- Authors

- Published on

In this riveting exploration, the 3Blue1Brown team delves into the fascinating world of large language models like GPT-3, which generate text by predicting the next word. It is a high-stakes game of probability: rather than committing to a single answer, the model assigns a probability to every possible next word. These models, with their mind-boggling number of parameters, are trained on vast amounts of text. The text used to train GPT-3 is so large that a human reading it non-stop, around the clock, would need over 2600 years to get through it all. The parameters start out random, so an untrained model produces gibberish. Backpropagation then refines them over many examples until the predictions become steadily more accurate. It's like tuning the dials on a massive machine, tweaking parameters to enhance the model's word-guessing prowess.
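To make the "game of probability" concrete, here is a minimal Python sketch. It is a toy, not how GPT-3 is actually built: a bigram model whose only parameters are one row of scores per previous word. Softmax turns each row into a probability for every candidate next word. Each training step nudges the scores using the gradient of the cross-entropy loss, which is the same kind of update that backpropagation computes layer by layer in a real network. The vocabulary, corpus, learning rate, and epoch count are made-up assumptions.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and training text (not from the video).
vocab = ["the", "cat", "sat", "on", "mat"]
index = {w: i for i, w in enumerate(vocab)}
corpus = "the cat sat on the mat".split()

# Parameters: one row of scores (logits) per previous word, all starting at zero.
V = len(vocab)
logits = [[0.0] * V for _ in range(V)]

def train(epochs=200, lr=0.5):
    """Gradient descent on the cross-entropy loss of each (previous, next) word pair."""
    for _ in range(epochs):
        for prev, nxt in zip(corpus, corpus[1:]):
            row = logits[index[prev]]
            probs = softmax(row)
            # d(loss)/d(logit_j) = probs[j] - 1 for the true next word, probs[j] otherwise.
            for j in range(V):
                target = 1.0 if j == index[nxt] else 0.0
                row[j] -= lr * (probs[j] - target)

def next_word_probs(prev):
    """Probability the model assigns to every vocabulary word following `prev`."""
    return dict(zip(vocab, softmax(logits[index[prev]])))

train()
print(next_word_probs("the"))  # "cat" and "mat" end up sharing most of the probability
```

Because "the" is followed by both "cat" and "mat" in the toy corpus, the trained model splits its probability between them instead of picking one. This mirrors, on a tiny scale, the behaviour the paragraph describes.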

The sheer scale of computation involved in training these language models is nothing short of jaw-dropping. Even at one billion additions and multiplications every second, it would take well over 100 million years to complete all the operations required to train the largest models. But wait, there's more! Pre-training is just the beginning. Chatbots also undergo reinforcement learning with human feedback to fine-tune their predictions. Workers flag unhelpful or problematic predictions, and their corrections further adjust the model's parameters, making it more likely to give the responses users prefer. It's a continuing cycle of refinement that makes these AI assistants more adept at understanding and responding to people.
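Running the "well over 100 million years" figure backwards shows how many operations the claim implies. This back-of-the-envelope sketch assumes a 365.25-day year. It treats 100 million years as a lower bound, so the result is a floor and not a measured count.

```python
# Back-of-the-envelope: what total operation count does the claim imply?
OPS_PER_SECOND = 1e9                       # one billion additions/multiplications per second
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # assumption: 365.25-day (Julian) year
YEARS = 100e6                              # "well over 100 million years", used as a floor

implied_ops = OPS_PER_SECOND * SECONDS_PER_YEAR * YEARS
print(f"at least {implied_ops:.2e} operations")
```

That works out to more than 3 × 10^24 additions and multiplications.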

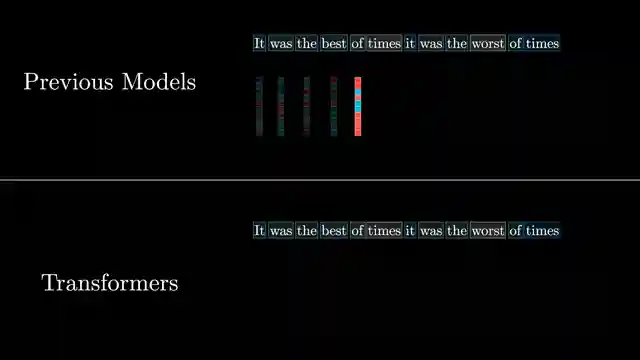

Enter the transformer, a groundbreaking architecture introduced by a team of researchers at Google in 2017. Earlier language models typically processed text one word at a time. Transformers instead process it in parallel, soaking in all the information at once. They associate each word with a list of numbers. They then repeatedly refine these numerical encodings based on context using two kinds of operations. Attention lets the lists of numbers exchange information with one another, and feed-forward neural network layers give the model extra capacity to store patterns about language. The goal? To enrich the encodings with enough context to make an accurate prediction of what word comes next in a passage. While researchers design the framework for these models, their specific behavior emerges from how the parameters are tuned during training. That makes it a daunting task to decipher exactly why a model makes a given prediction. Despite the complexity, the predictions generated by these large language models are remarkably fluent, captivating, and undeniably useful.
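The attention step can be sketched with the scaled dot-product formula from the 2017 transformer paper, softmax(QK^T / sqrt(d_k)) V. It is written here in plain Python for readability. Each word's vector is projected into a query, a key, and a value. Every query is then scored against every key, and the softmax-weighted average of the values becomes that word's context-refined vector. The embeddings and projection matrices in the example are hypothetical, and the sketch is deliberately simplified. Real models learn these matrices, run many attention heads in parallel, and add the result back to the original vector. GPT-style models also mask out later words.

```python
import math

def softmax(xs):
    """Convert raw scores into weights that sum to 1 (numerically stable)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def self_attention(embeddings, W_q, W_k, W_v):
    """Scaled dot-product self-attention (single head, no masking)."""
    queries = [matvec(W_q, e) for e in embeddings]
    keys = [matvec(W_k, e) for e in embeddings]
    values = [matvec(W_v, e) for e in embeddings]
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is each position to this one? Scale by sqrt(d_k), then normalize.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The refined vector is the weighted average of all value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs

# Hypothetical 2-D embeddings for a three-word context, with identity projections.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
refined = self_attention(embeddings, identity, identity, identity)
print(refined)
```

Because every output is a weighted average, each refined vector blends information from the whole context, weighted by how strongly its query matches each key.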

Image copyright YouTube

Watch Large Language Models explained briefly on YouTube

Viewer Reactions for Large Language Models explained briefly

- Viewers appreciate the clear and concise explanations provided in the video
- The video is seen as a great foundation for understanding other concepts in the field
- Positive feedback on the visuals and animations used in the video
- Requests for the video to be included at the start of a deep learning playlist
- Some viewers express how the video has helped them in their learning journey in AI and machine learning
- Comments on the usefulness of the video for beginners in the field
- Mention of the Computer History Museum and memories associated with it
- Appreciation for the simplicity and approachability of the explanations
- Requests for further clarification on certain aspects, such as the distinction between LLMs and AI
- Some viewers share personal stories of struggles with math and how resources like this video have helped them in their learning journey
