Unveiling Adversarial Examples: AI's Battle Against Deception

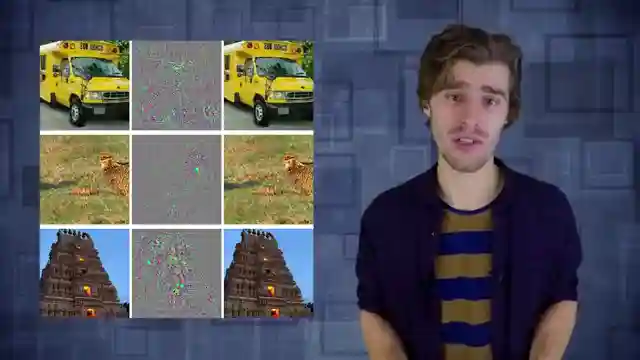

In this episode from Arxiv Insights, the video digs into adversarial examples in neural networks. These carefully crafted inputs look ordinary to a human, yet they fool even sophisticated classifiers. The examples range from a picture of a temple that a classifier labels as an ostrich once a barely visible perturbation is added, to 3D-printed glasses that throw off facial recognition software. It's a high-stakes game of cat and mouse between attackers and defenders, and for now the attackers often come out on top.

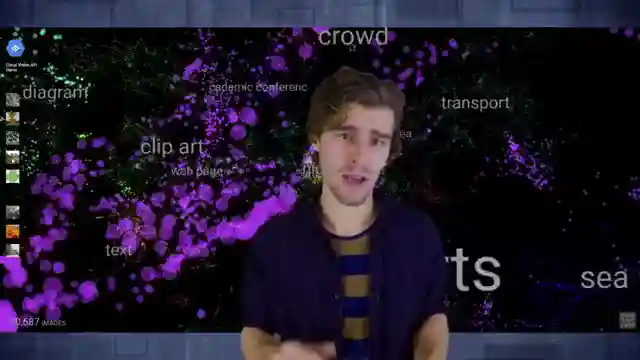

Using techniques like gradient descent on the input and the fast gradient sign method, the video demonstrates how adversarial examples are generated: instead of adjusting the weights to lower the loss, the attacker adjusts the input itself to push the network toward an incorrect classification. What's truly alarming is that these attacks aren't limited to one type of network architecture. They can deceive a range of models, from vanilla networks to recurrent networks and beyond. That adaptability is what makes adversarial examples such a formidable foe, even for advanced systems.
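To make the fast gradient sign method concrete, here is a minimal sketch on a tiny logistic-regression classifier rather than a deep network. The weights, input, and `eps` value are hand-picked, hypothetical numbers chosen for illustration; they are not taken from the video.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(w, b, x):
    """Return the predicted class (0 or 1) of a logistic-regression classifier."""
    return int(sigmoid(w @ x + b) > 0.5)

def input_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x (not the weights)."""
    return (sigmoid(w @ x + b) - y) * w

def fgsm(w, b, x, y, eps):
    """Take one step of size eps along the sign of the input gradient."""
    return x + eps * np.sign(input_gradient(w, b, x, y))

# Hypothetical, hand-picked weights and input (assumptions for this sketch).
w = np.array([1.0, -2.0, 0.5, 1.5])
b = 0.0
x = np.array([0.2, -0.1, 0.3, 0.1])
y = 1.0    # true label
eps = 0.2  # maximum change allowed per input feature

x_adv = fgsm(w, b, x, y, eps)
print("clean prediction:      ", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
print("largest feature change:", np.max(np.abs(x_adv - x)))
```

No feature moves by more than `eps`, yet the logit shifts by `eps` times the sum of the absolute weights (1.0 here), which is enough to flip the prediction. With thousands of input pixels, the same effect can be achieved with a much smaller per-pixel change.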

But the plot thickens: hiding a model's gradients or deploying a separate detection network is not a foolproof defense. Adversarial examples generalize across models, so an attacker can craft them against a substitute model and still have a good chance of fooling a target whose internals stay hidden. That cross-model generalization makes shielding neural networks a daunting task. The video goes on to explore proactive strategies for making networks more resilient, but the battle against adversarial examples is far from over.
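The cross-model generalization described above can be illustrated with a toy experiment. This is a sketch under strong assumptions: two logistic-regression models stand in for neural networks, the data are synthetic Gaussian blobs, and all names and hyperparameters (`make_data`, `shift`, `eps`, and so on) are hypothetical. The attack only ever reads the gradients of model A, then the perturbed inputs are shown to model B.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n, d=20, shift=0.5):
    """Two Gaussian blobs: label 1 centred at +shift, label 0 at -shift."""
    y = rng.integers(0, 2, size=n).astype(float)
    x = rng.normal(size=(n, d)) + shift * (2.0 * y[:, None] - 1.0)
    return x, y

def train_logreg(x, y, steps=300, lr=0.1):
    """Plain batch gradient descent on the mean cross-entropy loss."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        w -= lr * (x.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

def accuracy(w, b, x, y):
    return float(np.mean(((x @ w + b) > 0) == (y == 1)))

def fgsm_batch(w, b, x, y, eps):
    """FGSM for every row of x, using only the gradients of the model (w, b)."""
    p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
    grad = (p - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad)

# Models A and B are trained on disjoint samples and never share parameters.
w_a, b_a = train_logreg(*make_data(500))
w_b, b_b = train_logreg(*make_data(500))
x_test, y_test = make_data(500)

# Craft the attack against A only, then evaluate it on B.
x_adv = fgsm_batch(w_a, b_a, x_test, y_test, eps=0.5)
clean_b = accuracy(w_b, b_b, x_test, y_test)
adv_b = accuracy(w_b, b_b, x_adv, y_test)
print(f"model B accuracy on clean inputs:      {clean_b:.2f}")
print(f"model B accuracy on inputs built on A: {adv_b:.2f}")
```

Model B's accuracy drops sharply even though its gradients were never exposed. Both models learn similar decision boundaries from the same data distribution, which is why keeping gradients secret does not stop the attack in this toy setting. Real networks are far less tidy, so this shows the mechanism only and says nothing about transfer rates in practice.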

Image copyright YouTube

Watch 'How neural networks learn' - Part II: Adversarial Examples on YouTube

Viewer Reactions for 'How neural networks learn' - Part II: Adversarial Examples

Viewers praise the channel for its expert content on Neural Networks and Deep Learning

Requests for more videos on other types of neural networks, such as RNNs and CNNs

Suggestions to include links to research papers in the video descriptions

Speculation on why humans may not respond the same way to adversarial examples as AI

Comparisons between human biological networks and artificial neural networks

Appreciation for the unique aspects of AI compared to naturally evolved organisms

Questions about the existence of adversarial examples for the human brain

Comparisons between adversarial examples in AI and human cognition/emotion manipulation

Some viewers find the presenter's movements distracting

The importance of including a "Complement of a Set" in training data for better generalization in neural networks
