Mastering Variational Autoencoders: Unveiling Disentangled Data Representation

- Authors

- Published on

- Published on

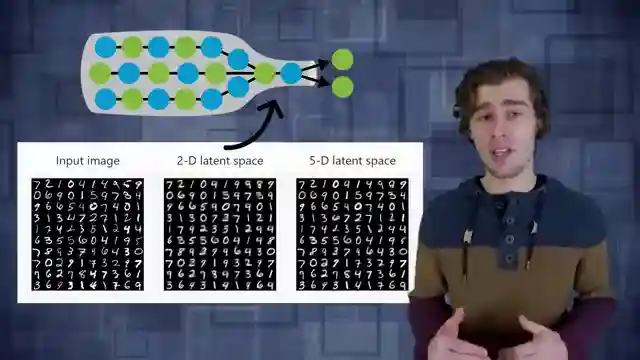

In this riveting episode of Arxiv Insights, the team takes us on a thrilling journey into the world of variational autoencoders. These cutting-edge tools in machine learning are like the James Bond of data compression, taking high-dimensional information and squeezing it down into a sleek, lower-dimensional space. It's like fitting a supercar engine into a compact city car - powerful and efficient.

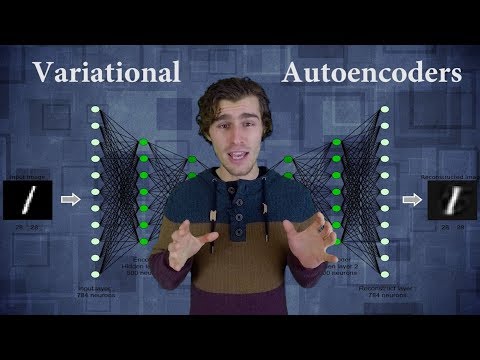

But before we dive into the mechanics of variational autoencoders, we get a crash course in their predecessor, normal autoencoders. Picture this: an encoder and decoder working together like a dynamic duo, compressing and reconstructing data with finesse. Variational autoencoders kick things up a notch by introducing a distribution-based bottleneck, adding a touch of mystery and intrigue to the process. It's like transforming a regular spy into a suave secret agent with a license to sample.

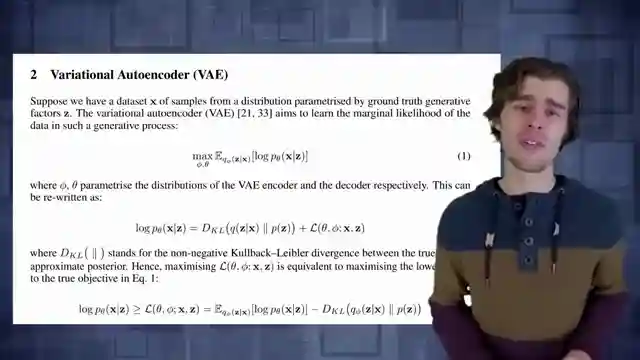

The training regimen for variational autoencoders involves a complex dance of reconstruction loss and KL divergence, ensuring that the latent distribution stays on point. To tackle the tricky issue of backpropagation post-sampling, the team unveils the ingenious reparameterization trick, separating trainable parameters from stochastic nodes like a magician pulling a rabbit out of a hat. And just when you thought things couldn't get any cooler, along comes the concept of disentangled variational autoencoders, promising to untangle the web of latent variables for a clearer, more focused data representation. It's like upgrading from a regular sports car to a Formula 1 racer, precision-engineered for top performance.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Variational Autoencoders on Youtube

Viewer Reactions for Variational Autoencoders

Variational Autoencoders explanation starts at 5:40

Beta-VAE enforces sparse representation

Gradients cannot be pushed through a sampling node

Importance of the reparameterization trick

Request for more videos on various research papers in AI and robotics

Use of disentangle VAE for time series data generations

Discussion on the prior distribution in VAEs

Diffusion models as a plot twist

Mention of KL divergence

Appreciation for clear and comprehensive explanations

Related Articles

Mastering Animation Creation with Texture Flow: A Comprehensive Guide

Discover the intricate world of creating mesmerizing animations with Texture Flow. Explore input settings, denoising steps, and texture image application for stunning visual results. Customize animations with shape controls, audio reactivity, and seamless integration for a truly dynamic workflow.

Unveiling Human Intuition: The Power of Priors in Gaming

Discover how human intuition outshines cutting-edge AI in solving complex environments. Explore the impact of human priors on gameplay efficiency and the limitations of reinforcement learning algorithms. Uncover the intricate balance between innate knowledge and adaptive learning strategies in this insightful study by Arxiv Insights.

Unveiling AlphaGo Zero: Self-Learning AI Dominates Go

Discover the groundbreaking AlphaGo Zero by Google DeepMind, a self-learning AI for Go. Using a residual architecture and Monte Carlo tree search, it outshines predecessors with unparalleled strategy and innovation.

Unveiling Neural Networks: Feature Visualization and Deep Learning Insights

Arxiv Insights delves into interpreting neural networks for critical applications like self-driving cars and healthcare. They explore feature visualization techniques, music recommendation using deep learning, and the Deep Dream project, offering a captivating journey through the intricate world of machine learning.