Unleashing Power: Comino Grando Server, Qwen 72B, Drones, and AI Rights

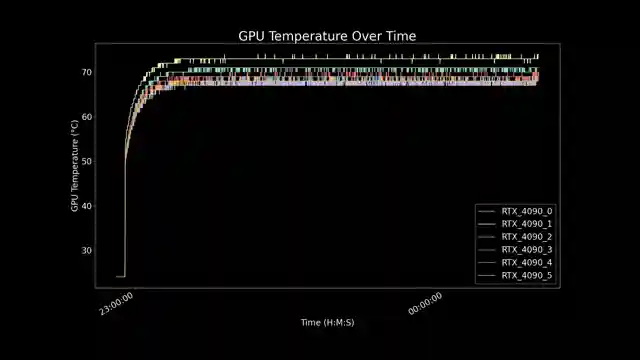

In this thrilling episode, we dive into the heart of the Comino Grando server, a powerhouse machine boasting not one, not two, but a staggering six RTX 4090 GPUs crammed inside. How on earth did they manage to fit these beasts in a single casing? The secret lies in Comino's water cooling system, which keeps those GPUs running smooth and cool. And let me tell you, folks, this machine is all about performance, delivering top-tier inference capabilities that'll make your head spin.

But why stuff consumer-grade 4090 GPUs into a server build, you ask? Well, it's all about that sweet spot between price and performance. The 4090s may lack a few bells and whistles, but when it comes to bang for your buck in the world of inference, they're hard to beat. And speaking of performance, let's not forget the impressive Qwen 72B language model, tackling tasks with finesse and flair. It may not excel in all areas, but when it comes to information retrieval, it's a true champion.

Now, let's talk drones. Picture this: programming quadcopters with image-to-depth models, a challenge fit for the daring. The Ryze Tello drone takes center stage, showcasing its capabilities in the realm of robotics. And as we push the boundaries of technology, one thing becomes clear: the future is bright for AI. From debating AI rights to exploring the limits of language models, the possibilities are endless. So buckle up, gearheads, because the world of AI is revving its engines, ready to take us on a wild ride.

Image copyright YouTube

Watch INFINITE Inference Power for AI on YouTube

Viewer Reactions for INFINITE Inference Power for AI

Positive feedback on the neural networks hardcover book

Speculation on sentdex accidentally creating AGI

Discussion on powerful server specifications causing sleepless nights

Appreciation for learning machine learning from the channel

Mention of Large Language Models communicating at a hackathon

Interest in Neuromorphic Hypergraph database on photonic compute engines

Doubts about water cooling efficiency compared to metal for heat dissipation

Speculation on language models functioning as complex look-up tables

Comparison of personal laptop to the discussed server's power

Analysis and critique of the pricing and components of the server
