Building a 35,000-Sample Wall Street Bets Dataset: Fine-Tuning, Nvidia Giveaway, and Data Access

In this sentdex video, the goal is to build a dataset of 35,000 samples drawn from the Wall Street Bets subreddit. Each sample captures a conversation between multiple speakers followed by a response from a bot, so the data reflects how real threads unfold. The team then fine-tunes on this dataset, aiming to improve on the results of previous iterations.
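The sample structure described above can be sketched in a few lines. This is only an illustration, not the video's actual code: it assumes each comment is a dict with `parent_id`, `author`, and `body` fields (as in common Reddit comment dumps), and the `Speaker_N` and `BOT` labels, along with the helper names `build_chain` and `format_sample`, are hypothetical.

```python
from typing import Dict, List


def build_chain(leaf_id: str, comments: Dict[str, dict]) -> List[dict]:
    """Walk parent links from a leaf comment up to the top of its thread."""
    chain = []
    current = comments.get(leaf_id)
    while current is not None:
        chain.append(current)
        parent = current["parent_id"]
        # Assumption: comment parents carry a "t1_" prefix; anything else
        # (for example "t3_" for the submission) ends the chain.
        if not parent.startswith("t1_"):
            break
        current = comments.get(parent[3:])
    chain.reverse()  # oldest comment first
    return chain


def format_sample(chain: List[dict]) -> str:
    """Render a chain as text, treating the final reply as the bot's turn."""
    speakers: Dict[str, str] = {}
    lines = []
    last_index = len(chain) - 1
    for i, comment in enumerate(chain):
        if i == last_index:
            label = "BOT"  # hypothetical label for the reply to be learned
        else:
            # Reuse the same label when an author speaks more than once.
            label = speakers.setdefault(
                comment["author"], f"Speaker_{len(speakers) + 1}"
            )
        lines.append(f"{label}: {comment['body']}")
    return "\n".join(lines)
```

Treating the last comment in each chain as the bot's turn is one simple way to turn a multi-party thread into a prompt and response pair; the video may format its samples differently.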

The video also announces a giveaway: Nvidia is giving away an RTX 4080 Super at its GPU Technology Conference (GTC). Attendees can catch up on recent advances, including the combination of robotics with generative AI. The channel founder also looks back on working with Reddit data years ago and on how far chatbots and language models have come since then.

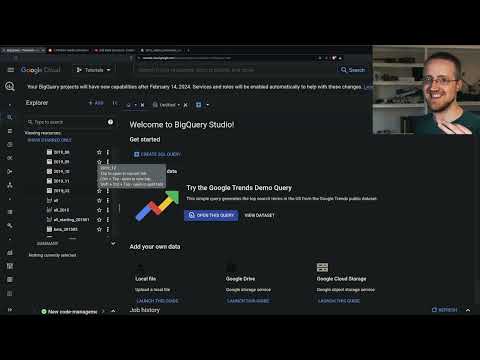

For Reddit data sources, the team looks at torrents, archive.org, and BigQuery, which together offer terabytes of comments dating up to 2019. They export the data to Google Cloud Storage as compressed JSON to keep it manageable. The end goal is to upload the dataset to Hugging Face so that enthusiasts and researchers can use it. To speed up processing, the team plans to decompress the files ahead of time instead of decompressing them during each processing run.
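As a rough sketch of the pre-decompression step, the snippet below unpacks an exported file once and then streams records from the plain-text copy. It assumes the export is gzip-compressed, newline-delimited JSON; the article only says "JSON format with compression", so the codec, the file naming, and the helper names `predecompress` and `iter_comments` are assumptions.

```python
import gzip
import json
import shutil
from pathlib import Path
from typing import Iterator


def predecompress(src: Path, dst_dir: Path) -> Path:
    """Decompress a .gz file once so later passes read plain text."""
    dst_dir.mkdir(parents=True, exist_ok=True)
    # "part-000.json.gz" becomes "part-000.json"
    dst = dst_dir / src.with_suffix("").name
    if not dst.exists():  # skip work already done on a previous run
        with gzip.open(src, "rb") as f_in, open(dst, "wb") as f_out:
            shutil.copyfileobj(f_in, f_out)
    return dst


def iter_comments(path: Path) -> Iterator[dict]:
    """Yield one comment dict per non-empty line of a JSON Lines file."""
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                yield json.loads(line)
```

Decompressing up front trades disk space for speed: every later pass over the data skips the gzip step, which helps most when the same files are scanned repeatedly while filtering.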

Image copyright YouTube

Watch Building an LLM fine-tuning Dataset on YouTube

Viewer Reactions for Building an LLM fine-tuning Dataset

Viewer expresses gratitude for learning ML and coding from the channel

Viewer shares their experience creating a binary classification model for breast cancer

Viewer mentions starting a tech startup for logistics

Viewer shares that they built a scraper for WSB comments

Viewer appreciates the fun learning process provided by the channel

Viewer discusses cleaning and uploading conversation data for others to use

Viewer points out potential issues with data filtering for model training

Viewer plans on creating a comprehensive guide for ComfyUI and Flux

Viewer inquires about completing the Python from scratch series

Viewer requests a video on meta-learning with examples
