Revolutionizing Text Generation: IBM's Speculative Decoding for Lightning-Fast Models

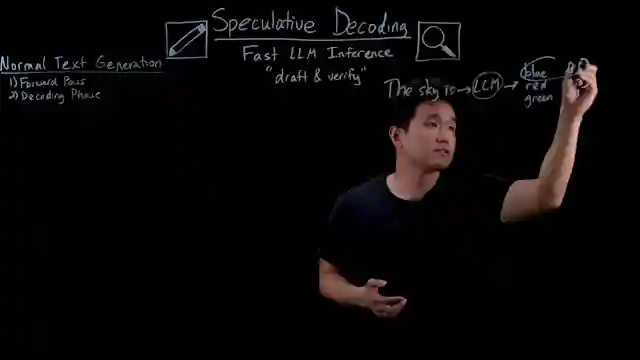

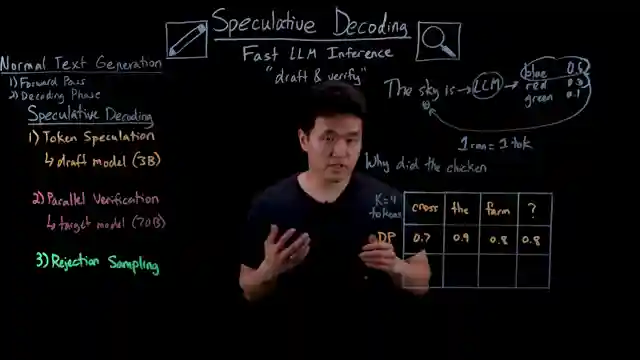

This IBM Technology episode explains speculative decoding, a technique for speeding up inference in large language models. A smaller, faster draft model speculates on the next several tokens, and a larger target model verifies those guesses in parallel. It works like a fast writer drafting ahead while an editor checks each word and corrects the first one that is wrong. When the draft's guesses are accepted, the system can produce several tokens in roughly the time a regular LLM takes to produce one.

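The process has three steps: token speculation, parallel verification, and rejection sampling. First, the draft model generates several draft tokens and records the probability it assigned to each. Next, the target model scores all of the draft positions in a single forward pass, producing its own probability for each token. Finally, rejection sampling compares the two. In the standard formulation, a draft token is kept when the target model finds it at least as likely as the draft model did, and is otherwise kept only with probability equal to the ratio of the target's probability to the draft's. At the first rejection, the remaining draft tokens are discarded and the target model supplies the replacement token. Because every emitted token is either approved or produced by the target model, output quality matches what the target model would generate on its own, and the gain is purely in speed.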
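The three steps can be sketched in a few lines of Python. This is a minimal illustration and not the code from the video: `toy_dist`, `draft_model`, and `target_model` are hypothetical stand-ins that return fixed probability tables over a four-token vocabulary, and the acceptance rule is the standard speculative sampling rule (accept with probability `min(1, p/q)`, otherwise resample from the normalized positive part of `p - q`). In a real system the verification step would be one batched forward pass of the target model. Here it is simulated with a loop.

```python
import random

VOCAB = 4  # toy vocabulary size (assumption for illustration)

def toy_dist(context, sharpness):
    """Hypothetical model stand-in: a fixed distribution derived from the context."""
    favored = sum((i + 1) * t for i, t in enumerate(context)) % VOCAB
    weights = [1.0] * VOCAB
    weights[favored] += sharpness
    total = sum(weights)
    return [w / total for w in weights]

def draft_model(context):
    return toy_dist(context, 2.0)   # small model: less confident

def target_model(context):
    return toy_dist(context, 4.0)   # large model: more confident

def sample(dist, rng):
    return rng.choices(range(len(dist)), weights=dist, k=1)[0]

def speculative_step(context, k, rng):
    """Return between 1 and k + 1 new tokens for one draft/verify round."""
    # Step 1: token speculation. The draft model proposes k tokens.
    drafted, q_dists = [], []
    ctx = list(context)
    for _ in range(k):
        q = draft_model(ctx)
        tok = sample(q, rng)
        drafted.append(tok)
        q_dists.append(q)
        ctx.append(tok)

    # Step 2: parallel verification. The target model scores all k + 1
    # positions (simulated here with a loop instead of one forward pass).
    p_dists = [target_model(list(context) + drafted[:i]) for i in range(k + 1)]

    # Step 3: rejection sampling.
    accepted = []
    for i, tok in enumerate(drafted):
        p, q = p_dists[i], q_dists[i]
        if rng.random() < min(1.0, p[tok] / q[tok]):
            accepted.append(tok)
            continue
        # First rejection: resample from the normalized positive part of
        # (p - q), then discard the remaining draft tokens.
        residual = [max(0.0, pi - qi) for pi, qi in zip(p, q)]
        accepted.append(sample(residual, rng))
        return accepted

    # Every draft token was accepted: take one extra token from the target.
    accepted.append(sample(p_dists[k], rng))
    return accepted

rng = random.Random(0)
print(speculative_step([1, 2], k=4, rng=rng))
```

Each call returns at least one token, so the loop always makes progress, and the resampling rule is what keeps the distribution of emitted tokens equal to the target model's.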

By running both models together and giving each the role it suits, speculative decoding reduces latency, lowers compute cost, and raises inference speed. It also makes better use of GPU resources: generating one token at a time is typically limited by memory bandwidth, so verifying several draft tokens in one pass uses compute that would otherwise sit idle. The video presents speculative decoding as an LLM optimization in which speed and quality go hand in hand.
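A rough sense of the possible gain comes from a commonly used back-of-the-envelope estimate. The sketch below assumes each draft token is accepted independently with the same probability `alpha`, and that one draft-model run costs a fraction `cost_ratio` of one target-model run. Both are simplifying assumptions, and the function names and sample numbers are hypothetical, not figures from the video.

```python
def expected_tokens_per_pass(alpha, k):
    """Expected tokens emitted per target-model pass with k draft tokens."""
    if alpha >= 1.0:
        return float(k + 1)  # every draft token accepted, plus the bonus token
    # Geometric series 1 + alpha + alpha^2 + ... + alpha^k
    return (1 - alpha ** (k + 1)) / (1 - alpha)

def estimated_speedup(alpha, k, cost_ratio):
    """Estimated speedup over decoding with the target model alone."""
    # One round costs k draft runs plus one target run.
    return expected_tokens_per_pass(alpha, k) / (k * cost_ratio + 1)

# Illustrative inputs only: 80% acceptance, 4 draft tokens, draft 5% of target cost.
print(round(expected_tokens_per_pass(0.8, 4), 2))   # about 3.36 tokens per pass
print(round(estimated_speedup(0.8, 4, 0.05), 2))    # about 2.8x
```

The estimate shows the trade-off: a higher acceptance rate or a cheaper draft model increases the gain, while drafting too many tokens with a low acceptance rate wastes draft compute.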

Image copyright YouTube

Watch "Faster LLMs: Accelerate Inference with Speculative Decoding" on YouTube
