Revolutionizing Text Generation: Discrete Diffusion Models Unleashed

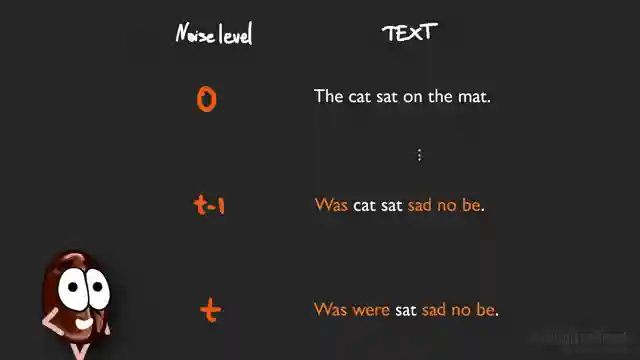

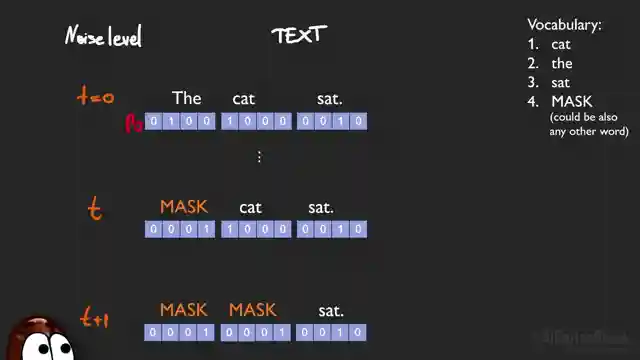

In this thrilling episode of AI Coffee Break, the team delves into discrete diffusion models, a promising new approach to text generation. Forget the incoherent word salads of earlier diffusion-based text generators: these models are here to challenge the GPT dynasty of autoregressive models. Diffusion models have long reigned supreme in images and audio, but text has been their Everest, because text is made of discrete tokens rather than continuous values that can be gently perturbed with noise. The recipe stays the same. A forward diffusion process gradually corrupts the data, much like adding layers of noise to a picture (for text, this means randomly swapping tokens or replacing them with a mask token), and a backward process trains a model to undo that corruption. Adapted to discrete tokens, this recipe finally produces coherent text.
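For text, "adding noise" means corrupting discrete tokens rather than perturbing pixel values. The SEDD paper describes a uniform variant, in which tokens jump to random vocabulary entries, and an absorbing variant, in which they jump to a special MASK token. Below is a minimal sketch of the absorbing forward process, assuming a hypothetical linear noise schedule with `sigma_max = 5.0`; the names `mask_probability` and `forward_noise` are illustrative and not taken from the paper's code. Each token independently stays unmasked with probability exp(-sigma_bar(t)).

```python
import math
import random

MASK = "[MASK]"  # absorbing state that corrupted tokens jump to


def mask_probability(t: float, sigma_max: float = 5.0) -> float:
    """Probability that a token has been absorbed into MASK by time t in [0, 1].

    Hypothetical linear schedule: cumulative noise sigma_bar(t) = sigma_max * t,
    so a token survives unmasked with probability exp(-sigma_bar(t)).
    """
    return 1.0 - math.exp(-sigma_max * t)


def forward_noise(tokens: list[str], t: float, rng: random.Random) -> list[str]:
    """Corrupt each token independently: replace it with MASK or keep it."""
    p = mask_probability(t)
    return [MASK if rng.random() < p else tok for tok in tokens]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the corruption is reproducible
    sentence = "the cat sat on the mat".split()
    for t in (0.0, 0.1, 0.5, 1.0):
        print(f"t={t:.1f}:", " ".join(forward_noise(sentence, t, rng)))
```

Running it shows the sentence dissolving into MASK tokens as t grows. The backward model's job is to play this film in reverse, deciding which masked positions to fill in and with which tokens.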

Diving into the nitty-gritty, the team breaks down the diffusion equation, in which the token probabilities evolve through linear transformations (a transition-rate matrix applied to the probability vector) that inject noise during forward diffusion. The key to reversing the process in backward diffusion is the concrete score: the ratios between the probability of a candidate sequence and that of the current one, which are the "ratios of the data distribution" in the paper's title. A transformer learns to predict these ratios with finesse. It is trained with score entropy, a loss function that resembles cross-entropy, and once trained it denoises step by step to generate text. SEDD (Score Entropy Discrete Diffusion), the star of the show, reaches perplexities comparable to GPT-2, showcasing the potential of diffusion models in the text generation arena.
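To make the "ratios" concrete: in the SEDD paper the forward process evolves token probabilities by the linear equation $\frac{dp_t}{dt} = Q_t p_t$, and the model $s_\theta(x, t)$ outputs, for each neighbouring sequence $y$, an estimate of the concrete score $p_t(y)/p_t(x)$. Score entropy compares those estimates with the true ratios. The sketch below is a minimal illustration at a single state $x$, assuming a toy three-token distribution whose true ratios are known; the function names and the default weights of 1.0 are hypothetical. Real SEDD training never sees the true ratios: it optimizes a denoising variant that substitutes ratios of the known forward transition probabilities and weights each term by the transition rates.

```python
import math
from typing import Optional


def concrete_score(p: dict[str, float], x: str) -> dict[str, float]:
    """True ratios p(y) / p(x) for every state y != x (the concrete score at x)."""
    return {y: p[y] / p[x] for y in p if y != x}


def score_entropy(pred: dict[str, float], true: dict[str, float],
                  weights: Optional[dict[str, float]] = None) -> float:
    """Score entropy at a single state x.

    pred:    the model's positive ratio estimates s(x)_y for each y != x
    true:    the target ratios p(y) / p(x)
    weights: optional non-negative per-pair weights (hypothetical default 1.0)
    Each term s - r*log(s) + r*(log(r) - 1) is >= 0 and equals 0 iff s == r.
    """
    total = 0.0
    for y, s in pred.items():
        r = true[y]
        w = 1.0 if weights is None else weights[y]
        k = r * (math.log(r) - 1.0) if r > 0 else 0.0  # constant K(r) shifts the minimum to 0
        total += w * (s - r * math.log(s) + k)
    return total


if __name__ == "__main__":
    p = {"a": 0.5, "b": 0.3, "c": 0.2}  # toy distribution over three tokens
    target = concrete_score(p, "a")     # ratios 0.3/0.5 and 0.2/0.5
    print("perfect estimate:", score_entropy(target, target))
    print("naive estimate:  ", score_entropy({"b": 1.0, "c": 1.0}, target))
```

Each term is convex in the estimate and minimized exactly at the true ratio, so driving the loss to zero recovers the concrete score, while the $-r \log s$ term penalizes estimates that collapse toward zero.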

As the dust settles, it's clear that the authors have achieved a remarkable feat with SEDD, a model with 320 million parameters, comparable in size to GPT-2. The future holds tantalizing prospects as diffusion models edge closer to surpassing the reigning autoregressive champions in text generation. So buckle up, folks: the AI Coffee Break team leaves no stone unturned in this exhilarating journey through the realm of discrete diffusion models. Don't touch that dial, because the future of AI text generation is just getting started.

Image copyright Youtube

Watch Discrete Diffusion Modeling by Estimating the Ratios of the Data Distribution – Paper Explained on YouTube

Viewer Reactions for Discrete Diffusion Modeling by Estimating the Ratios of the Data Distribution – Paper Explained

Discussion on the relevance and efficiency of diffusion in text generation models

Question about using quantum superposition for probability distribution in text generating diffusion models

Skepticism about generating sequences in a non-sequential way

Inquiry into the computational efficiency of transformer-based LLMs

Confusion about the coherence of generated text

Interest in research directions in this area

Proposal for a DiT (Diffusion Transformer) architecture combining diffusion and transformers

Question about scaling laws in diffusion models

Comparison between diffusion models and other generative models for text generation

Excitement about the potential of generative models for images/videos
