Revolutionize AI Conversations with QLoRA: Speed, Efficiency, and Innovation

In this episode, the sentdex team explores QLoRA, a fine-tuning technique that builds on LoRA (Low-Rank Adaptation), a method from Microsoft Research, and uses it to inject personality and flair into AI conversations. LoRA freezes the base model and trains only small low-rank adapter matrices, which can reduce the number of trainable parameters by up to 10,000 times and makes fine-tuning faster and more efficient. QLoRA adds quantization of the frozen base model, which cuts memory requirements further and makes lightweight model training practical.
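To make the savings concrete, here is a rough back-of-the-envelope sketch. The hidden size (4096) and rank (8) are assumptions chosen for illustration, not values taken from the video, and the memory figures count model weights only, ignoring quantization constants, activations, and optimizer state. The overall reduction for a whole model depends on its size, the rank, and which layers receive adapters.

```python
# Back-of-the-envelope LoRA / QLoRA arithmetic (illustrative numbers only).

def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when a whole d_out x d_in weight matrix is tuned."""
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)

def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

D = 4096   # assumed hidden size of one projection matrix
RANK = 8   # assumed LoRA rank

full = full_params(D, D)
lora = lora_params(D, D, RANK)
reduction = full / lora

# Weights of a 13B-parameter model at 16-bit versus 4-bit precision.
mem_16bit = weight_memory_gb(13e9, 16)
mem_4bit = weight_memory_gb(13e9, 4)

print(f"full matrix: {full:,} params, adapter: {lora:,} params ({reduction:.0f}x fewer)")
print(f"13B weights: {mem_16bit:.1f} GB at 16-bit, {mem_4bit:.1f} GB at 4-bit")
```

For this single matrix the adapter is 256 times smaller than the full weight matrix, and storing the frozen weights in 4 bits instead of 16 cuts their footprint to a quarter.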

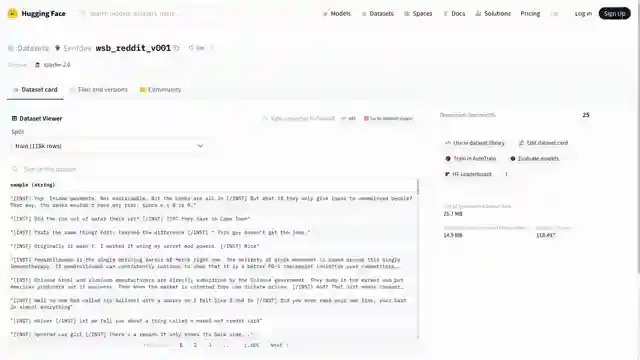

The team then fine-tunes a chatbot on Reddit data, showing that QLoRA can shift a model's style with relatively few samples. The training data format causes some problems along the way, but the resulting model still generates diverse and engaging text. The team also curates the training data carefully to filter out content that falls outside acceptable norms.
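The data preparation described above might look roughly like the sketch below. Everything in it is hypothetical: the `### Comment:` / `### Reply:` template, the `BLOCKLIST` contents, the helper names, and the sample pairs are invented for illustration and are not the format or filter used in the video.

```python
# Hypothetical sketch: turn (parent comment, reply) pairs into training text
# and drop pairs that contain blocklisted words.

BLOCKLIST = {"badword"}  # placeholder; a real curation list would be much larger

def is_acceptable(text: str, blocklist: set = BLOCKLIST) -> bool:
    """Return True if no blocklisted word appears in the text."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return not (words & blocklist)

def format_sample(parent: str, reply: str) -> str:
    """Render one pair in a fixed prompt/response template (assumed format)."""
    return f"### Comment:\n{parent.strip()}\n\n### Reply:\n{reply.strip()}"

def build_dataset(pairs):
    """Keep only acceptable pairs and format each as a single training string."""
    return [
        format_sample(parent, reply)
        for parent, reply in pairs
        if is_acceptable(parent) and is_acceptable(reply)
    ]

# Invented example pairs, not real Reddit data.
pairs = [
    ("What is everyone buying today?", "Calls, obviously."),
    ("Thoughts on the market?", "This contains badword so it is dropped."),
]

samples = build_dataset(pairs)
print(len(samples))
print(samples[0])
```

Keeping the template identical across every sample matters more than the exact markers chosen, since the fine-tuned model learns to continue whatever structure it sees.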

As the team digs deeper into the QLoRA training process, they share insights and recommendations for anyone who wants to try it. Starting from a comprehensive training notebook, they emphasize monitoring weight decay and tuning the process for the best results. Using an H100 server, the team gets strong results within hours, which underscores how fast and efficient QLoRA training is. The compact adapter produced by training is the standout result: because it is so small, it opens up many applications with minimal memory usage.
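Why the adapter is so compact can be seen in a toy version of the LoRA forward pass. This is a minimal pure-Python sketch under assumed dimensions (an 8 x 8 weight matrix, rank 2, scaling factor `alpha = 4`), not the training code from the video. The frozen weight `W` stays untouched, and only the two small matrices `A` and `B` would be trained and saved.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x): frozen base plus low-rank update."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d_out, d_in, rank, alpha = 8, 8, 2, 4  # toy sizes, assumed for illustration

W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]   # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)] # trainable
B = [[0.0] * rank for _ in range(d_out)]                                # trainable, zero init
x = [1.0] * d_in

y_base = matvec(W, x)
y_start = lora_forward(W, A, B, x, alpha, rank)  # B is zero, so this equals y_base

# Stand-in for training: nudge one entry of B and observe the output change.
B[0][0] = 1.0
y_tuned = lora_forward(W, A, B, x, alpha, rank)

adapter_size = rank * (d_in + d_out)  # numbers stored in the adapter
base_size = d_out * d_in              # numbers stored in the frozen matrix
print(adapter_size, base_size)
```

Because `B` starts at zero, the adapted model initially behaves exactly like the base model, and the saved adapter contains only `A` and `B`, which is why it stays small relative to the weights it modifies.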

Image copyright YouTube

Watch QLoRA is all you need (Fast and lightweight model fine-tuning) on YouTube

Viewer Reactions for QLoRA is all you need (Fast and lightweight model fine-tuning)

- Interest in having dedicated QLoRA models for specific historical figures
- Appreciation for the video's precision and content
- Desire for step-by-step tutorials and deeper insights into the process
- Excitement to test the WSB model
- Positive feedback on the effectiveness and natural responses of the 13B model
- Interest in QLoRA for LLMs and its potential applications
- Request for more models with personality and humor
- Questions about the application of LoRA and fine-tuning techniques
- Suggestions for training AI on specific content like Bill Burr's comedy routine
- Appreciation for the informative content and interest in using QLoRA for model training
