OpenAI Launches Developer APIs: Responses, Web Search, and Computer Use

- Authors

- Published on

In this video, Sam Witteveen breaks down OpenAI's new APIs for developers, which fill a gap in the company's offerings. The star of the show is the Responses API, a single endpoint that brings together a wide range of tools and settings, from image inputs to web search, and gives developers a simpler way to build on OpenAI's latest models. The existing Completions and Chat Completions APIs remain available, while the Assistants API is scheduled to be retired in mid-2026 as OpenAI consolidates its agent features around the Responses API.
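The sketch below shows what "one endpoint" means in practice. It builds the HTTP request for a plain text call to the Responses API using only Python's standard library, and it does not send the request. It assumes the `https://api.openai.com/v1/responses` endpoint, bearer-token authentication, and the `model`, `input` and `tools` body fields. The model name `gpt-4o`, the placeholder key and the helper `build_responses_request` are illustrative, not taken from the video. In practice most developers would use OpenAI's official SDK (`client.responses.create(...)` in Python) instead.

```python
import json
import urllib.request

# Responses API endpoint (assumed; check OpenAI's API reference).
RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_responses_request(api_key, model, prompt, tools=None):
    """Hypothetical helper: build, but do not send, a Responses API request.

    Plain text input and optional built-in tools (web search, file search, ...)
    all go to the same endpoint; only the JSON body changes.
    """
    body = {"model": model, "input": prompt}
    if tools:
        body["tools"] = tools
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_responses_request(
        "sk-placeholder", "gpt-4o", "Summarize the Responses API in one sentence."
    )
    print(req.get_method(), req.full_url)
    print(json.loads(req.data))
    # Sending it requires a real API key and network access:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

Adding a tool is just another entry in the `tools` list, so the same helper covers web search and file search calls.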

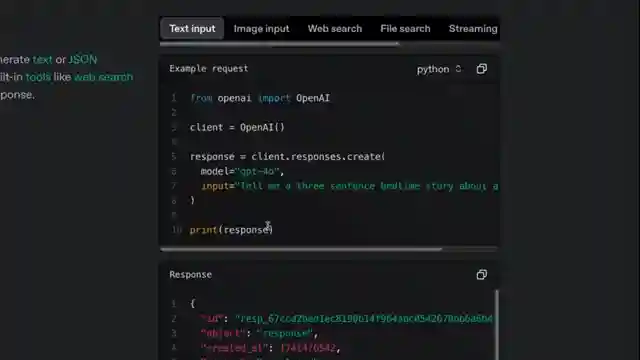

OpenAI's new Responses API is versatile: it supports text and image inputs, web search, file search, function calling, and reasoning models. Web search is the standout addition. It lets a model search the internet during a request and return a natural-language answer, with citations linking directly to the relevant articles. Pricing starts at $30 per 1,000 calls, and the rate varies with the search context size. That makes it an affordable option for developers who want live web results in their projects.
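As a rough illustration, the snippet below builds a web search tool entry for a Responses API request and estimates the per-call fee. It assumes the launch-era tool type `web_search_preview` and a `search_context_size` of `"low"`, `"medium"` or `"high"`; these names may have changed, so check OpenAI's current docs. The only price used is the article's $30 per 1,000 calls starting rate. Higher context sizes cost more, so the rate is left as a parameter, and the helper names are hypothetical.

```python
import json

# Context sizes accepted by the launch-era web search tool (assumed).
CONTEXT_SIZES = ("low", "medium", "high")


def web_search_tool(search_context_size="medium"):
    """Hypothetical helper: a web search entry for the Responses API `tools` list."""
    if search_context_size not in CONTEXT_SIZES:
        raise ValueError(f"search_context_size must be one of {CONTEXT_SIZES}")
    # "web_search_preview" was the tool type name at launch; it may have changed.
    return {"type": "web_search_preview", "search_context_size": search_context_size}


def web_search_cost(calls, usd_per_1000_calls=30.0):
    """Estimate the per-call tool fee only.

    The $30 default is the article's starting rate; larger context sizes
    cost more, so pass the rate that matches your model and context size.
    """
    return calls * usd_per_1000_calls / 1000


if __name__ == "__main__":
    body = {
        "model": "gpt-4o",
        "input": "What did OpenAI announce for developers this week?",
        "tools": [web_search_tool("low")],
    }
    print(json.dumps(body, indent=2))
    print(f"2,500 searches at $30/1,000: ${web_search_cost(2500):.2f}")  # $75.00
```

At the starting rate, each search costs $0.03, so 2,500 searches come to $75.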

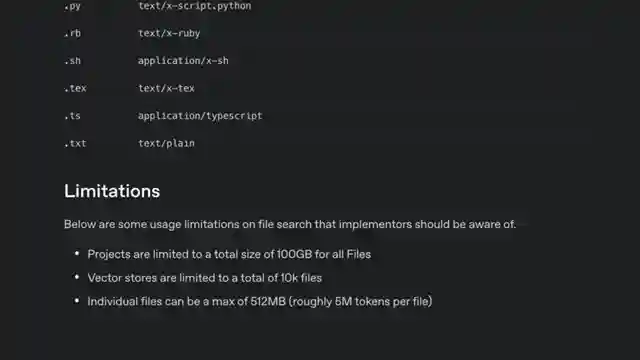

The file search tool lets models search through files that users have uploaded. It now supports metadata filtering and citations that point back to the source documents.

OpenAI also introduces Computer Use, the model behind its Operator agent. It takes a task and completes it by operating a web browser with internet access. Operator itself is currently available only to ChatGPT Pro subscribers in the United States, but bringing the same capability to developers shows OpenAI's push to make its most advanced agent tools accessible through its APIs.
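The helpers below sketch the tool entries for file search and Computer Use. The file search entry restricts results with a metadata filter. Field and type names (`file_search`, `vector_store_ids`, `max_num_results`, `filters` with `eq`/`and` filters, and the research-preview `computer_use_preview` tool with its display and environment settings) reflect our reading of OpenAI's launch documentation, not the video, so treat them as assumptions. The vector store ID and the `department`/`year` attributes are made-up placeholders. With Computer Use, the model does not drive a browser by itself. It returns actions such as clicks and typing, which your own code executes before reporting back with a screenshot. The tool entry is only the first piece of that loop.

```python
import json


def file_search_tool(vector_store_ids, max_num_results=5, filters=None):
    """Hypothetical helper: a file search entry for the Responses API `tools` list."""
    spec = {
        "type": "file_search",
        "vector_store_ids": list(vector_store_ids),
        "max_num_results": max_num_results,
    }
    if filters is not None:
        # Restrict results to files whose metadata attributes match.
        spec["filters"] = filters
    return spec


def eq_filter(key, value):
    """Comparison filter: the file attribute `key` must equal `value`."""
    return {"type": "eq", "key": key, "value": value}


def and_filter(*filters):
    """Compound filter: every sub-filter must match."""
    return {"type": "and", "filters": list(filters)}


def computer_use_tool(width=1024, height=768, environment="browser"):
    """Hypothetical helper: a Computer Use entry (launch-era research preview)."""
    return {
        "type": "computer_use_preview",
        "display_width": width,
        "display_height": height,
        "environment": environment,
    }


if __name__ == "__main__":
    # "vs_example123", "department" and "year" are made-up placeholders.
    tool = file_search_tool(
        ["vs_example123"],
        filters=and_filter(eq_filter("department", "legal"), eq_filter("year", 2024)),
    )
    print(json.dumps(tool, indent=2))
    print(json.dumps(computer_use_tool(), indent=2))
```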

Image copyright YouTube

Watch OpenAI - NEW API & Agent Tools Breakdown on YouTube

Viewer Reactions for OpenAI - NEW API & Agent Tools Breakdown

Tracing in the Agents SDK can be disabled with one line of code, and custom tracing options are available

The new API has interesting features

Preference for using Gemini and Claude over OpenAI models in apps

Excitement about building AI agents with OpenAI's tools

Curiosity about the Agents SDK and how it compares with other frameworks such as LangGraph

Claim that Operator is based on o1

Concerns about OpenAI using data from API calls

Comparison of OpenAI with Google and Anthropic

Lack of trust in Sam Altman and skepticism that the new API is a form of vendor lock-in

Question about whether all files must be uploaded to OpenAI's servers to use the File Search API