OpenAI GPT-4.1 Models: Catch-Up for Enterprise with Enhanced Features

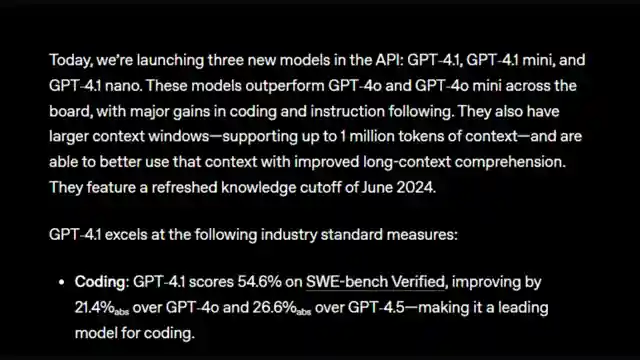

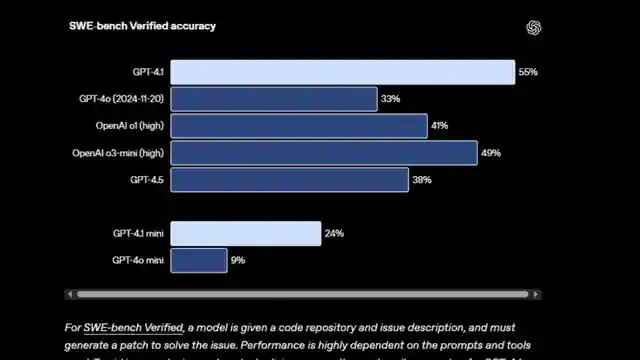

In a recent video, Sam Witteveen looks at OpenAI's newest trio of models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. These are not frontier-pushing releases. They are better described as "catch-up models," built to close the gap with competitors and aimed squarely at enterprise users. OpenAI has historically held a commanding lead in AI, but rivals such as Anthropic's Claude and Google's Gemini have been closing in, prompting this strategic move.

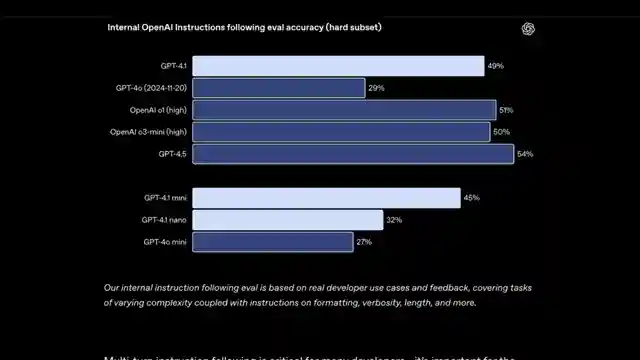

Competition among AI models has shifted toward context length, latency, coding, and instruction following. OpenAI's latest models show promise in these areas, especially instruction following: OpenAI breaks this skill down into specific behaviors, such as following a required output format and respecting negative instructions (telling the model what not to do), and highlights gains on them. There are notable misses, however: output token limits remain restrictive, and no audio model was released alongside them.

Taken together, the GPT-4.1 models bring better instruction following and lower latency, and they fill gaps left by their predecessors. The pricing, especially for the mini and nano variants, appears to take direct aim at Google's offerings. Alongside the launch, OpenAI also announced that it would deprecate GPT-4.5 Preview in its API, a move that has left many wondering about the company's direction. Finally, OpenAI published a GPT-4.1 prompting guide that explains how to get the best results from the new models.

Image copyright YouTube
Watch "GPT-4.1 - The Catchup Models" on YouTube

Viewer Reactions for GPT-4.1 - The Catchup Models

Speculation about AGI and the future of AI models

Comparisons between Google and OpenAI in terms of resources and transparency

Preference for using GPT-4o mini and Claude 3.7 in applications

Excitement about the price-to-performance ratio of the mini and nano models

Concerns about benchmarks and the performance of the new models

Switching from OpenAI's models to Gemini

Discussion of 1-million-token context windows

Suggestions that models be trained on books rather than the internet
