Microsoft Unveils Phi-4 Reasoning Models for Efficient Mathematical Inference

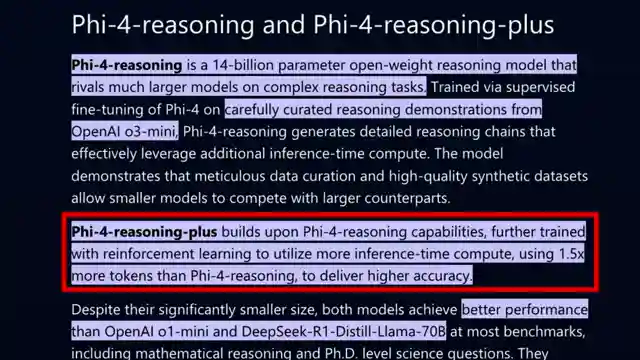

Microsoft has unveiled a lineup of Phi-4 reasoning models, each with its own twist. From the larger Phi-4-reasoning-plus to the compact Phi-4-mini-reasoning, these models are aimed squarely at mathematical reasoning. The team at Microsoft used distillation techniques, drawing on models like DeepSeek R1 to fine-tune their creations. With a focus on predicting longer chains of thought, the models are Microsoft's entry into inference-time scaling, where a model spends more tokens reasoning before it commits to an answer.

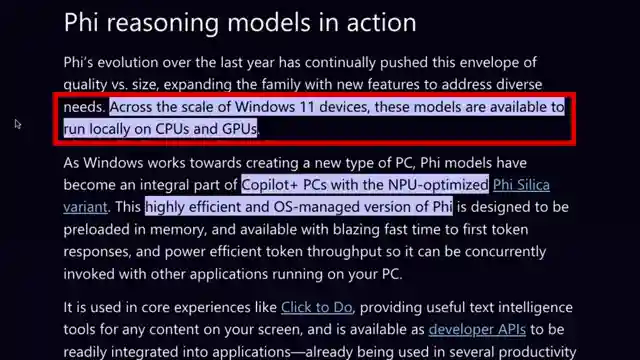

The real interest lies in how these models were trained. Continual training, supervised fine-tuning, alignment training, and reinforcement learning with verifiable rewards each play a role in shaping their capabilities. Microsoft's plans extend beyond releasing model weights: the company aims to integrate these models into Windows devices, with Phi Silica as the variant intended for local, on-device use. This move signals a shift towards smaller, more efficient, and more specialized models.
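
To make "reinforcement learning with verifiable rewards" concrete, here is a minimal sketch of what a verifiable reward for a math problem might look like. It is not Microsoft's implementation, and the source does not describe the reward in detail. The function names, the `\boxed{...}` answer convention, and the binary 0/1 scoring are all assumptions made for illustration.

```python
import re
from fractions import Fraction
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in the text, if any.

    Assumption: the model is prompted to wrap its final answer in \\boxed{}.
    Nested braces are not handled in this sketch.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Hypothetical binary reward: 1.0 if the final answer matches, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no checkable answer, so no reward
    try:
        # Compare numerically so that "0.5" and "1/2" count as equal.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        # Non-numeric answers fall back to an exact string comparison.
        return 1.0 if answer == reference.strip() else 0.0


if __name__ == "__main__":
    sample = "First I tried 4, but checking again gives \\boxed{0.5}"
    print(verifiable_reward(sample, "1/2"))
```

The point of such a reward is that it can be checked mechanically, without a learned judge: the long chain of thought earns credit only if the final answer is correct.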

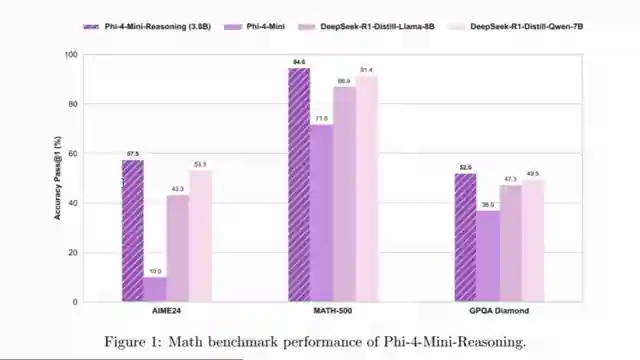

The idea of a small yet capable reasoning model has caught the attention of many in the AI community. By focusing on effective reasoning rather than on memorizing exhaustive amounts of data, Microsoft is testing how far a compact model can go. The Phi-4-mini-reasoning model, with its small size and strong performance for that size, shows what small-scale AI can do. Comparisons with other models such as Qwen3 1.7B reveal subtle differences in how each approaches reasoning.

Image copyright YouTube

Watch Microsoft Joins the Reasoning Race!! on YouTube

Viewer Reactions for Microsoft Joins the Reasoning Race!!

Karpathy's idea of a small model capable of grounding its responses to RAG or internet search is interesting

Comparison of Phi-4 to comparably sized models like Gemini 2.5

Concerns about Microsoft integrating AI models with flagship products like Excel and Outlook

Preference for Qwen 4B in tests of general knowledge and CoT logic

Gemini not showing complete thinking, only providing summaries in bullet points

Mixed opinions on Phi-4-reasoning model's performance and verbosity

Appreciation for the mini model Phi-4-r

Criticism towards Microsoft for potentially running models without explicit permission and concerns about data stealing

Mention of Phi-4-reasoning-plus model's tendency to provide excessive output even for simple questions

Highlighting Phi-4-reasoning as the first open-weight multimodal reasoning model, though lagging behind other models in performance
