Mercury: Revolutionizing Language Models with Diffusion Technology

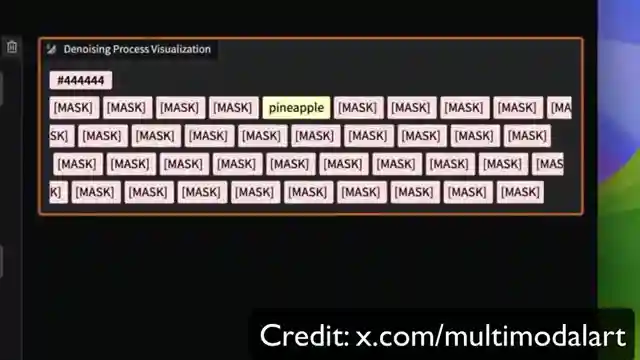

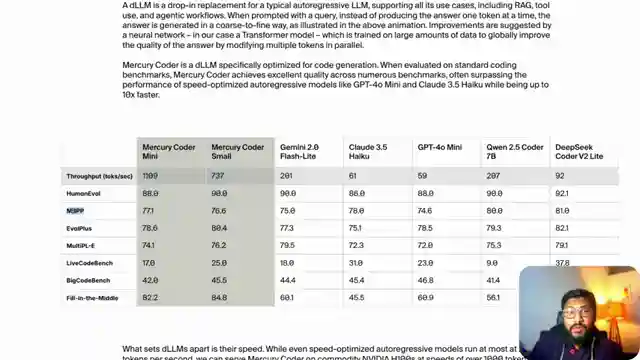

A new contender has emerged among language models: Mercury, from Inception Labs. Where autoregressive models generate text one token at a time, Mercury uses diffusion. It starts from noise and refines the entire output at once over a series of denoising steps. It's like watching a painter rough out the whole canvas and then sharpen it pass by pass, instead of filling it in one brushstroke after another. The payoff is speed: up to 1100 tokens per second, enough to leave competitors in the dust. In a world where time is money, that matters, and Mercury backs it up with strong results in coding evaluations, setting a new standard for efficiency and performance.
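To make the contrast concrete, here is a toy Python sketch. It is not Mercury's algorithm, whose internals the article does not describe. It assumes a simplified masked-denoising schedule, and both functions (hypothetical names) copy tokens from a known target instead of calling a real model. The only thing it illustrates is how many model calls each decoding style needs.

```python
import random

MASK = "<mask>"  # placeholder for a position that is still "noise"


def autoregressive_decode(target):
    """Emit one token per model call, left to right."""
    output, calls = [], 0
    for token in target:
        # A real model would predict this token from the prefix so far.
        output.append(token)
        calls += 1
    return output, calls


def diffusion_style_decode(target, steps, seed=0):
    """Start fully masked and fill in a batch of positions on every step.

    Assumption: the output length is fixed up front and each step
    "denoises" an equal share of the positions still masked.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    rng = random.Random(seed)
    output = [MASK] * len(target)
    masked = list(range(len(target)))
    rng.shuffle(masked)  # positions are resolved in no particular order
    calls = 0
    for step in range(steps):
        if not masked:
            break
        remaining_steps = steps - step
        batch = -(-len(masked) // remaining_steps)  # ceiling division
        reveal, masked = masked[:batch], masked[batch:]
        for i in reveal:
            # A real model would predict all positions in parallel here.
            output[i] = target[i]
        calls += 1
    return output, calls


tokens = "def add(a, b): return a + b".split()
ar_out, ar_calls = autoregressive_decode(tokens)
diff_out, diff_calls = diffusion_style_decode(tokens, steps=3)
print(f"autoregressive: {ar_calls} calls, diffusion-style: {diff_calls} calls")
```

For this seven-token example the autoregressive loop needs seven calls and the diffusion-style loop needs three. The number of calls in the second case depends on the step count, not on the output length, which is the intuition behind the speed claim. A real model pays more per call, so the actual speedup depends on the model and hardware.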

But hold on, folks, that's not all! A Chinese research lab has released its own diffusion language model under the MIT license, proving that innovation knows no borders. The model can craft jokes and messages with finesse, showing that the approach works for general text and not only for code. With new architectures like these arriving in quick succession, it's a thrilling time to watch language models evolve.

Looking closer at diffusion-based models, the room for growth is clear. The Stable Diffusion family of image models has already shown how far this approach can go with scale, and that hints at what diffusion language models might achieve as they grow. Pair the new architecture with enough compute and AI could do more than assist us: it could astound us. So buckle up, ladies and gentlemen, because the ride to the future of AI is going to be one heck of a journey.

Image copyright YouTube

Watch "This Diffusion LLM Breaks the AI Rules, Yet Works!" on YouTube

Viewer Reactions for "This Diffusion LLM Breaks the AI Rules, Yet Works!"

Transformer-based LMs add contextual information, while diffusion-based LMs subtract conditional noise

The speed of the diffusion technique is impressive, but will the answers be as good?

Diffusion models may lead to less need for GPUs

The concept is not new, but the question remains of how the model determines the length of the response

Diffusion models could be better for parallel processing

Some users question if diffusion models are the best tool for the job, especially for images

Comparisons are made between the new technology and GPT-3.5

Questions are raised about the context window in diffusion LMs

Interest in open-source diffusion LMs

Speculation about OpenAI potentially poaching the researchers behind the technology
