Mastering Local Model Interpretability with LIME: A Deep Dive

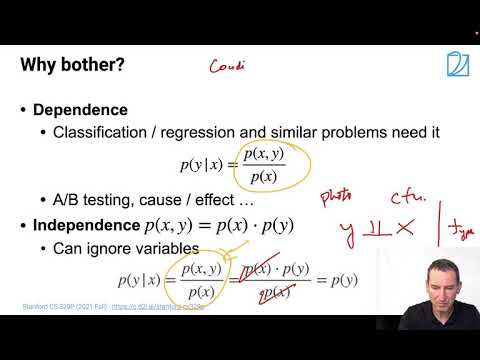

In this thrilling episode from the Alex Smola channel, the team delves into the fascinating world of fitting simple models locally with the ingenious tool called LIME (Local Interpretable Model-agnostic Explanations). Picture this: you have a complex black box classifier, a real head-scratcher, right? Well, LIME swoops in like a superhero, approximating this behemoth around one prediction at a time with a simple linear model. It's like turning a Rubik's Cube into a Lego set: suddenly, everything makes sense!
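To make the local-approximation idea concrete, here is a minimal sketch in the spirit of LIME, not the `lime` library's API and not necessarily the lecture's exact formulation. It assumes a hypothetical `black_box` function, Gaussian perturbations around the query point, and an exponential proximity kernel. All names and parameter values are illustrative.

```python
import numpy as np

def black_box(X):
    """Hypothetical nonlinear model standing in for the black box's score."""
    return np.sin(X[:, 0]) + X[:, 1] ** 2

def local_linear_surrogate(predict, x0, n_samples=5000, scale=0.1,
                           kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to `predict` around `x0`."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the query point to probe the black box nearby.
    X = x0 + scale * rng.standard_normal((n_samples, x0.size))
    y = predict(X)
    # 2. Weight each sample by its closeness to x0 (assumed exponential kernel).
    sq_dist = np.sum((X - x0) ** 2, axis=1)
    weights = np.exp(-sq_dist / kernel_width ** 2)
    # 3. Weighted least squares on [1, x - x0]; the slopes are the explanation.
    A = np.hstack([np.ones((n_samples, 1)), X - x0])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]

x0 = np.array([0.0, 1.0])
intercept, slopes = local_linear_surrogate(black_box, x0)
print("local intercept:", intercept)
print("local slopes:", slopes)
```

For this toy function the fitted slopes should land close to the gradient at `x0`, which is the sense in which the linear model "explains" the black box locally. The real LIME method additionally works on interpretable feature representations and favors sparse explanations, which this sketch leaves out.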

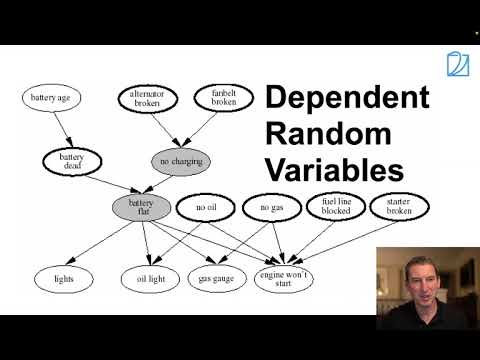

But hold on to your seats because things get even more riveting. The team zooms in on the crucial aspect of selecting a solid reference baseline when measuring variable influence, since a variable's influence only means something relative to the baseline it is compared against. It's like choosing the perfect gear for a high-speed race: one wrong move, and you're off the track! They dissect the intricate dance between dependent random variables, shedding light on the delicate balance between the direct impact of changing a variable and the sneaky side effects that ripple through the variables that depend on it. It's a bit like navigating a treacherous mountain road: one wrong turn, and you're hanging off a cliff!
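A small sketch can show why the baseline choice matters. It assumes a hypothetical two-feature `model` with an interaction term and measures influence as the change in output when one feature is reset to its baseline value. The function names, the input, and the "dataset mean" values are made up for illustration and do not come from the lecture.

```python
import numpy as np

def model(x):
    """Hypothetical scoring function; the interaction term makes the baseline matter."""
    return 2.0 * x[0] + x[0] * x[1]

def influence(f, x, baseline, i):
    """Change in f when feature i alone is reset to its baseline value."""
    x_ref = x.copy()
    x_ref[i] = baseline[i]
    return f(x) - f(x_ref)

x = np.array([1.0, 3.0])
zero_baseline = np.zeros(2)
mean_baseline = np.array([1.0, 2.0])  # assumed dataset mean, purely illustrative

infl_zero = [influence(model, x, zero_baseline, i) for i in range(2)]
infl_mean = [influence(model, x, mean_baseline, i) for i in range(2)]
print("influence vs. all-zero baseline:", infl_zero)
print("influence vs. mean baseline:   ", infl_mean)
```

The same input and the same model give different influence scores under the two baselines, so a reported "importance" is only interpretable once the reference point is stated.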

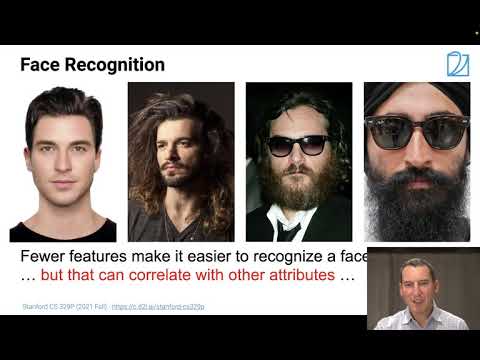

And just when you thought it couldn't get more intense, they throw in a curveball: the showdown between conditional expectations and the mighty marginal approach. It's like a battle between two heavyweight champions, each vying for the title of the ultimate variable influencer. The team unveils jaw-dropping examples, from assessing trustworthiness based on appearances to unraveling the mysteries of credit ratings. It's a rollercoaster ride of revelations and eye-opening insights that will leave you on the edge of your seat, craving more. So buckle up, gearheads, because this is one wild ride you won't want to miss!
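The difference between the two approaches can be sketched with synthetic data. The example below assumes two correlated Gaussian features and a hypothetical `model` that reads only `x2`. The marginal estimate fixes `x1` and averages over all observed `x2` values, ignoring the dependence. The conditional estimate averages only over samples whose `x1` is close to the query. The correlation, sample size, and window width are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
rho = 0.8          # assumed correlation between the two features
n = 200_000

# Two dependent standard-normal features.
x1 = rng.standard_normal(n)
x2 = rho * x1 + np.sqrt(1 - rho ** 2) * rng.standard_normal(n)

def model(x1, x2):
    """Hypothetical model that uses x2 only; x1 has no direct effect."""
    return 3.0 * x2

x1_query = 1.0

# Marginal approach: set x1 to the query value, keep x2 from the full data.
marginal = model(np.full(n, x1_query), x2).mean()

# Conditional approach: keep only samples whose x1 is near the query value,
# so x2 follows its distribution given x1.
near = np.abs(x1 - x1_query) < 0.05
conditional = model(x1[near], x2[near]).mean()

print("marginal estimate:   ", marginal)
print("conditional estimate:", conditional)
```

The marginal estimate stays near zero because `x1` has no direct effect on this model, while the conditional estimate is pulled toward `3 * rho * x1_query` because knowing `x1` carries information about `x2`. Which answer is the right one depends on whether the question is about the model's direct use of a variable or about what the variable reveals.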

Image copyright YouTube

Watch Lecture 15, Part 2, Local Models and Conditioning on YouTube

Related Articles

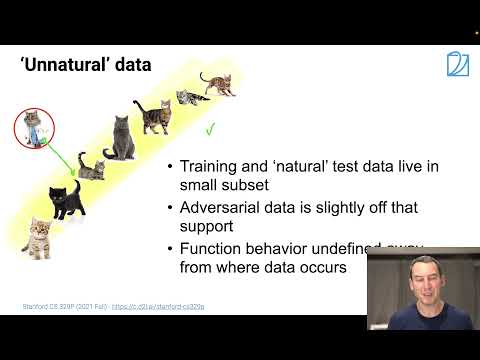

Unveiling Adversarial Data: Deception in Recognition Systems

Explore the world of adversarial data with Alex Smola's team, uncovering how subtle tweaks deceive recognition systems. Discover the power of invariances in enhancing classifier accuracy and defending against digital deception.

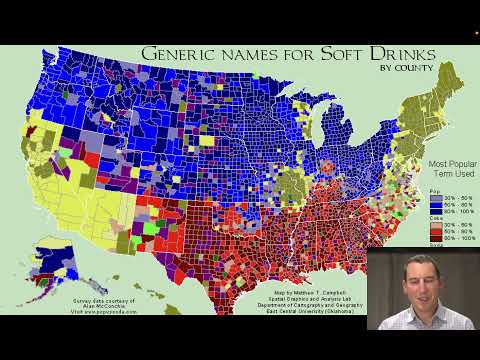

Unveiling Coverage Shift and AI Bias: Optimizing Algorithms with Generators and GANs

Explore coverage shift and AI bias in this insightful Alex Smola video. Learn about using generators, GANs, and dataset consistency to address biases and optimize algorithm performance. Exciting revelations await in this deep dive into the world of artificial intelligence.

Mastering Coverage Shift in Machine Learning

Explore coverage shift in machine learning with Alex Smola. Learn how data discrepancies can derail classifiers, leading to failures in real-world applications. Discover practical solutions and pitfalls to avoid in this insightful discussion.

Mastering Random Variables: Independence, Sequences, and Graphs with Alex Smola

Explore dependent vs. independent random variables, sequence models like RNNs, and graph concepts in this dynamic Alex Smola lecture. Learn about independence tests, conditional independence, and innovative methods for assessing dependence. Dive into covariance operators and information theory for a comprehensive understanding of statistical relationships.