Game Theory and Shapley Values: Micronesian Parliament Insights

This lecture from the Alex Smola channel introduces game theory through a toy example: the Micronesian parliament. Its 14 members are split across parties A, B, C, and D, and they are voting on a million-dollar bill that needs a majority of eight votes to pass. By analyzing which coalitions can reach that threshold, the lecture shows how bargaining power emerges in this political microcosm.
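To make the coalition analysis concrete, here is a minimal Python sketch that lists which groups of parties can pass the bill. The summary above does not give the seat count per party, so the split `A=5, B=4, C=3, D=2` is purely a hypothetical assumption chosen to add up to 14. The function names are also illustrative.

```python
from itertools import combinations

# ASSUMPTION: the per-party seat counts are not stated in the source.
# This split is hypothetical and only chosen so that the total is 14.
SEATS = {"A": 5, "B": 4, "C": 3, "D": 2}
QUOTA = 8  # votes needed to pass the bill (from the text)


def winning_coalitions(seats, quota):
    """Return every group of parties whose combined seats reach the quota."""
    parties = sorted(seats)
    wins = []
    for size in range(1, len(parties) + 1):
        for coalition in combinations(parties, size):
            if sum(seats[p] for p in coalition) >= quota:
                wins.append(coalition)
    return wins


def minimal_winning(wins):
    """Keep only winning coalitions that contain no smaller winning coalition."""
    return [c for c in wins if not any(set(o) < set(c) for o in wins)]


wins = winning_coalitions(SEATS, QUOTA)
minimal = minimal_winning(wins)
print("winning:", wins)
print("minimal winning:", minimal)
```

Under these assumed seat counts, no party can pass the bill alone, and the minimal winning coalitions are A with B, A with C, and B with C and D together.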

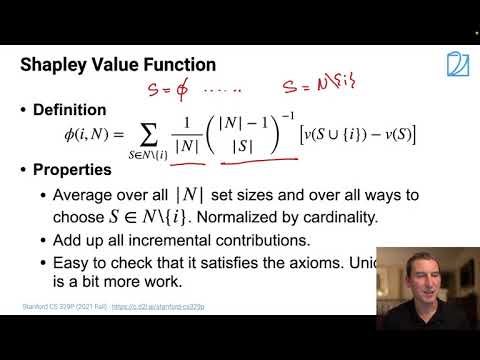

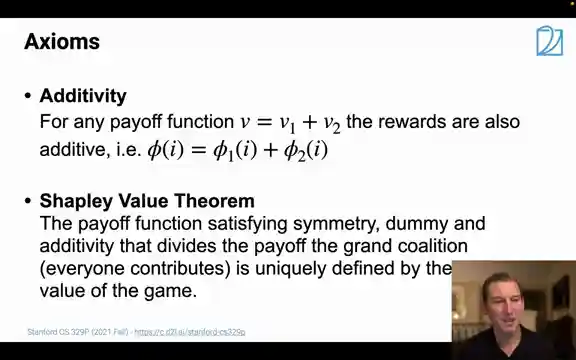

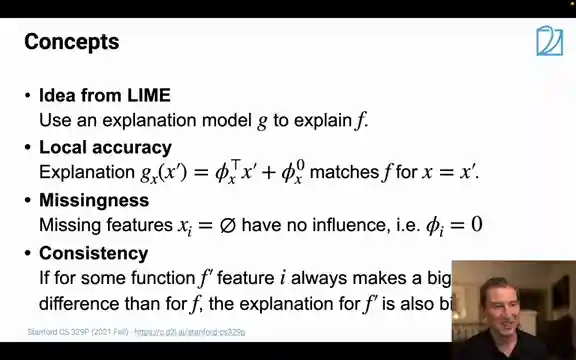

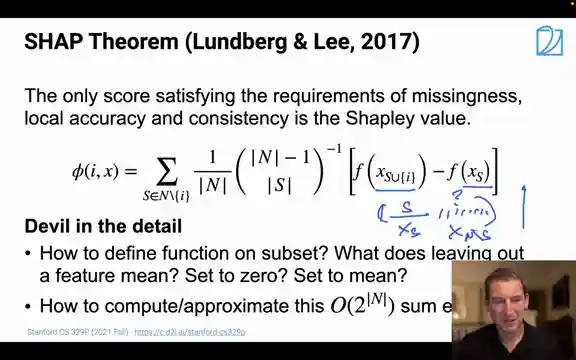

Enter the Shapley value, a tool from cooperative game theory for assigning fair payoffs to the players in a coalition. The lecture then draws a parallel between political parties and model features: just as each party contributes to passing the bill, each feature contributes to a model's output. By stating the conditions a feature explainer should satisfy, such as accuracy and consistency, the lecture arrives at the Shapley value as the unique explainer that meets them.
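As a rough illustration of how such payoffs can be computed, the sketch below evaluates the standard Shapley formula for a small voting game: each party's value is its marginal contribution to a coalition, averaged over all orders in which the coalition could form. The seat split `A=5, B=4, C=3, D=2` is a hypothetical assumption (the summary does not give one), and the helper names are illustrative rather than taken from the lecture.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial

# ASSUMPTION: hypothetical seat counts summing to 14; not given in the source.
SEATS = {"A": 5, "B": 4, "C": 3, "D": 2}
QUOTA = 8  # majority needed to pass the bill (from the text)


def value(coalition):
    """Characteristic function: 1 if the coalition can pass the bill, else 0."""
    return 1 if sum(SEATS[p] for p in coalition) >= QUOTA else 0


def shapley_values(players, v):
    """Exact Shapley values by summing over all subsets of the other players."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = Fraction(0)
        for k in range(n):
            # Probability weight of a predecessor set of size k.
            weight = Fraction(factorial(k) * factorial(n - k - 1), factorial(n))
            for subset in combinations(others, k):
                # Marginal contribution of p when joining this subset.
                total += weight * (v(subset + (p,)) - v(subset))
        phi[p] = total
    return phi


phi = shapley_values(list(SEATS), value)
for party, share in phi.items():
    print(party, share, f"(${float(share) * 1_000_000:,.0f} of the bill)")
```

With these assumed seats the shares come out as 5/12, 1/4, 1/4, and 1/12 for A, B, C, and D. Note that B and C receive the same share despite holding different numbers of seats, because they are pivotal in the same number of orderings. The shares sum to 1, so the full value of the bill is distributed.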

The catch is computational cost. Computing Shapley values exactly means evaluating every subset of features, and the number of subsets grows exponentially with the number of features. Researchers have developed faster methods such as Fast TreeSHAP to get around this obstacle. Sampling techniques and sparse models further improve the practicality and accuracy of Shapley values in real-world applications, offering a clearer understanding of feature importance.
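The sampling idea can be sketched with plain Monte Carlo over random orderings of the players. This is a generic permutation-sampling estimator, not Fast TreeSHAP and not necessarily the method shown in the lecture. The seat split `A=5, B=4, C=3, D=2` is again a hypothetical assumption, and the function names and the sample count are illustrative.

```python
import random

# ASSUMPTION: hypothetical seat counts summing to 14; not given in the source.
SEATS = {"A": 5, "B": 4, "C": 3, "D": 2}
QUOTA = 8  # majority needed to pass the bill (from the text)


def value(coalition):
    """Characteristic function: 1 if the coalition can pass the bill, else 0."""
    return 1 if sum(SEATS[p] for p in coalition) >= QUOTA else 0


def sampled_shapley(players, v, num_samples, seed=0):
    """Estimate Shapley values by averaging marginal contributions
    over randomly shuffled player orderings."""
    rng = random.Random(seed)
    totals = {p: 0.0 for p in players}
    order = list(players)
    for _ in range(num_samples):
        rng.shuffle(order)
        members = []
        previous = v(members)
        for p in order:
            members.append(p)
            current = v(members)
            totals[p] += current - previous  # marginal contribution of p
            previous = current
    return {p: t / num_samples for p, t in totals.items()}


estimates = sampled_shapley(list(SEATS), value, num_samples=20000)
for party, share in estimates.items():
    print(party, round(share, 3))
```

Each sample costs one pass over the players instead of a sum over all subsets, so the cost scales with the number of samples rather than exponentially with the number of players. The price is that the result is an estimate whose error shrinks as more orderings are drawn.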

Image copyright YouTube


Watch Lecture 15, Part 3, Axiomatic Explanations on YouTube

Viewer Reactions for Lecture 15, Part 3, Axiomatic Explanations

Availability of book in paperback/hardcover format

Request for information on when it will be available for sale

Related Articles

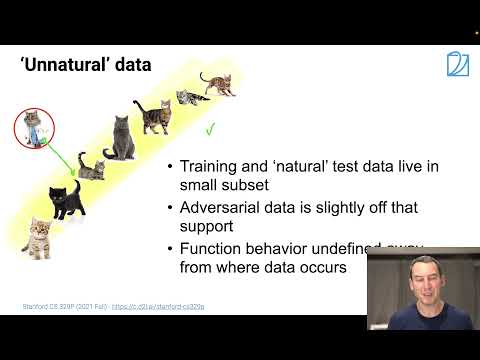

Unveiling Adversarial Data: Deception in Recognition Systems

Explore the world of adversarial data with Alex Smola's team, uncovering how subtle tweaks deceive recognition systems. Discover the power of invariances in enhancing classifier accuracy and defending against digital deception.

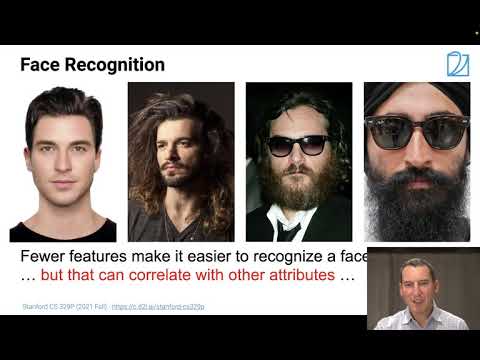

Unveiling Coverage Shift and AI Bias: Optimizing Algorithms with Generators and GANs

Explore coverage shift and AI bias in this insightful Alex Smola video. Learn about using generators, GANs, and dataset consistency to address biases and optimize algorithm performance. Exciting revelations await in this deep dive into the world of artificial intelligence.

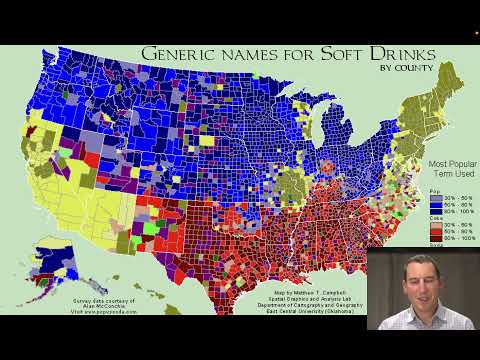

Mastering Coverage Shift in Machine Learning

Explore coverage shift in machine learning with Alex Smola. Learn how data discrepancies can derail classifiers, leading to failures in real-world applications. Discover practical solutions and pitfalls to avoid in this insightful discussion.

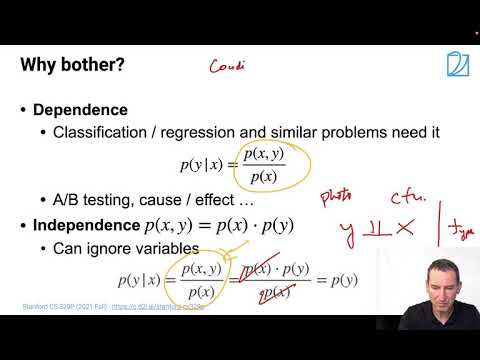

Mastering Random Variables: Independence, Sequences, and Graphs with Alex Smola

Explore dependent vs. independent random variables, sequence models like RNNs, and graph concepts in this dynamic Alex Smola lecture. Learn about independence tests, conditional independence, and innovative methods for assessing dependence. Dive into covariance operators and information theory for a comprehensive understanding of statistical relationships.