Mastering Language Models: Size, Performance, and Cost Comparison

- Authors

- Published on

- Published on

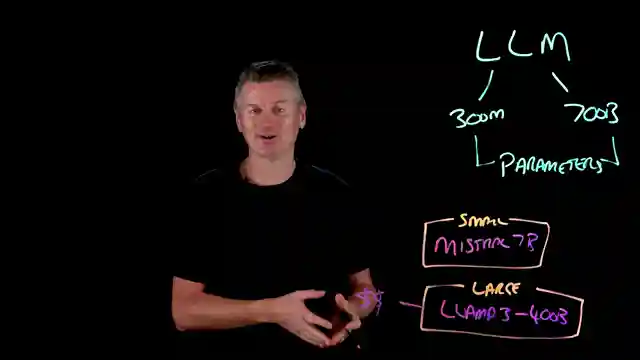

In this riveting episode by IBM Technology, we dive headfirst into the colossal world of language models, from the pint-sized networks with a mere 300 million parameters to the behemoths boasting hundreds of billions or even nearing a trillion parameters. These models, measured in parameters, are the backbone of neural networks, storing the crucial floating point weights that shape their learning process. Take Mistral 7B, a small fry with 7 billion parameters, and then shift gears to the gargantuan Llama 3, flexing its muscles with a whopping 400 billion parameters, placing it firmly in the heavyweight category.

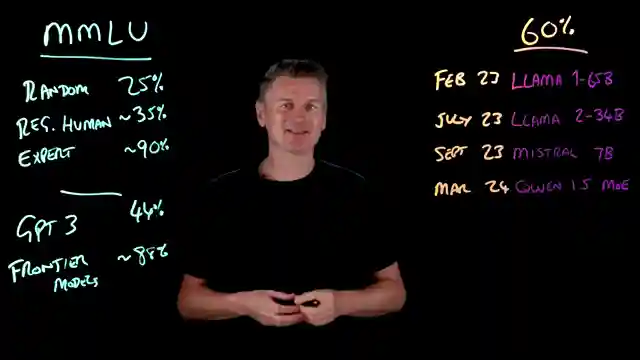

But size isn't everything in this high-octane race for language model supremacy. While larger models offer the horsepower to memorize vast amounts of data, support multiple languages, and tackle intricate reasoning tasks, they come at a cost - demanding hefty amounts of compute power, energy, and memory. On the other end of the spectrum, smaller models are revving their engines, rapidly closing the gap by achieving impressive milestones with fewer parameters. The benchmark of 60% marks the threshold for competent generalist behavior, a line that smaller models are crossing at a breakneck pace, showcasing their agility and efficiency in the language model arena.

When it comes to choosing the right model for the job, it's not just about size; it's about fit. Large models shine in scenarios like broad spectrum code generation, document processing, multilingual translation, and capturing subtle nuances in language. Meanwhile, small models steal the spotlight in on-device AI applications, summarization tasks, and enterprise chatbots, delivering top-notch performance with lightning-fast latency and cost-effectiveness. The verdict? Whether you go big or stay small, let your specific use case be your guide in this thrilling race to harness the power of language models.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch Small vs. Large AI Models: Trade-offs & Use Cases Explained on Youtube

Viewer Reactions for Small vs. Large AI Models: Trade-offs & Use Cases Explained

IBM allowing others to use Granite models for free

Quantisation improving models with the same parameters

RAG being useful for turning Granite-13B into an enterprise chatbot

Fine tuning and its potential hype

Workflow being more beneficial than scaling the model

Small language models (SLM) with millions of parameters for RAG on niche topics

Concerns about models overfitting on the MMLU benchmark

Advancements in data cleaning impacting improvements

Use cases for running tools via MCP with small models

Speculation on training on test data

Related Articles

Mastering Identity Propagation in Agentic Systems: Strategies and Challenges

IBM Technology explores challenges in identity propagation within agentic systems. They discuss delegation patterns and strategies like OAuth 2, token exchange, and API gateways for secure data management.

AI vs. Human Thinking: Cognition Comparison by IBM Technology

IBM Technology explores the differences between artificial intelligence and human thinking in learning, processing, memory, reasoning, error tendencies, and embodiment. The comparison highlights unique approaches and challenges in cognition.

AI Job Impact Debate & Market Response: IBM Tech Analysis

Discover the debate on AI's impact on jobs in the latest IBM Technology episode. Experts discuss the potential for job transformation and the importance of AI literacy. The team also analyzes the market response to the Scale AI-Meta deal, prompting tech giants to rethink data strategies.

Enhancing Data Security in Enterprises: Strategies for Protecting Merged Data

IBM Technology explores data utilization in enterprises, focusing on business intelligence and AI. Strategies like data virtualization and birthright access are discussed to protect merged data, ensuring secure and efficient data access environments.