Mastering DeepSeek: Hacks for Agent Integration with Pydantic AI

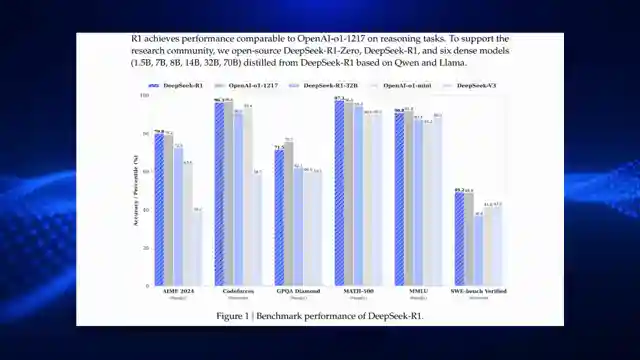

In this episode, the team looks at DeepSeek, and in particular its R1 reasoning model, and at how to get structured responses out of it. The model does not support function calling or JSON output, both of which are central to building agents. The team shows a few hacks that work around these gaps and integrate DeepSeek into agents using Pydantic AI. They also note that the Gemini 2.0 thinking models have similar limitations.

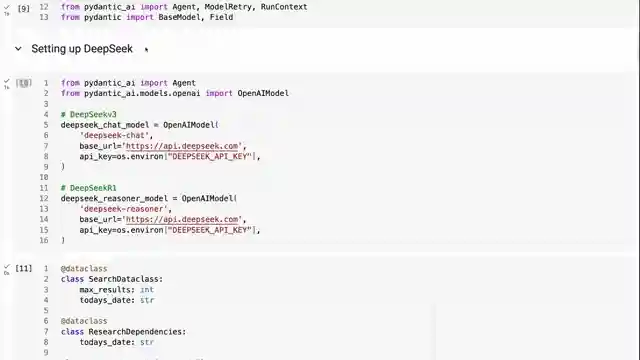

The team then shows DeepSeek's own answer on how to get structured responses from it, which stresses prompt engineering and API configuration. They go on to demonstrate a practical way to use Pydantic AI with DeepSeek to obtain structured outputs. With the DeepSeek API set up inside Pydantic AI, switching between models to suit a specific task is straightforward.
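As a rough illustration of switching models per task, the sketch below keeps the connection settings for DeepSeek's OpenAI-compatible API in one place. The base URL and the model names `deepseek-chat` and `deepseek-reasoner` come from DeepSeek's public API. The `ModelConfig` class, the task names, and the `DEEPSEEK_API_KEY` variable are assumptions made for this example, and how these settings are handed to Pydantic AI depends on the installed version.

```python
import os
from dataclasses import dataclass

# DeepSeek exposes an OpenAI-compatible endpoint at this base URL.
DEEPSEEK_BASE_URL = "https://api.deepseek.com"


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical container for the settings an OpenAI-compatible client needs."""
    model_name: str
    base_url: str
    supports_tools: bool  # whether function calling can be relied on


# Task names are illustrative, not taken from the video.
# supports_tools reflects the article: R1 lacked function calling at the time.
MODELS = {
    "search": ModelConfig("deepseek-chat", DEEPSEEK_BASE_URL, supports_tools=True),
    "reasoning": ModelConfig("deepseek-reasoner", DEEPSEEK_BASE_URL, supports_tools=False),
}


def config_for(task: str) -> ModelConfig:
    """Return the model settings for a task, failing loudly on unknown tasks."""
    try:
        return MODELS[task]
    except KeyError:
        raise ValueError(f"no model configured for task {task!r}") from None


def api_key() -> str:
    """Read the key from the environment (the variable name is an assumption)."""
    return os.environ.get("DEEPSEEK_API_KEY", "")
```

Keeping the choice behind one lookup means an agent that needs tool calls can ask for the `"search"` configuration, while a step that only needs free-text reasoning can ask for `"reasoning"`.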

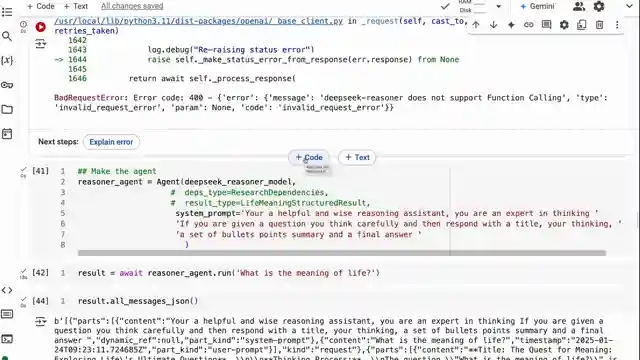

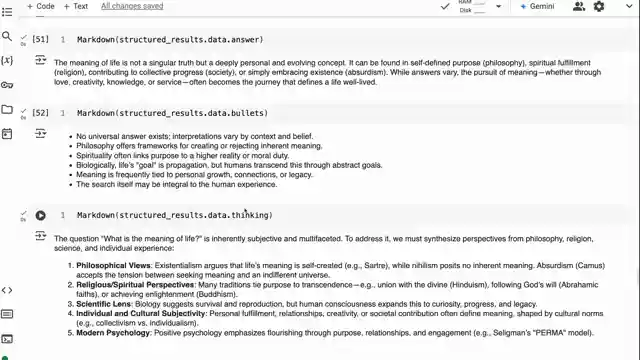

In the hands-on portion, the team uses the DeepSeek chat model for a search agent, because the DeepSeek R1 reasoning model cannot handle function calling. To work around this, they pass R1's output to a simpler model that formats it into structured output. They also stress capturing both the content and the reasoning content from DeepSeek R1's responses, and walk through the structure of the model's output to show where the chain of thought is returned.
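The two-stage idea can be sketched without any model library. In the minimal example below, the reasoning model and the formatting model are passed in as plain functions, so the sketch runs offline with stand-ins. The `Answer` fields, the prompt wording, and the function names are all hypothetical and are not taken from the video.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    """Hypothetical structured output; the field names are illustrative."""
    summary: str
    confidence: float


def structured_answer(
    question: str,
    reason: Callable[[str], str],
    format_as_json: Callable[[str], str],
) -> Answer:
    """Stage 1: free-text reasoning. Stage 2: a simpler model formats it."""
    draft = reason(question)  # reasoning model; no tools or JSON mode needed
    formatting_prompt = (
        "Rewrite the following answer as JSON with keys "
        '"summary" (string) and "confidence" (number between 0 and 1).\n\n'
        + draft
    )
    raw = format_as_json(formatting_prompt)  # simpler model that can emit JSON
    data = json.loads(raw)
    return Answer(summary=str(data["summary"]), confidence=float(data["confidence"]))


# Stand-ins for real model calls so the sketch runs offline.
def fake_reasoner(question: str) -> str:
    return "Paris is the capital of France. I am quite sure."


def fake_formatter(prompt: str) -> str:
    return '{"summary": "Paris is the capital of France.", "confidence": 0.9}'


result = structured_answer("What is the capital of France?", fake_reasoner, fake_formatter)
print(result)
```

In a real agent the two callables would wrap the reasoning model and the simpler formatting model, and the parsing step would likely be handled by the framework's own validation rather than a bare `json.loads`.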

The team then turns to multi-round conversations, focusing on how the chain of thought should be stored and used between turns. They show how to extract both the content and the reasoning content from DeepSeek R1's responses with a standard OpenAI client call.
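A minimal sketch of that extraction follows. It assumes the `openai` Python package pointed at DeepSeek's OpenAI-compatible endpoint, with the chain of thought returned in a `reasoning_content` field next to `content`. The helper names are hypothetical. The choice to send only `content` back in later turns follows DeepSeek's API guidance as understood at the time of writing and should be checked against the current documentation.

```python
from types import SimpleNamespace


def split_reply(message) -> tuple[str, str]:
    """Return (reasoning, answer) from a chat completion message."""
    reasoning = getattr(message, "reasoning_content", None) or ""
    answer = message.content or ""
    return reasoning, answer


def next_turn(history: list[dict], message, user_text: str) -> list[dict]:
    """Build the next request: keep the answer, leave the reasoning out."""
    _, answer = split_reply(message)
    return history + [
        {"role": "assistant", "content": answer},
        {"role": "user", "content": user_text},
    ]


def ask_deepseek(messages: list[dict], api_key: str):
    """Live call (not run here); requires the `openai` package and a key."""
    from openai import OpenAI  # imported lazily so the sketch runs without it

    client = OpenAI(api_key=api_key, base_url="https://api.deepseek.com")
    response = client.chat.completions.create(
        model="deepseek-reasoner", messages=messages
    )
    return response.choices[0].message


# Offline stand-in shaped like the message object returned by the API.
fake = SimpleNamespace(
    reasoning_content="9.11 has the smaller tenths digit...",
    content="9.8 is larger.",
)
history = [{"role": "user", "content": "Which is larger, 9.11 or 9.8?"}]
reasoning, answer = split_reply(fake)
messages = next_turn(history, fake, "Explain in one sentence.")
```

The reasoning text can still be stored separately, for example for logging or for a downstream formatting step, while the conversation history carries only the final answers.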

Image copyright YouTube

Watch DeepSeek R1 for Structured Agents on YouTube

Viewer Reactions for DeepSeek R1 for Structured Agents

- Using a reasoning model as a tool for various processes
- Combining with Gemini Flash for cleaning output
- Concerns about effort and potential obsolescence of techniques
- Mention of potential new models like o3 and Opus
- Converting JSON output to XML
- Building an MCP server with reasoning tools
- Appreciation for providing Hindi track
- Mention of trying Kimi 1.5
- Using models for cybersecurity and penetration testing
- Comparison between DeepSeek and OpenAI search
